-

Leaky ReLU Activation Function.

Table Of Contents:

- What Is Leaky ReLU Activation Function?
- Formula & Diagram For Leaky ReLU Activation Function.
- Where To Use Leaky ReLU Activation Function?
- Advantages & Disadvantages Of Leaky ReLU Activation Function.

(1) What Is Leaky ReLU Activation Function?

The Leaky ReLU activation function is a modified version of the standard ReLU (Rectified Linear Unit) that addresses the "dying ReLU" problem. A standard ReLU neuron can become permanently inactive: if its input is negative for every training example, it always outputs zero and receives zero gradient, so its weights stop updating. Leaky ReLU introduces a small slope for negative inputs, so the neuron still produces a small non-zero output and gradient when its input is negative, which keeps it from becoming completely inactive.

(2) Formula & Diagram For Leaky ReLU Activation Function.
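To make the "small slope for negative inputs" concrete, here is a minimal Python sketch of Leaky ReLU and its derivative. The slope `ALPHA = 0.01` is an assumption (a commonly used default), not a value stated in this article, and the function names are hypothetical.

```python
# Minimal Leaky ReLU sketch.
# ALPHA = 0.01 is an ASSUMED slope for negative inputs; the article excerpt does not give a value.
ALPHA = 0.01


def leaky_relu(x: float, alpha: float = ALPHA) -> float:
    """Return x for positive inputs and alpha * x otherwise."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = ALPHA) -> float:
    """Derivative: 1 for positive inputs, alpha otherwise.

    The derivative is undefined at exactly 0; this sketch uses alpha there by convention.
    """
    return 1.0 if x > 0 else alpha


if __name__ == "__main__":
    for x in (-3.0, -0.5, 0.0, 2.0):
        print(f"x={x:5.1f}  f(x)={leaky_relu(x):7.3f}  f'(x)={leaky_relu_grad(x)}")
```

Because the derivative for negative inputs is `alpha` rather than 0, gradient descent can still adjust the weights of a neuron whose inputs are currently negative, which is exactly what a plain ReLU cannot do.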

-

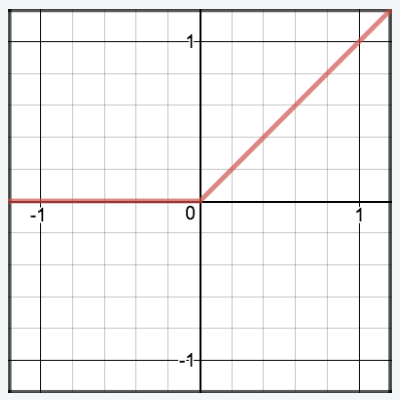

Rectified Linear Unit (ReLU) Activation.

Table Of Contents:

- What Is Rectified Linear Unit (ReLU) Activation?
- Formula & Diagram For Rectified Linear Unit (ReLU) Activation.
- Where To Use Rectified Linear Unit (ReLU) Activation?
- Advantages & Disadvantages Of Rectified Linear Unit (ReLU) Activation.

(1) What Is Rectified Linear Unit (ReLU) Activation?

- Returns the input if it is positive; otherwise, outputs zero.
- Simple and computationally efficient: it needs only a comparison, with no exponentials.
- Popular in deep neural networks because it helps alleviate the vanishing gradient problem: its gradient is exactly 1 for every positive input, so it does not saturate the way sigmoid and tanh do for inputs of large magnitude.

(2) Formula & Diagram For Rectified Linear Unit (ReLU) Activation.

Formula: $f(x) = \max(0, x)$

(3) Where To Use Rectified Linear Unit (ReLU) Activation?

Hidden
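A minimal Python sketch of ReLU and its derivative, plus a rough illustration of the vanishing-gradient point above. The names `relu`, `relu_grad` and `sigmoid_grad` are hypothetical, and the 10-layer chain multiplies only the activation derivatives while ignoring the weights, which is a simplifying assumption rather than how a real network's gradient is computed.

```python
import math


def relu(x: float) -> float:
    """ReLU: return the input if it is positive, otherwise 0."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise.

    The derivative is undefined at exactly 0; this sketch uses 0 there by convention.
    """
    return 1.0 if x > 0 else 0.0


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid, s(x) * (1 - s(x)); its maximum is 0.25 at x = 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


if __name__ == "__main__":
    inputs = [-2.0, -0.1, 0.0, 0.5, 3.0]
    print([relu(x) for x in inputs])       # [0.0, 0.0, 0.0, 0.5, 3.0]
    print([relu_grad(x) for x in inputs])  # [0.0, 0.0, 0.0, 1.0, 1.0]

    # Product of activation derivatives along a chain of `depth` layers
    # (weights ignored: a simplifying assumption for illustration).
    depth = 10
    print(relu_grad(1.0) ** depth)     # 1.0
    print(sigmoid_grad(0.0) ** depth)  # 9.5367431640625e-07 (0.25 ** 10)
```

Even at its best point the sigmoid derivative shrinks the product to about one millionth over ten layers, while ReLU keeps it at 1 for positive inputs. The flip side is visible in the second line of output: every input at or below zero gets a gradient of 0, which is the mechanism behind the "dying ReLU" problem.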

-

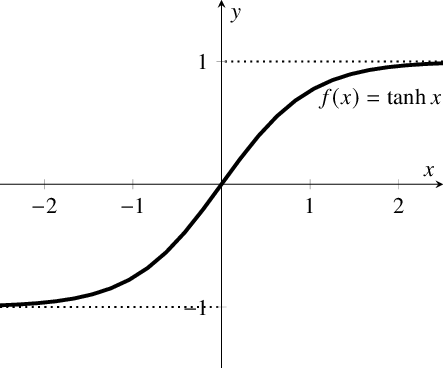

Hyperbolic Tangent (tanh) Activation.

Table Of Contents:

- What Is Hyperbolic Tangent (tanh) Activation?
- Formula & Diagram For Hyperbolic Tangent (tanh) Activation.
- Where To Use Hyperbolic Tangent (tanh) Activation?
- Advantages & Disadvantages Of Hyperbolic Tangent (tanh) Activation.

(1) What Is Hyperbolic Tangent (tanh) Activation?

- Maps the input to a value between -1 and 1.
- Similar to the sigmoid function but centred around zero: $\tanh(0) = 0$, and tanh is a rescaled sigmoid, $\tanh(x) = 2\sigma(2x) - 1$, where $\sigma$ is the sigmoid function.
- Commonly used in hidden layers of neural networks.

(2) Formula & Diagram For Hyperbolic Tangent (tanh) Activation.

Formula: $\tanh(x) = \dfrac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$

(3) Where To Use Hyperbolic Tangent (tanh) Activation?

Hidden Layers of Neural Networks: The tanh activation function is often
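The sketch below evaluates tanh directly from its exponential definition, checks it against Python's built-in `math.tanh`, and compares it with the rescaled sigmoid $2\sigma(2x) - 1$ to show the zero-centred relationship. The helper names `tanh_from_formula`, `sigmoid` and `tanh_grad` are hypothetical, chosen for illustration only.

```python
import math


def tanh_from_formula(x: float) -> float:
    """tanh(x) = (e^x - e^-x) / (e^x + e^-x), written directly from the definition.

    Note: math.exp overflows for |x| above roughly 709, so real code should call
    math.tanh instead; this version is only for illustration.
    """
    ex, emx = math.exp(x), math.exp(-x)
    return (ex - emx) / (ex + emx)


def sigmoid(x: float) -> float:
    """Logistic sigmoid, output in (0, 1) and equal to 0.5 at x = 0."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2, which equals 1 at x = 0."""
    t = math.tanh(x)
    return 1.0 - t * t


if __name__ == "__main__":
    for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(
            f"x={x:5.1f}  tanh={tanh_from_formula(x):+.4f}  "
            f"math.tanh={math.tanh(x):+.4f}  2*sigmoid(2x)-1={2 * sigmoid(2 * x) - 1:+.4f}  "
            f"sigmoid={sigmoid(x):.4f}"
        )
```

The three tanh columns agree, stay between -1 and 1, and are symmetric around 0, whereas the sigmoid column is centred on 0.5. The derivative of tanh peaks at 1 (versus 0.25 for the sigmoid), though it still approaches 0 for inputs of large magnitude.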