-

Softmax Activation Function

Table Of Contents: What Is Softmax Activation Function? Formula & Diagram For Softmax Activation Function. Why Is Softmax Function Important? When To Use Softmax Function? Advantages & Disadvantages Of Softmax Activation Function.

(1) What Is Softmax Activation Function?

The Softmax function is an activation function used in machine learning and deep learning, particularly in multi-class classification problems. Its primary role is to transform a vector of arbitrary real values into a vector of probabilities. Each output lies between 0 and 1 and the outputs sum to one, which makes it handy when the output needs to be a probability distribution over classes.

(2) Formula & Diagram
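A minimal sketch of the transformation described above, assuming the standard definition softmax(z)_i = exp(z_i) / sum_j exp(z_j). The function name `softmax` and the sample scores are illustrative assumptions, not taken from the article.

```python
import math


def softmax(scores):
    """Map a list of real-valued scores to probabilities that sum to one."""
    # Subtracting the maximum leaves the result unchanged (it cancels in the
    # ratio) but keeps exp() from overflowing on large scores.
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for a three-class problem.
probs = softmax([2.0, 1.0, 0.1])
print(probs)
print(sum(probs))
```

The largest score receives the largest probability, and the ordering of the inputs is preserved in the outputs.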

-

ReLU vs. Leaky ReLU vs. Parametric ReLU

Table Of Contents: Comparison Between ReLU vs. Leaky ReLU vs. Parametric ReLU. Which One To Use In Which Situation?

(1) Comparison Between ReLU vs. Leaky ReLU vs. Parametric ReLU

ReLU, Leaky ReLU, and Parametric ReLU (PReLU) are all popular activation functions used in deep neural networks. Let’s compare them based on their characteristics:

Rectified Linear Unit (ReLU):

Activation Function: f(x) = max(0, x)

Advantages:

Simplicity: ReLU is a simple and computationally efficient activation function.

Sparsity: ReLU outputs exactly zero for every negative input, so many activations are zero at once. This sparsity can be beneficial in reducing model complexity.

Disadvantages:

-

Exponential Linear Unit (ELU) Activation Function

Table Of Contents: What Is Exponential Linear Unit Activation Function? Formula & Diagram For Exponential Linear Unit Activation Function. Where To Use Exponential Linear Unit Activation Function? Advantages & Disadvantages Of Exponential Linear Unit Activation Function.

(1) What Is Exponential Linear Unit Activation Function?

The Exponential Linear Unit (ELU) is an activation function used in deep neural networks. It was introduced as an alternative to the Rectified Linear Unit (ReLU) and addresses some of its limitations, such as the zero gradient ReLU gives for negative inputs. The ELU function introduces non-linearity, allowing the network to learn complex relationships in the data.
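As a rough sketch of how ELU differs from ReLU, assuming the commonly used definition f(x) = x for x > 0 and f(x) = alpha * (exp(x) - 1) otherwise. The parameter name `alpha` and its default of 1.0 are assumptions for illustration; the excerpt does not state them.

```python
import math


def relu(x):
    """ReLU for comparison: f(x) = max(0, x)."""
    return max(0.0, x)


def elu(x, alpha=1.0):
    """ELU under the assumed definition; alpha scales the negative branch."""
    if x > 0:
        return x
    # expm1(x) computes exp(x) - 1 accurately for x close to zero.
    return alpha * math.expm1(x)


for x in (-3.0, -1.0, 0.0, 2.0):
    print(x, relu(x), elu(x))
```

For positive inputs the two functions agree. For negative inputs ReLU returns zero, while ELU returns a small negative value that levels off near -alpha, so the output still changes with the input.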

-

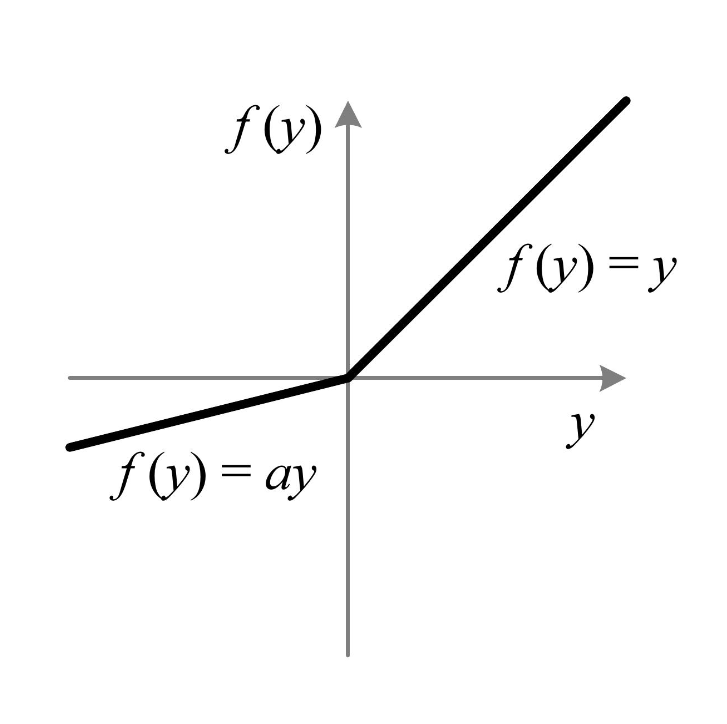

Parametric ReLU (PReLU) Activation Function

Table Of Contents: What Is Parametric ReLU (PReLU) Activation Function? Formula & Diagram For Parametric ReLU (PReLU) Activation Function. Where To Use Parametric ReLU (PReLU) Activation Function? Advantages & Disadvantages Of Parametric ReLU (PReLU) Activation Function.

(1) What Is Parametric ReLU (PReLU) Activation Function?

The Parametric ReLU (PReLU) activation function is an extension of the standard ReLU (Rectified Linear Unit) function that introduces a learnable parameter to control the slope of the function for negative inputs. For positive inputs it behaves like ReLU and passes the value through unchanged. Unlike the ReLU and Leaky ReLU, which have fixed negative slopes, the PReLU allows the slope to be