
Gradient Descent Optimizer

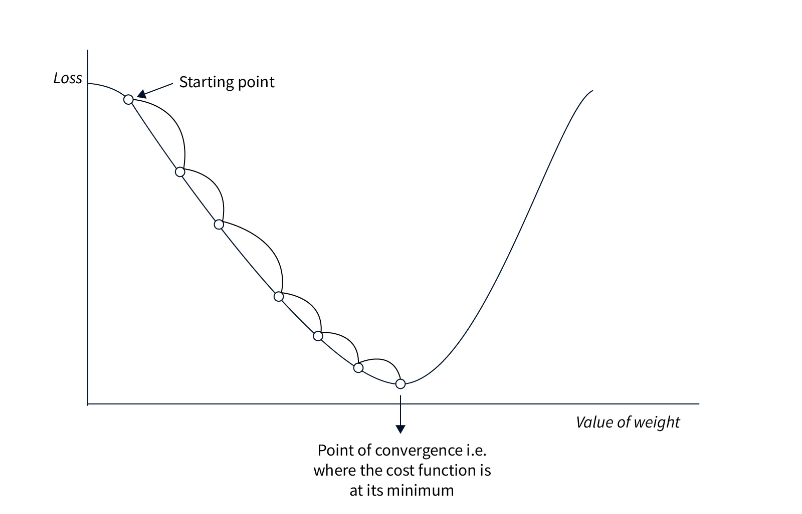

Table Of Contents:

- What Is Gradient Descent Algorithm?
- Basic Gradient Descent Algorithms
  - Batch Gradient Descent
  - Stochastic Gradient Descent (SGD)
  - Mini-Batch Gradient Descent
- Learning Rate
  - What Is The Learning Rate?
  - Learning Rate Scheduling
- Variants of Gradient Descent
  - Momentum
  - Nesterov Accelerated Gradient (NAG)
  - Adagrad
  - RMSprop
  - Adam
- Optimization Challenges
  - Local Optima
  - Plateaus and Vanishing Gradients
  - Overfitting

(1) What Is Gradient Descent Algorithm?

The Gradient Descent algorithm is an iterative optimization algorithm used to minimize a given function, typically a loss function, by adjusting the parameters of a model. It is widely used in machine learning and deep learning to update