-

Vanishing & Exploding Gradient Problem In RNN.

Table Of Contents: Vanishing & Exploding Gradient. What Is Vanishing Gradient? What Is Exploding Gradient? Solution To Vanishing & Exploding Gradient.

(1) Vanishing & Exploding Gradient: The vanishing and exploding gradient problems are common challenges in training recurrent neural networks (RNNs). They occur when the gradients used to update the network’s parameters during training become either too small (vanishing gradients) or too large (exploding gradients). Either case can hinder convergence of the training process and degrade the performance of the RNN model.

(2) Vanishing Gradient: During backpropagation, the calculation of (partial) derivatives/gradients in the weight update formula follows
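
As a rough illustration of why gradients shrink or blow up over many time steps, the sketch below multiplies a single scalar factor repeatedly. The factors 0.5 and 1.5 and the 50 steps are illustrative assumptions, and a real RNN multiplies Jacobian matrices rather than one scalar.

```python
def repeated_gradient_factor(per_step_factor: float, num_steps: int) -> float:
    """Multiply the same factor num_steps times.

    Scalar stand-in (an assumption for illustration) for the chain of
    derivatives that backpropagation multiplies across time steps.
    """
    gradient = 1.0
    for _ in range(num_steps):
        gradient *= per_step_factor
    return gradient


# Hypothetical per-step factors: below 1 shrinks, above 1 grows.
vanishing = repeated_gradient_factor(0.5, 50)
exploding = repeated_gradient_factor(1.5, 50)

print(f"factor 0.5 over 50 steps: {vanishing:.3e}")
print(f"factor 1.5 over 50 steps: {exploding:.3e}")
```

A factor below 1 drives the product toward zero, while a factor above 1 makes it grow without bound, which is the scalar version of the two problems described above.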

-

Recurrent Neural Network.

Table Of Contents: What Are Recurrent Neural Networks? The Architecture of a Traditional RNN. Types Of Recurrent Neural Networks. How Do Recurrent Neural Networks Work? Common Activation Functions. Advantages And Disadvantages of RNN. Recurrent Neural Network Vs Feedforward Neural Network. Backpropagation Through Time (BPTT). Two Issues Of Standard RNNs. RNN Applications. Sequence Generation. Sequence Classification.

(1) What Is A Recurrent Neural Network? A Recurrent Neural Network (RNN) is a type of neural network in which the output from the previous step is fed as input to the current step. In traditional neural networks, all the inputs and outputs are independent of each other.
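
The idea of feeding the previous step's output into the current step can be sketched with a single scalar hidden state. The weights `w_x`, `w_h`, the bias `b`, and the `tanh` activation are illustrative assumptions, not values taken from the post.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit recurrent cell over a sequence of numbers.

    w_x, w_h and b are hypothetical fixed weights chosen for illustration.
    """
    hidden = 0.0  # initial hidden state
    states = []
    for x in inputs:
        # The previous hidden state is fed back in alongside the new input.
        hidden = math.tanh(w_x * x + w_h * hidden + b)
        states.append(hidden)
    return states


states = rnn_forward([1.0, 1.0, 1.0])
print(states)
```

Although every input is the same, each hidden state differs from the one before it, because each step also depends on what came earlier in the sequence.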

-

Why ANN Can’t Be Used In Sequential Data?

Table Of Contents: Input Text Having Varying Size. Zero Padding – Unnecessary Computation. Prediction Problem On Different Input Length. Not Considering Sequential Information.

Reason 1: Input Text Having Varying Size. In real life, input sentences have different word counts. Suppose you build an ANN with the structure below. It has 3 input nodes. Our first sentence contains 6 words, hence the weight matrix will have a 6 × 3 structure. The second sentence contains 3 words, hence the weight matrix will have a 3 × 3 structure. The third sentence contains 4 words hence the weight
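
The shape mismatch described above, and the zero-padding workaround named in the table of contents, can be sketched as follows. The token ids and the helper names `required_weight_shape` and `zero_pad` are hypothetical and used only for illustration.

```python
def required_weight_shape(num_words, num_nodes=3):
    """Weight matrix shape needed for a sentence of num_words words."""
    return (num_words, num_nodes)


def zero_pad(tokens, target_length, pad_token=0):
    """Append pad_token until the sentence reaches target_length."""
    return tokens + [pad_token] * (target_length - len(tokens))


# Hypothetical token ids for sentences of 6, 3 and 4 words.
sentences = [
    [11, 12, 13, 14, 15, 16],
    [21, 22, 23],
    [31, 32, 33, 34],
]

# Each sentence length demands a different weight matrix shape.
shapes = [required_weight_shape(len(s)) for s in sentences]

# Padding to the longest sentence gives one fixed shape, at the cost of
# extra positions that carry no information.
max_length = max(len(s) for s in sentences)
padded = [zero_pad(s, max_length) for s in sentences]
wasted_positions = sum(max_length - len(s) for s in sentences)

print(shapes)
print(padded)
print(wasted_positions)
```

Padding fixes the input size, but the network still computes on the padded positions, which is the unnecessary computation the table of contents refers to.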

-

Sequential Data

Table Of Contents: What Is Sequential Data? Key Characteristics Of Sequential Data. Examples Of Sequential Data. Why Is Sequential Data An Issue For The Traditional Neural Network?

(1) What Is Sequential Data? Sequential data is data arranged in sequences where order matters. Data points are dependent on other data points in the sequence. Examples of sequential data include customer grocery purchases, patient medical records, or simply a sequence of numbers with a pattern. In sequence data, each element in the sequence is related to the ones that come before and after it, and the way these

-

Gradient Descent Optimizer

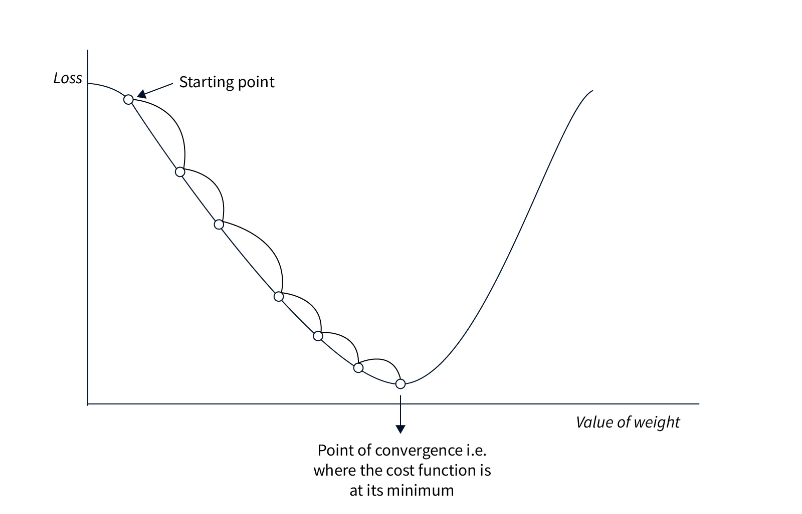

Table Of Contents: What Is Gradient Descent Algorithm? Basic Gradient Descent Algorithms: Batch Gradient Descent; Stochastic Gradient Descent (SGD); Mini-Batch Gradient Descent. Learning Rate: What Is The Learning Rate?; Learning Rate Scheduling. Variants of Gradient Descent: Momentum; Nesterov Accelerated Gradient (NAG); Adagrad; RMSprop; Adam. Optimization Challenges: Local Optima; Plateaus and Vanishing Gradients; Overfitting.

(1) What Is Gradient Descent Algorithm? The Gradient Descent algorithm is an iterative optimization algorithm used to minimize a given function, typically a loss function, by adjusting the parameters of a model. It is widely used in machine learning and deep learning to update
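
A minimal sketch of the iterative update, assuming a one-parameter loss `(x - 3)^2` chosen purely for illustration. The learning rate of 0.1 and the 100 steps are likewise assumptions.

```python
def gradient_descent(grad_fn, start, learning_rate=0.1, num_steps=100):
    """Repeatedly step against the gradient to reduce the loss."""
    x = start
    for _ in range(num_steps):
        x -= learning_rate * grad_fn(x)
    return x


def loss(x):
    # Hypothetical loss with its minimum at x = 3.
    return (x - 3.0) ** 2


def loss_grad(x):
    # Derivative of the loss above with respect to x.
    return 2.0 * (x - 3.0)


minimum = gradient_descent(loss_grad, start=0.0)
print(minimum, loss(minimum))
```

Each step moves the parameter a fraction of the way toward the minimum, and the learning rate controls how large that fraction is.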

-

Cost Functions In Deep Learning.

Table Of Contents: What Is A Cost Function? Mean Squared Error (MSE) Loss. Binary Cross-Entropy Loss. Categorical Cross-Entropy Loss. Custom Loss Functions. Loss Functions for Specific Tasks. Loss Functions for Imbalanced Data. Loss Function Selection.

(1) What Is A Cost Function? A cost function, also known as a loss function or objective function, is a mathematical function used to measure the discrepancy between the predicted output of a machine learning model and the actual or target output. The purpose of a cost function is to quantify how well the model is performing and to guide the optimization process
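
As one concrete instance of measuring the discrepancy between predictions and targets, here is a sketch of the mean squared error listed in the table of contents. The sample values are made up for illustration.

```python
def mean_squared_error(targets, predictions):
    """Average of the squared differences between targets and predictions."""
    if len(targets) != len(predictions):
        raise ValueError("targets and predictions must have the same length")
    squared_errors = [(t - p) ** 2 for t, p in zip(targets, predictions)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical targets and model outputs.
targets = [1.0, 2.0, 3.0]
predictions = [1.0, 2.0, 5.0]

mse = mean_squared_error(targets, predictions)
print(mse)
```

A perfect prediction gives a cost of zero, and the cost grows with the square of each error, so large mistakes are penalized more heavily than small ones.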

-

Optimization Algorithms In Deep Learning.

Table Of Contents: What Is An Optimization Algorithm? Gradient Descent Variants: Batch Gradient Descent (BGD); Stochastic Gradient Descent (SGD); Mini-Batch Gradient Descent; Convergence analysis and trade-offs; Learning rate selection and scheduling. Adaptive Learning Rate Methods: AdaGrad; RMSProp; Adam (Adaptive Moment Estimation); Comparisons and performance analysis; Hyperparameter tuning for adaptive learning rate methods. Momentum-Based Methods: Momentum; Nesterov Accelerated Gradient (NAG); Advantages and limitations of momentum methods; Momentum variants and improvements. Second-Order Methods: Newton’s Method; Quasi-Newton Methods (e.g., BFGS, L-BFGS); Hessian matrix and its computation; Pros and cons of second-order methods in deep learning. Optimization Challenges and

-

Feedforward Neural Networks

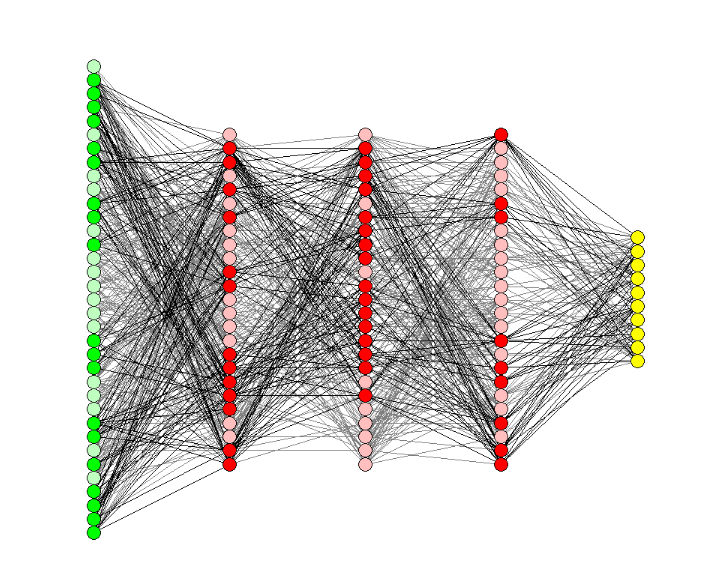

Table Of Contents: What Is Feedforward Neural Network? Why Are Neural Networks Used? Neural Network Architecture And Operation. Components Of Feedforward Neural Network. How Does A Feedforward Neural Network Work? Why Does This Strategy Work? Importance Of Non-Linearity. Applications Of Feed Forward Neural Network.

(1) What Is Feedforward Neural Network? The feed-forward model is the simplest type of neural network because the input is processed in only one direction. The data always flows forward and never backwards, regardless of how many hidden nodes it passes through. A feedforward neural network, also known as a multilayer perceptron (MLP), is a

-

Softmax Activation Function

Table Of Contents: What Is SoftMax Activation Function? Formula & Diagram For SoftMax Activation Function. Why Is SoftMax Function Important? When To Use SoftMax Function? Advantages & Disadvantages Of SoftMax Activation Function.

(1) What Is SoftMax Activation Function? The Softmax Function is an activation function used in machine learning and deep learning, particularly in multi-class classification problems. Its primary role is to transform a vector of arbitrary values into a vector of probabilities. The sum of these probabilities is one, which makes it handy when the output needs to be a probability distribution.

(2) Formula & Diagram
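
The transformation from arbitrary values to probabilities can be sketched in plain Python. Subtracting the maximum before exponentiating is a common numerical-stability step added here as an assumption, not something stated in the excerpt, and the input scores are made up.

```python
import math


def softmax(values):
    """Map a list of real numbers to probabilities that sum to one."""
    shift = max(values)  # subtracting the max avoids overflow in exp
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))
```

Larger inputs receive larger probabilities, and the outputs always sum to one, so they can be read as a distribution over classes.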

-

ReLU vs. Leaky ReLU vs. Parametric ReLU.

Table Of Contents: Comparison Between ReLU vs. Leaky ReLU vs. Parametric ReLU. Which One To Use In Which Situation?

(1) Comparison Between ReLU vs. Leaky ReLU vs. Parametric ReLU: ReLU, Leaky ReLU, and Parametric ReLU (PReLU) are all popular activation functions used in deep neural networks. Let’s compare them based on their characteristics.

Rectified Linear Unit (ReLU). Activation Function: f(x) = max(0, x). Advantages: Simplicity: ReLU is a simple and computationally efficient activation function. Sparsity: ReLU promotes sparsity by setting negative values to zero, which can be beneficial in reducing model complexity. Disadvantages:
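
The three functions can be compared side by side in a short sketch. The ReLU formula is the one given above; the Leaky ReLU slope of 0.01 is a commonly used default assumed here, and the PReLU `alpha` value is a hypothetical stand-in for a parameter that would normally be learned during training.

```python
def relu(x):
    """f(x) = max(0, x): negative inputs are set to zero."""
    return max(0.0, x)


def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but negative inputs keep a small fixed slope."""
    return x if x > 0 else negative_slope * x


def prelu(x, alpha):
    """Like Leaky ReLU, but alpha is a learnable parameter.

    Here alpha is passed in by hand (an assumption for illustration).
    """
    return x if x > 0 else alpha * x


for x in (-2.0, 0.0, 3.0):
    print(x, relu(x), leaky_relu(x), prelu(x, alpha=0.25))
```

All three agree on positive inputs and differ only in how they treat negative ones: ReLU outputs zero, while the other two let a scaled-down signal through.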