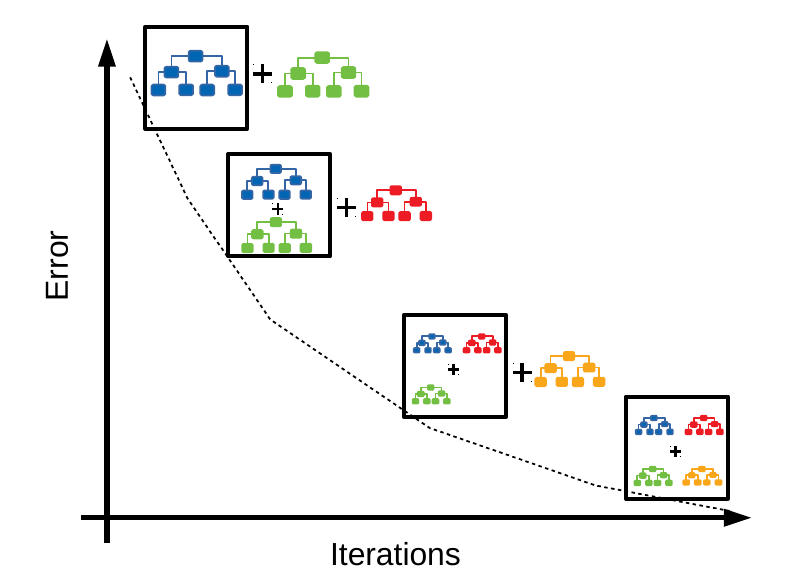

Gradient Boosting – Classification Algorithm

Table Of Contents:

- Example Of Gradient Boosting Classification.

Problem Statement:

- Predict whether a student will get a placement (is_placed = 1) or not (is_placed = 0) from their CGPA and IQ.

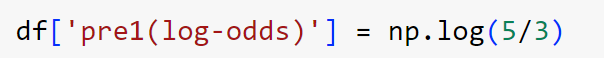

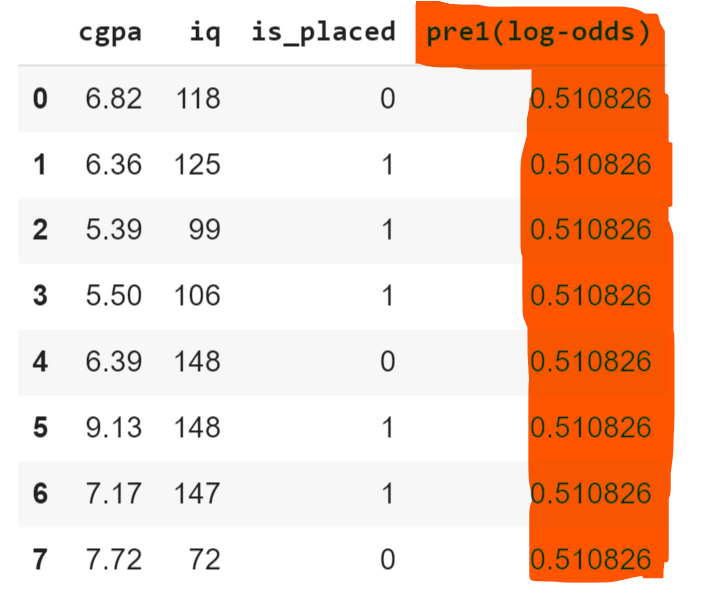

Step-1: Build First Model – Calculate Log Of Odds.

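- In boosting, the first model is deliberately simple: it makes the same prediction for every record.

- In the regression case, we used the mean of the target as the first model.

- For classification, we cannot simply keep adding the models' outputs as probabilities, because their sum could fall outside the range 0 to 1.

- Hence the first model predicts Log(odds), which can take any real value, and every later model adds its correction on the same log(odds) scale.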

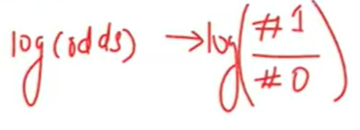

Log(Odds)

- The odds of an event happening are defined as the ratio of the probability of the event occurring to the probability of it not occurring.

- Odds = Probability of Event / Probability of Not Event

- Log Odds = log(Odds) = log(Probability of Event / Probability of Not Event), where log is the natural logarithm.

Calculating Log(Odds) Value:

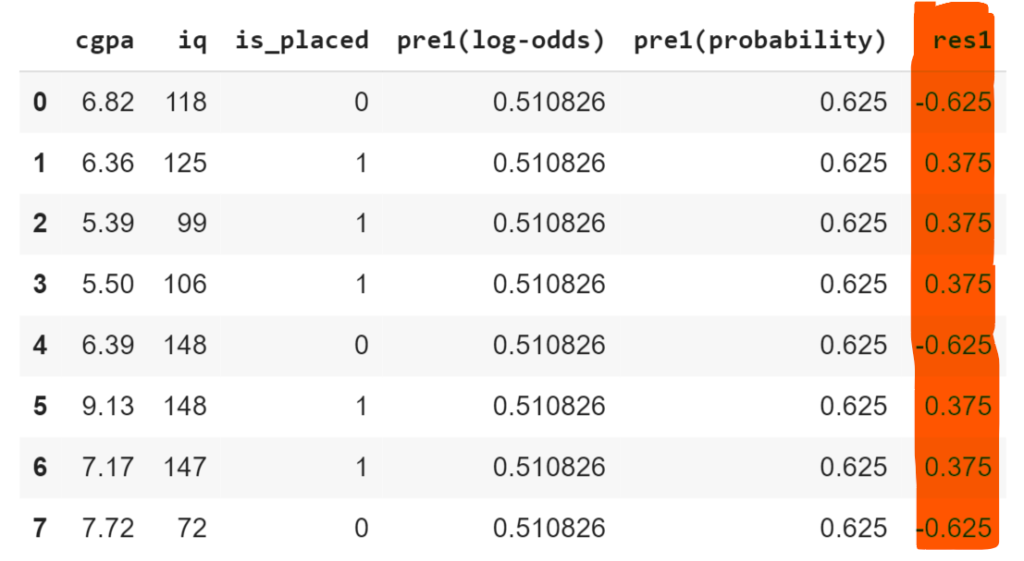

- Here we have 8 records: five 1s and three 0s.

- Hence Log of Odds = log((5/8) / (3/8)) = log(5/3) ≈ 0.51.

- This value, 0.51, is the initial prediction (the output of Model-1) for all the records.
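
A minimal Python sketch of Step-1, assuming the natural logarithm (which is what gives 0.51). Only the label counts from the example are used, and the function name initial_log_odds is our own, not from any library.

```python
import math

def initial_log_odds(labels):
    """Model-1: one constant log(odds) of the positive class for every record."""
    positives = sum(labels)              # number of 1s
    negatives = len(labels) - positives  # number of 0s
    # (positives / n) / (negatives / n) simplifies to positives / negatives
    return math.log(positives / negatives)

# Only the counts matter here: five 1s and three 0s, as in the example.
labels = [1, 1, 1, 1, 1, 0, 0, 0]
f0 = initial_log_odds(labels)
print(round(f0, 4))  # 0.5108
```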

Step-2: Convert Log Of Odds To Probability.

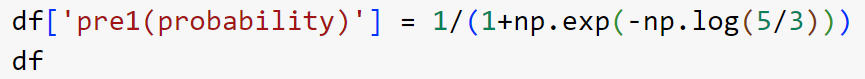

- Our output column (is_placed) holds 0/1 labels, which can be read as probabilities, so we also need to convert our log-odds column to a probability before comparing the two.

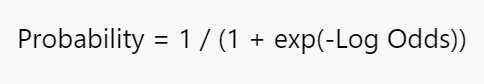

- We use the following formula for the conversion: Probability = 1 / (1 + exp(-Log(Odds))).

- Here we get a probability of 0.625 (= 5/8) for all the records.

- In other words, our first model predicts a probability of 0.625 for every record, irrespective of its ‘cgpa’ and ‘iq’ values.

- Because this prediction ignores the inputs entirely, it is a poor model, and the following steps improve on it.
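
The conversion can be sketched in Python as follows (the helper name sigmoid is our own). Note that the sigmoid of the initial log(odds) is exactly the fraction of 1s in the data, 5/8.

```python
import math

def sigmoid(log_odds):
    """Convert a log(odds) value back to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))

p0 = sigmoid(math.log(5 / 3))  # Model-1's log(odds) from Step-1
print(round(p0, 3))  # 0.625
```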

Note:

- As this is a classification problem, our output should be ‘1’ or ‘0’.

- Hence we will keep a threshold value above which output will be ‘1’ and below which output will be ‘0’.

- Let’s keep the threshold at 0.5.
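
Applying the 0.5 threshold to Model-1 shows why it is a weak starting point: every record gets the same probability, so every record gets the same label. This is a small sketch; to_label is a hypothetical helper, and treating a probability of exactly 0.5 as ‘1’ is our assumption, since the text does not say.

```python
def to_label(probability, threshold=0.5):
    """Map a probability to a class label (assumption: exactly 0.5 maps to 1)."""
    return 1 if probability >= threshold else 0

labels = [1, 1, 1, 1, 1, 0, 0, 0]  # five 1s, three 0s
model1_prob = 0.625                 # same probability for every record
predictions = [to_label(model1_prob) for _ in labels]
accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
print(predictions, accuracy)  # [1, 1, 1, 1, 1, 1, 1, 1] 0.625
```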

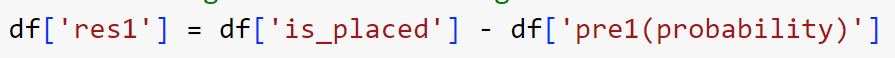

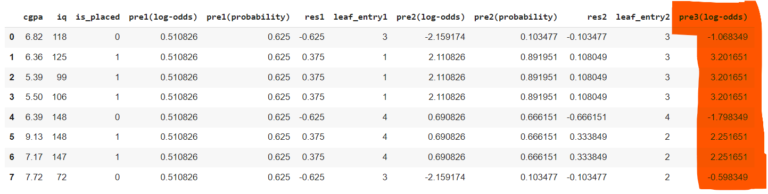

Step-3: Calculate Error Made By Model-1

- We use simple subtraction to calculate the error (residual).

- We subtract the predicted probability from the actual value.

- Error = is_placed – pre1(probability)
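
The residuals of Model-1 for the example's label counts can be sketched as below (the record order is assumed; the text only gives the counts). For log loss, y - p is the negative gradient with respect to the log(odds), which is why the error is computed as actual minus predicted.

```python
labels = [1, 1, 1, 1, 1, 0, 0, 0]  # five 1s, three 0s (order assumed)
pre1_prob = 0.625                   # Model-1's probability for every record

# Error (residual) = actual - predicted
res1 = [y - pre1_prob for y in labels]
print(res1)  # [0.375, 0.375, 0.375, 0.375, 0.375, -0.625, -0.625, -0.625]
```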

Step-4: Build Second Model

- In boosting, we add models one after another, and each new model is trained to correct the errors made by the models before it.

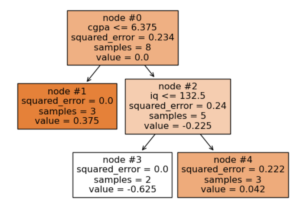

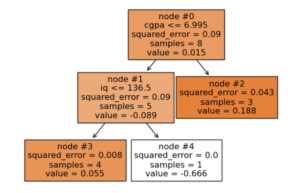

- Here our second model will be a decision tree for regression.

- The decision tree is a weak learner: here it is limited to a maximum of 3 leaf nodes.

- Input for the decision tree will be the ‘cgpa’ and ‘iq’ values.

- But the target variable will be the error made by the model-1.

- The tree is trained on residuals (actual minus predicted probability), so its raw leaf outputs are on the probability scale.

- But the output of Model-1 is a log of odds.

- We cannot add a log of odds and a probability together.

- Hence we need to convert the tree's outputs to a log of odds.
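
A minimal pure-Python sketch of Model-2: a regression tree limited to 3 leaves, grown best-first by choosing the split with the largest drop in squared error, with the Step-3 residuals as the target. In practice one would more likely use a library tree such as scikit-learn's DecisionTreeRegressor(max_leaf_nodes=3); whether the original example used it is an assumption. The (cgpa, iq) values below are hypothetical, because the real data only appears in the images, and all helper names are our own. The function returns which records land in each leaf, which is what Step-5 needs.

```python
def sse(values):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split(rows, targets, idx):
    """Best (gain, feature, threshold, left, right) split of the records in idx, or None."""
    parent = sse([targets[i] for i in idx])
    best = None
    for f in range(len(rows[0])):
        values = sorted({rows[i][f] for i in idx})
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2  # midpoint between neighbouring values
            left = [i for i in idx if rows[i][f] <= thr]
            right = [i for i in idx if rows[i][f] > thr]
            gain = parent - sse([targets[i] for i in left]) - sse([targets[i] for i in right])
            if best is None or gain > best[0]:
                best = (gain, f, thr, left, right)
    return best

def fit_leaves(rows, targets, max_leaves=3):
    """Grow a best-first regression tree; return the record indices in each leaf."""
    leaves = [list(range(len(rows)))]
    while len(leaves) < max_leaves:
        # Find the best split inside every current leaf and keep the largest gain.
        options = []
        for k, leaf in enumerate(leaves):
            split = best_split(rows, targets, leaf)
            if split is not None:
                options.append((split[0], k, split))
        if not options:
            break  # no leaf can be split any further
        _, k, split = max(options, key=lambda o: o[0])
        leaves[k:k + 1] = [split[3], split[4]]  # replace leaf k by its two children
    return leaves

# Hypothetical (cgpa, iq) values; the article's real data is only in its images.
rows = [(6.8, 90), (8.1, 110), (7.5, 105), (5.9, 85),
        (8.6, 120), (6.2, 95), (9.0, 115), (7.0, 100)]
labels = [0, 1, 1, 0, 1, 1, 1, 0]   # five 1s, three 0s
res1 = [y - 0.625 for y in labels]  # Step-3 residuals are the targets
for leaf in fit_leaves(rows, res1):
    print(leaf, [res1[i] for i in leaf])
```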

Step-5: Convert Probability To Log Of Odds.

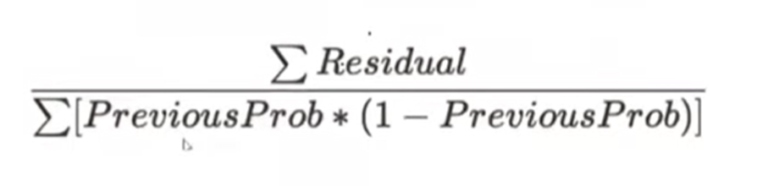

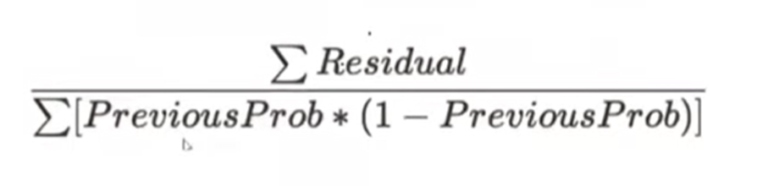

- We will use the below formula to do this operation.

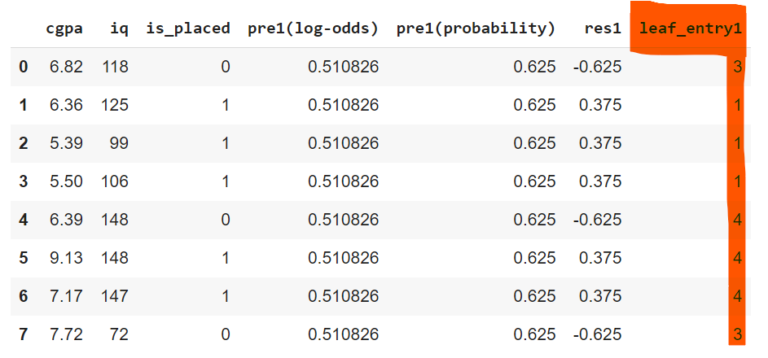

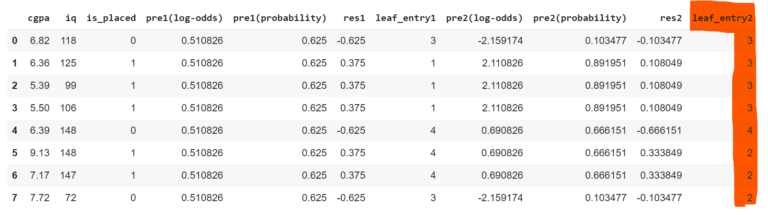

- First, we need to identify which leaf node each record falls into.

- We will create a new column with the leaf node number in it.

- Let us calculate the Log of odds value for Leaf node 3.

- The Numerator = Sum Of Residuals.

- Two records fall in leaf node 3, and each has a residual of -0.625.

- Hence Sum Of Residuals = -0.625 + (-0.625) = -1.25

- Let’s calculate the denominator part.

- Denominator = Sum Of (Previous Prob * (1 – Previous Prob))

- Denominator = 0.625 * (1 – 0.625) + 0.625 * (1 – 0.625) = 0.234375 + 0.234375 = 0.46875

- Log Odds = -1.25 / 0.46875 ≈ -2.667, which the following steps truncate to -2.66.

- Like this we will calculate the Log of odds of all the leaf nodes.
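
The leaf formula as a Python sketch (leaf_log_odds is a hypothetical helper). This is the usual one-step Newton approximation of the best log(odds) value for a leaf under log loss; the check below reproduces the leaf node 3 calculation.

```python
def leaf_log_odds(residuals, prev_probs):
    """Leaf output in log(odds): sum of residuals / sum of p * (1 - p)."""
    numerator = sum(residuals)
    denominator = sum(p * (1 - p) for p in prev_probs)
    return numerator / denominator

# Leaf node 3 of Model-2: two records, each with residual -0.625
# and previous probability 0.625.
gamma_leaf3 = leaf_log_odds([-0.625, -0.625], [0.625, 0.625])
print(round(gamma_leaf3, 4))  # -2.6667
```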

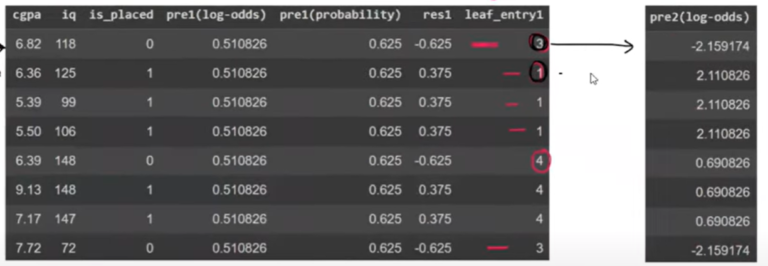

Step-6: Combine Model 1 and Model 2 Output.

- We need to combine the Model-1 and Model-2 outputs to get the combined output that will be passed to the next model.

- Model-1 output is 0.51 and we have calculated the output for all the leaf nodes from model-2.

- Let us combine both to get the final output.

- Here the final output will be in terms of a log of odds.

- For Row-1, Combined Output = Model-1 Output + Model-2 Output.

- Row-1 falls in leaf node 3, so its Model-2 output is -2.66.

- Output = 0.51 + (-2.66) ≈ -2.159

- Like this, we have to calculate for all the rows.
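
Step-6 for Row-1 as a small sketch with unrounded inputs. With full precision the result is about -2.156; the small gap to -2.159 presumably comes from how the intermediate values were rounded.

```python
import math

f0 = math.log(5 / 3)              # Model-1 output (log of odds), about 0.51
gamma_leaf3 = -1.25 / 0.46875     # Model-2 output for leaf node 3, about -2.667
pre2_log_odds = f0 + gamma_leaf3  # Row-1 falls in leaf node 3
print(round(pre2_log_odds, 3))    # -2.156
```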

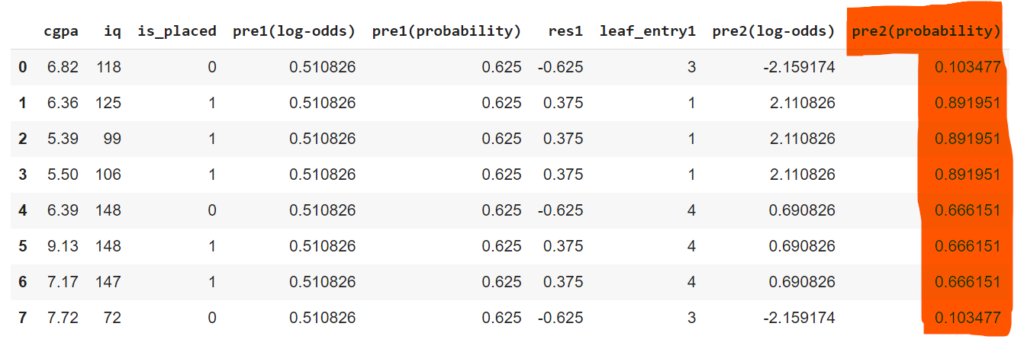

Step-7: Convert Log Of Odds To Probability

- Again, we need to convert the log of odds to a probability value to calculate the residuals.

- We use the same formula as before: Probability = 1 / (1 + exp(-Log(Odds))).

- If we had only these two models, we could use these probability values to make our final prediction.

- With a cutoff of 0.5, a probability above it means ‘Yes’ (placed) and a probability below it means ‘No’.

- For the first record, the probability is 0.103477, which is below the cutoff, so the prediction is ‘No’.
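
Step-7 for the first record, sketched in Python (sigmoid is the same hypothetical helper as before, redefined so the snippet runs on its own):

```python
import math

def sigmoid(log_odds):
    """Convert a log(odds) value back to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))

pre2_prob = sigmoid(-2.159)           # Row-1's combined log of odds from Step-6
label = 1 if pre2_prob >= 0.5 else 0  # cutoff of 0.5
print(round(pre2_prob, 4), label)     # 0.1035 0
```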

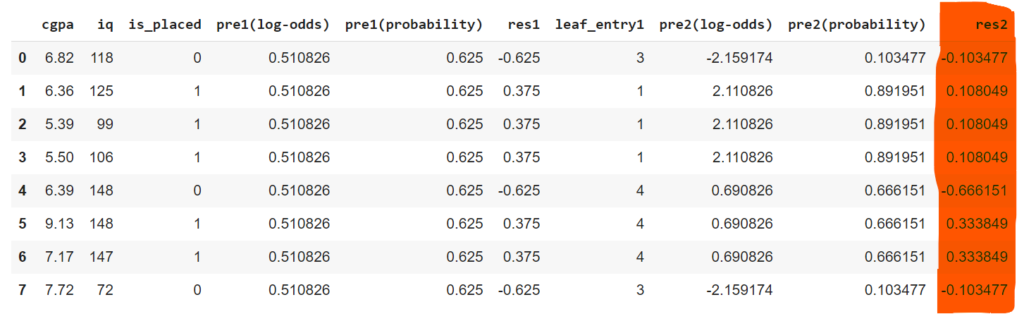

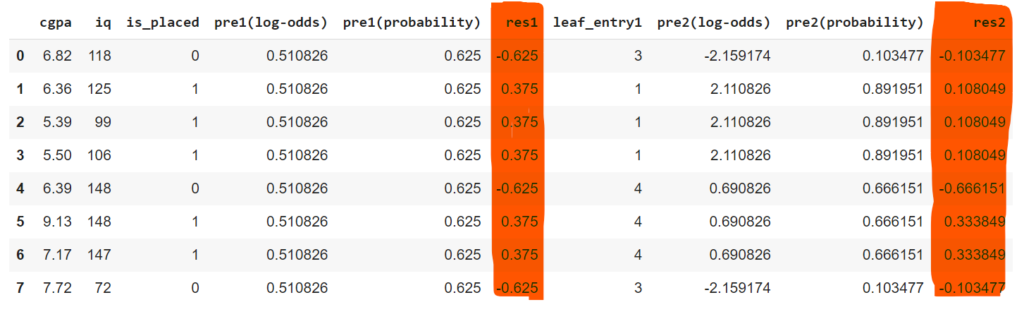

Step-8: Calculate Error Made By The Model-2

- To calculate the error, we subtract the predicted value from the actual value.

- Error = Actual – Predicted

- Error = is_placed – pre2(probability)

Learning Rate:

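- If you compare the Model-1 and Model-2 residuals, you can see a large jump: for the first record, the residual goes from -0.625 to -0.103 in a single step.

- Such large steps make each tree correct the previous errors very aggressively, which tends to overfit the training data. To make the steps smaller, you can introduce a learning rate.

- Multiply the log of odds output of each tree (Model-2 onwards) by the learning rate before adding it; the initial log of odds from Model-1 is not scaled.

- Learning Rate = 0.1

- Example: for a Model-2 output of 1.6, the combined log of odds is 0.51 + 0.1 × 1.6 = 0.51 + 0.16 = 0.67.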
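
A sketch of how the learning rate enters the update, assuming (as in the example above) that only the tree outputs are scaled and the initial log of odds is not. boosted_log_odds is a hypothetical helper. The second call shows Row-1 with Model-2's leaf value of -2.66: with a learning rate of 0.1, its log of odds only moves from 0.51 to 0.244 instead of to -2.15.

```python
def boosted_log_odds(initial, tree_outputs, learning_rate=0.1):
    """Initial log(odds) plus each tree's log(odds) output scaled by the learning rate."""
    return initial + sum(learning_rate * out for out in tree_outputs)

print(round(boosted_log_odds(0.51, [1.6]), 2))    # 0.67, the example above
print(round(boosted_log_odds(0.51, [-2.66]), 3))  # 0.244 instead of -2.15
```

The trade-off of a smaller learning rate is that more trees are needed to reach the same fit on the training data.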

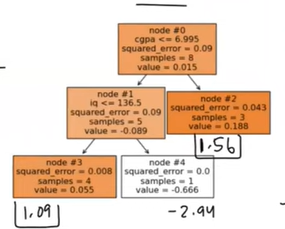

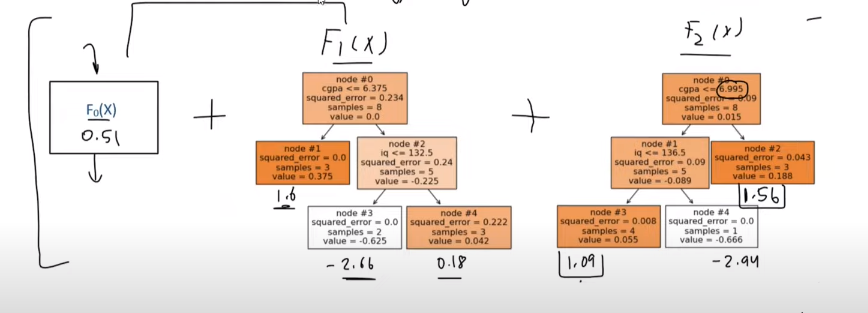

Step-9: Build Third Model

- Here our third model will be a decision tree for regression.

- The decision tree is a weak learner: here it is limited to a maximum of 3 leaf nodes.

- Input for the decision tree will be the ‘cgpa’ and ‘iq’ values.

- But the target variable will be the error made by the model-2(res2).

Step-10: Calculate Log Of Odds For 3rd Model

- The tree's leaf outputs are residuals, so they are on the probability scale.

- Hence we need to convert them to a log of odds for each leaf node.

- First, we need to find which leaf node each record falls into.

- Then we calculate each leaf's log of odds with the same formula as in Step-5: Log Odds = Sum Of Residuals / Sum Of (Previous Prob * (1 – Previous Prob)), where the residuals are now res2 and the previous probabilities are pre2(probability).

- Here you can see the Log of Odds for each leaf node.

Step-11: Calculate Combined Log Of Odds.

- Now we combine the log of odds of the 1st, 2nd and 3rd models to form a combined output.

- Model1 log of odds = 0.51

- Model1 + Model2 log of odds = pre2(log-odds)

- Model1 + Model2 + Model3 log of odds = pre2(log-odds) + Model3 log of odds

- For example, the 1st record falls in leaf node 3 of Model 3, whose log of odds is 1.09.

- Hence its combined log of odds = pre2(log-odds) + Model3 log of odds = -2.159 + 1.09 ≈ -1.07

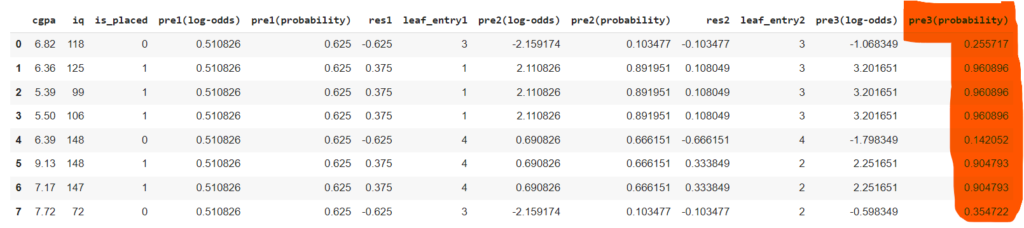

Step-12: Convert Log Of Odds To Probability.

- Our final output must be a probability, so we convert the combined log of odds to a probability.

- We use the same formula as before: Probability = 1 / (1 + exp(-Log(Odds))).

- If you compare the is_placed and pre3(probability) columns, you can see that the predicted probabilities are closer to the actual values.

Step-13: Make Prediction For New Point

- New student: CGPA = 7.2, IQ = 100.

- Will this student get a placement?

- We use the three models built above to predict the output.

- First, we pass the input point through Model-1, Model-2 and Model-3.

- Then we take the log of odds from each model and add them together.

- Finally, we convert the total log of odds to a probability to make the prediction.

- Model 1 always gives 0.51 as its log of odds output.

- Passing the input to Model 2 gives a log of odds of -2.66.

- Passing the input to Model 3 gives a log of odds of 1.56.

- Model1 + Model2 + Model3 log of odds = 0.51 + (-2.66) + 1.56 = -0.59

- Probability = 1 / (1 + exp(-(-0.59))) = 1 / (1 + exp(0.59)) ≈ 0.357

- This is below the threshold value of 0.5.

- Hence the output is 0 (No Placement).
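
The whole prediction for the new point, as a sketch that takes the three models' log of odds outputs from the text as given (it does not rebuild the trees, since their split values are only in the images). predict is a hypothetical helper.

```python
import math

def predict(model_log_odds, threshold=0.5):
    """Sum the models' log(odds) outputs, convert to a probability, apply the cutoff."""
    total = sum(model_log_odds)
    probability = 1.0 / (1.0 + math.exp(-total))
    return total, probability, int(probability >= threshold)

# Outputs of Model 1, 2 and 3 for the new point (cgpa = 7.2, iq = 100), from the text.
total, probability, label = predict([0.51, -2.66, 1.56])
print(round(total, 2), round(probability, 3), label)  # -0.59 0.357 0
```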