Mean Of Error Term Should Be Zero

Table Of Contents:

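- What Is The Error Term In A Regression Model?

- Error Term Use In Formula.

- The Difference Between Error Terms and Residuals.

- Mean Of Error Term Should Be Zero.

- Example Of Mean Not Zero.

- Solution If Mean Is Not Zero.

- Why Should The Mean Of The Error Term Be Zero?

- How The Constant Term Makes The Error Mean Zero.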

(1) What Is The Error Term In A Regression Model?

An error term is a variable in a statistical or mathematical model that captures whatever the model leaves unexplained. It exists because the model does not fully represent the actual relationship between the independent variables and the dependent variable.

As a result of this incomplete relationship, the error term is the amount by which the value predicted by the equation may differ from the observed value during empirical analysis.

The error term is also known as the disturbance or remainder term (it is often loosely called the residual, although the two differ, as explained below), and is variously represented in models by the letters e, ε, or u.

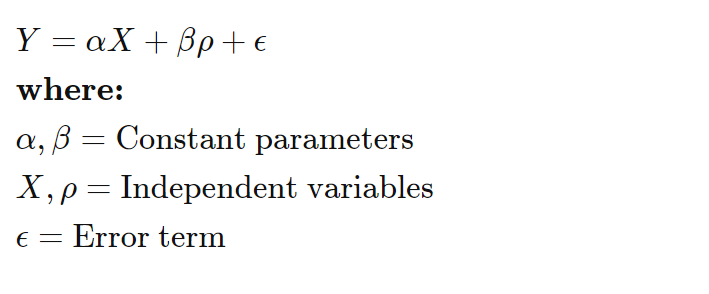

(2) Error Term Use In Formula.

- An error term essentially means that the model is not completely accurate, so its predictions differ from the actual results in real-world applications.

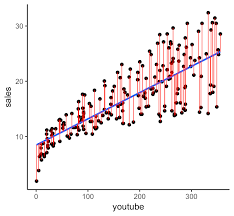

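- For example, assume there is a multiple linear regression function that takes the following form:

  Y = α + β₁X₁ + β₂X₂ + ... + βₖXₖ + ε

  where α is the constant, β₁ ... βₖ are the coefficients, X₁ ... Xₖ are the independent variables, and ε is the error term.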

- When the actual Y differs from the Y predicted by the model during an empirical test, the error term for that observation is not 0, which means other factors not included in the model also influence Y.
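To make this concrete, here is a minimal Python sketch. It assumes a hypothetical population model with made-up parameters (α = 2, β₁ = 0.5, β₂ = -1.5) and normally distributed disturbances. In real data the true parameters are unknown, which is exactly why the error term cannot be observed directly.

```python
import random

# Hypothetical population model (parameters invented for illustration):
#   Y = ALPHA + BETA1 * X1 + BETA2 * X2 + epsilon
ALPHA, BETA1, BETA2 = 2.0, 0.5, -1.5

random.seed(0)
observations = []  # tuples of (x1, x2, y, true_epsilon)
for _ in range(5):
    x1 = random.uniform(0, 10)
    x2 = random.uniform(0, 10)
    epsilon = random.gauss(0, 1)  # influence of factors the model leaves out
    y = ALPHA + BETA1 * x1 + BETA2 * x2 + epsilon
    observations.append((x1, x2, y, epsilon))

errors = []
for x1, x2, y, _ in observations:
    predicted = ALPHA + BETA1 * x1 + BETA2 * x2  # the Y the model expects
    error = y - predicted                         # actual Y minus predicted Y
    errors.append(error)
    print(f"actual={y:8.3f}  predicted={predicted:8.3f}  error={error:+.3f}")
```

Every printed error is non-zero, and each one matches the ε drawn for that observation: the gap between actual and predicted Y is precisely the influence of the "other factors".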

(3) The Difference Between Error Terms and Residuals.

- Although the error term and residual are often used synonymously, there is an important formal difference.

- An error term is generally unobservable and a residual is observable and calculable, making it much easier to quantify and visualize.

- In effect, an error term is the difference between an observed value and the true (unobservable) population regression line, whereas a residual is the difference between an observed value and the fitted value from the regression estimated on the sample.
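A small simulation makes the distinction visible. The sketch below, in plain Python with no third-party libraries, assumes a hypothetical population line y = 3 + 2x plus normal noise. Because the data are generated here, the true errors can be computed (impossible with real data) and compared with the residuals from a least squares line fitted to the same sample. The helper `fit_line` is written just for this example.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sxy / sxx
    a = y_bar - b * x_bar
    return a, b

# Hypothetical population line; with real data these values are unknown.
TRUE_A, TRUE_B = 3.0, 2.0

random.seed(42)
xs = [random.uniform(0, 10) for _ in range(50)]
ys = [TRUE_A + TRUE_B * x + random.gauss(0, 1) for x in xs]

# Errors: distance from the (normally unobservable) population line.
errors = [y - (TRUE_A + TRUE_B * x) for x, y in zip(xs, ys)]

# Residuals: distance from the line estimated on this sample.
a_hat, b_hat = fit_line(xs, ys)
residuals = [y - (a_hat + b_hat * x) for x, y in zip(xs, ys)]

print(f"estimated line: y = {a_hat:.3f} + {b_hat:.3f}x")
print(f"sum of errors:    {sum(errors):+.6f}")
print(f"sum of residuals: {sum(residuals):+.6f}")
```

The errors and residuals differ observation by observation. The residuals sum to zero (up to floating-point rounding) because the fitted line includes an intercept, while the true errors generally do not sum exactly to zero in any finite sample.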

(4) Mean Of Error Term Should Be Zero.

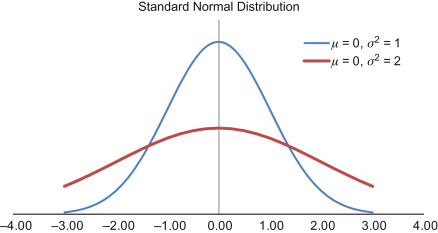

- For your model to be unbiased, the average value of the error term must equal zero.

- Well, the errors or disturbances are small (hopefully so!) random deviations from the estimated value that the model predicts.

- If the model's predictions were correct to the last decimal, that would be strange! So deviations are quite normal.

- However, while disturbances are expected, we do not expect these disturbances to be always positive (that would mean that the model always underestimates the actual value) or always negative (that would mean that our model always overestimates the actual value).

- This follows from the definition: Error = y(actual) – y(pred).

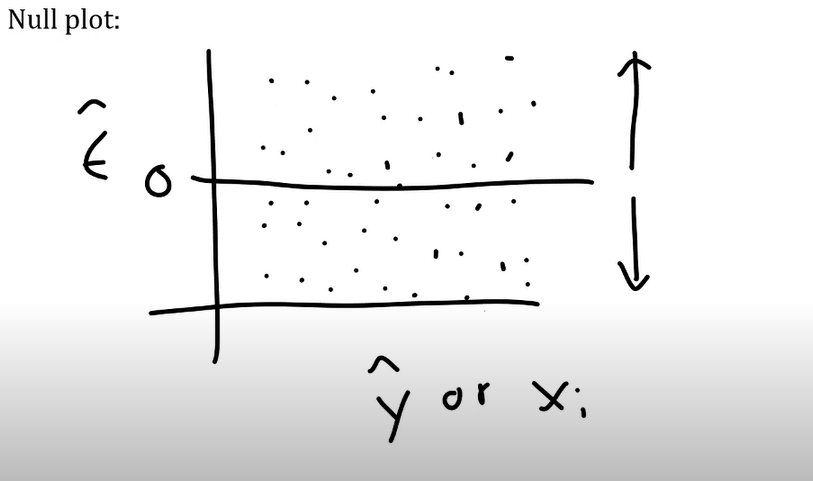

- Rather, the disturbances shouldn’t have any fixed pattern at all.

- In other words, we want our errors to be random — sometimes positive and sometimes negative.

- Roughly then, the sum of all these individual errors would cancel out and become equal to zero, thereby making the average (or expected value) of error terms equal to zero as well.
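A quick simulation illustrates the idea. The sketch assumes normally distributed disturbances with a standard deviation of 2 (an arbitrary choice) and contrasts them with a hypothetical model whose errors are always positive, i.e. a model that always underestimates.

```python
import random
import statistics

random.seed(7)
N = 10_000

# Well-behaved model: disturbances are random and centred on zero.
random_errors = [random.gauss(0, 2) for _ in range(N)]

# Badly specified model (hypothetical): it always underestimates,
# so every disturbance y(actual) - y(pred) is positive.
one_sided_errors = [abs(random.gauss(0, 2)) for _ in range(N)]

positives = sum(e > 0 for e in random_errors)
negatives = sum(e < 0 for e in random_errors)

print(f"random errors:    mean = {statistics.mean(random_errors):+.3f}, "
      f"{positives} positive / {negatives} negative")
print(f"one-sided errors: mean = {statistics.mean(one_sided_errors):+.3f}")
```

The positive and negative disturbances roughly cancel, so their mean lands near zero. The one-sided errors never cancel, and their mean stays clearly above zero, which is the signature of a model that systematically underestimates.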

(5) Example Of Mean Not Zero.

Suppose the average error is +7. This non-zero average error indicates that our model systematically underpredicts the observed values.

Statisticians refer to systematic error like this as bias, and it signifies that our model is inadequate because it is not correct on average.

Stated another way, we want the expected value of the error to equal zero. If the expected value is +7 rather than zero, part of the error term is predictable, and we should add that information to the regression model itself. We want only random errors left for the error term.

You don’t need to worry about this assumption when you include the constant in your regression model because it forces the mean of the residuals to equal zero.
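The +7 scenario can be reproduced with a small simulation. The sketch assumes hypothetical data whose true relationship is y = 7 + 2x plus noise, and compares a model that leaves out the constant with a least squares fit that includes one (computed by hand so that no libraries are needed).

```python
import random

random.seed(1)
xs = [random.uniform(0, 10) for _ in range(1000)]
# Hypothetical data: the real relationship has a constant of 7.
ys = [7 + 2 * x + random.gauss(0, 1) for x in xs]

def mean(values):
    return sum(values) / len(values)

# Model A (hypothetical): predicts y_hat = 2x and has no constant term.
resid_no_const = [y - 2 * x for x, y in zip(xs, ys)]

# Model B: least squares line WITH a constant term.
x_bar, y_bar = mean(xs), mean(ys)
slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
         / sum((x - x_bar) ** 2 for x in xs))
intercept = y_bar - slope * x_bar
resid_with_const = [y - (intercept + slope * x) for x, y in zip(xs, ys)]

print(f"mean residual, no constant:   {mean(resid_no_const):+.3f}")  # close to +7
print(f"mean residual, with constant: {mean(resid_with_const):+.3f}")
print(f"fitted constant: {intercept:.3f}")
```

The model without a constant is off by about +7 on average, so it systematically underpredicts. The least squares fit absorbs that amount into its constant, and its residual mean is zero up to floating-point rounding.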

(6) Solution If Mean Is Not Zero.

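- If the mean of the error term is not zero, the constant term in the model absorbs that non-zero mean, leaving an error term whose mean is zero.

- Consider the model y = α + βx + ε.

- Here the constant term is (α).

- For example, take an equation whose constant is 10 and whose error term has a mean of 3 instead of 0:

- y = 10 + βx + ε, with mean(ε) = 3

- To bring the mean of the error term from 3 down to zero, add and subtract 3 on the right side of the equation.

- y = 10 + βx + 3 + (ε - 3)

- Now regroup the terms into the new equation:

- y = (10 + 3) + βx + (ε - 3)

- y = 13 + βx + u, where u = ε - 3 has a mean of 0

- Here the mean of the error term has come to zero because the constant has absorbed it.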

- When you run your regression model with a constant term, the mean of the residuals automatically comes to zero.

- Specifically, the least squares algorithm forces the sum of the residuals to equal zero.

- This holds for any least squares regression that includes an intercept term.
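The same bookkeeping happens automatically when the model is estimated. This sketch assumes the example's numbers (a constant of 10 and an error mean of 3), plus a hypothetical slope β = 2 and unit-variance normal noise, and then fits least squares with an intercept.

```python
import random

random.seed(3)
BETA = 2.0  # hypothetical slope
xs = [random.uniform(0, 10) for _ in range(5000)]
# Error term with mean 3 instead of 0, as in the example above.
ys = [10 + BETA * x + random.gauss(3, 1) for x in xs]

n = len(xs)
x_bar, y_bar = sum(xs) / n, sum(ys) / n
b_hat = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
         / sum((x - x_bar) ** 2 for x in xs))
a_hat = y_bar - b_hat * x_bar
residuals = [y - (a_hat + b_hat * x) for x, y in zip(xs, ys)]

print(f"estimated constant: {a_hat:.3f}")   # near 10 + 3 = 13
print(f"estimated slope:    {b_hat:.3f}")   # near the true 2.0
print(f"residual mean:      {sum(residuals) / n:+.2e}")
```

The fitted constant lands near 13 rather than 10, the slope is still estimated correctly, and the residuals average to zero: the constant has taken over the error's non-zero mean.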

(7) Why Should The Mean Of The Error Term Be Zero?

In linear regression, a zero mean for the error term (the unobservable disturbance, as distinct from the residual) is a key assumption of the model. Here’s why the mean of the error term should be zero:

Model Specification: When the mean of the error term is assumed to be zero, it implies that, on average, the predicted values from the regression model are equal to the true values of the dependent variable. In other words, the model is correctly specified and captures the average relationship between the independent variables and the dependent variable.

Unbiasedness: The assumption of a zero mean error term is closely related to the unbiasedness of the regression coefficients. When the error term has a mean of zero conditional on the independent variables, the ordinary least squares (OLS) estimates of the coefficients are unbiased estimators of the true population coefficients. Unbiasedness means that, on average, the estimated coefficients are equal to the true coefficients, even though they may vary from one sample to another.

Interpreting The Intercept: The intercept term in a linear regression model represents the expected value of the dependent variable when all the independent variables are zero. If the mean of the error term is zero, the intercept captures that true expected value. If it is not zero, the intercept also absorbs the error's mean, so it may not have a clear or meaningful interpretation.

Consistency Of Estimators: Assuming a zero mean error term is also important for the consistency of the estimated coefficients. Consistency means that as the sample size increases, the estimated coefficients converge to the true population coefficients. The assumption of a zero mean error term helps ensure that the estimated coefficients become increasingly accurate and converge to their true values as the sample size grows.

It’s worth noting that the assumption of a zero mean error term does not imply that the error term is identically zero for every observation. Rather, it means that the average of the error term over all observations is zero. This assumption allows for the correct specification, unbiasedness, and consistency of the regression model, facilitating valid inference and interpretation of the estimated coefficients.
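Unbiasedness is a statement about averages over repeated samples, so a Monte Carlo sketch is a natural way to see it. The sketch assumes a hypothetical population line y = 1 + 2x, draws 2,000 samples of 30 points each, and averages the least squares estimates, once with a zero-mean error and once with an error whose mean is 3. The helpers `ols` and `average_estimates` are written just for this illustration.

```python
import random

def ols(xs, ys):
    """Least squares estimates (intercept, slope) for y = a + b*x."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    return y_bar - slope * x_bar, slope

def average_estimates(error_mean, reps=2000, n=30, seed=0):
    """Average OLS estimates over many samples from y = 1 + 2x + e,
    where e ~ Normal(error_mean, 1). All values are hypothetical."""
    rng = random.Random(seed)
    total_a = total_b = 0.0
    for _ in range(reps):
        xs = [rng.uniform(0, 10) for _ in range(n)]
        ys = [1 + 2 * x + rng.gauss(error_mean, 1) for x in xs]
        a, b = ols(xs, ys)
        total_a += a
        total_b += b
    return total_a / reps, total_b / reps

print("E[e] = 0: intercept %.3f, slope %.3f" % average_estimates(0.0))
print("E[e] = 3: intercept %.3f, slope %.3f" % average_estimates(3.0))
```

With a zero-mean error, both averages sit on the true values (1 and 2). With an error mean of 3 the slope is still recovered, but the intercept averages about 4: it has absorbed the error mean, so it no longer describes the true expected value of y at x = 0.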

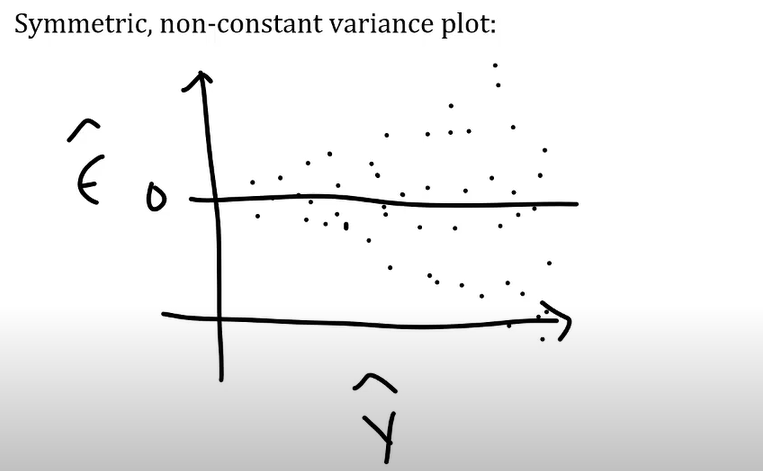

Zero Error Mean.

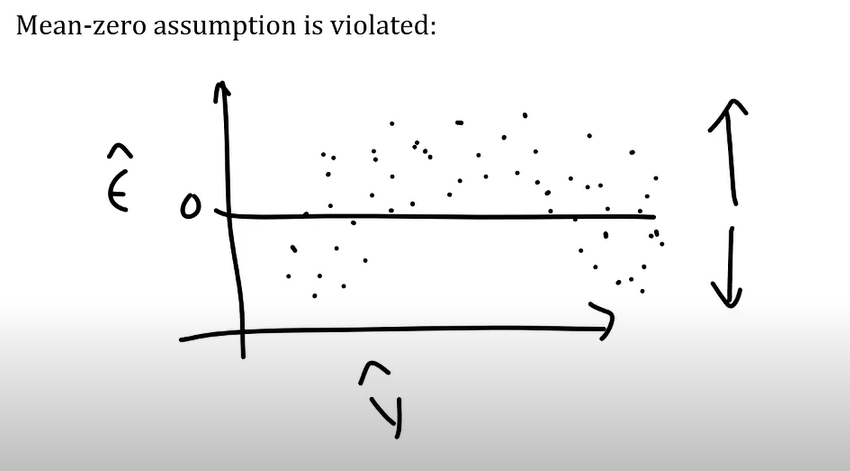

Non-Zero Error Mean.

(8) How The Constant Term Makes The Error Mean Zero.

- Part of what the estimated y-intercept captures is the effect of relevant variables that have been excluded from the regression model.

- When you leave relevant variables out, this can produce bias in the model. Bias exists if the residuals have an overall positive or negative mean.

- In other words, the model tends to make predictions that are systematically too high or too low.

- The constant term prevents this overall bias by forcing the residual mean to equal zero.

- Imagine that you can move the regression line up or down to the point where the residual mean equals zero.

- For example, if the regression produces residuals with a positive average, just move the line up until the mean equals zero.

- This process is how the constant ensures that the regression model satisfies the critical assumption that the residual average equals zero.

- However, this process does not focus on producing a y-intercept that is meaningful for your study area. Instead, it focuses entirely on providing that mean of zero.

- The constant ensures the residuals don’t have an overall bias, but that may make the value of the constant itself meaningless.
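The "move the line up" picture translates directly into code. This sketch assumes hypothetical data generated from y = 1 + 2x + 3z, where z is a relevant variable that the model leaves out, keeps the slope fixed at 2 for simplicity, and shifts the intercept by the mean residual. For a fixed slope, that shift gives exactly the intercept that minimizes the sum of squared residuals.

```python
import random

random.seed(5)
n = 2000
xs = [random.uniform(0, 10) for _ in range(n)]
zs = [random.uniform(0, 8) for _ in range(n)]  # relevant variable, mean 4
# Hypothetical truth: y = 1 + 2x + 3z + noise, but the model below ignores z.
ys = [1 + 2 * x + 3 * z + random.gauss(0, 1) for x, z in zip(xs, zs)]

def residual_mean(intercept, slope):
    """Mean of y - (intercept + slope * x) over the simulated data."""
    return sum(y - (intercept + slope * x) for x, y in zip(xs, ys)) / n

slope = 2.0       # keep the slope fixed for this illustration
intercept = 1.0   # start at the "true" constant
before = residual_mean(intercept, slope)  # positive: the line sits too low

intercept += before   # move the line up by the mean residual
after = residual_mean(intercept, slope)

print(f"residual mean before shift: {before:+.3f}")
print(f"new intercept: {intercept:.3f}, residual mean after shift: {after:+.2e}")
```

The residual mean starts near +12 (the average contribution 3 × 4 of the omitted z), and the shifted intercept ends near 13. The bias is gone, but the constant no longer equals the true value of 1, which is why its value may be meaningless.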

Super Note:

- In a linear regression equation, the beta values represent the population parameters, that is, the average effect of each independent variable on the dependent variable.

- Because the beta values are not zero, they appear in the equation.

- In the same way, the average value of the error distribution also shows up in the linear regression equation.

- If the error has a non-zero average value, that value effectively becomes part of the equation.

- That means you are saying your model will always be off by that average value.

- Hence we need the average of the error term to be zero, so that it does not add a systematic term to the equation.

- If you have a constant term in your equation, the error's average is added to that constant, and together they form a new constant, leaving an error term with a mean of zero.
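The argument in the note can be written as a short derivation. It assumes a simple model with one independent variable and writes μ for the non-zero mean of the error term; u is a name introduced here for the re-centred error.

```latex
\begin{aligned}
y &= \alpha + \beta x + \varepsilon, \qquad E[\varepsilon] = \mu \neq 0 \\
u &= \varepsilon - \mu \quad\Longrightarrow\quad E[u] = E[\varepsilon] - \mu = 0 \\
y &= \alpha + \beta x + \mu + (\varepsilon - \mu)
   = (\alpha + \mu) + \beta x + u
\end{aligned}
```

The slope β is untouched; only the constant changes, from α to α + μ, and what remains in the error term has a mean of zero.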