Multi Horizon Forecasting

Table Of Contents:

- What Is Multi Horizon Forecasting?

(1) What Is Multi Horizon Forecasting?

- Multi-horizon forecasting in time series refers to predicting future values of a time series over multiple future time steps.

- Instead of making a single-step forecast, where you predict the next value in the time series, multi-horizon forecasting involves predicting several future values at once, typically for a predefined range or sequence of future time steps.

- For example, in a daily sales forecasting scenario, a single-step forecast would predict tomorrow’s sales from the data available up to today.

- In contrast, a multi-horizon forecast might predict the sales for the next seven days, providing a forecast for each day in the upcoming week.
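As a hedged illustration of the difference, the sketch below slices a series into training pairs for both settings. The `make_windows` helper, the 14-day lookback, and the synthetic `daily_sales` array are assumptions made for this example and do not come from any particular library.

```python
import numpy as np

def make_windows(series, lookback, horizon):
    """Slice a 1-D series into (past window, future targets) pairs."""
    series = np.asarray(series, dtype=float)
    n = len(series) - lookback - horizon + 1
    if n <= 0:
        raise ValueError("series is too short for this lookback and horizon")
    # Each row of X holds `lookback` past values.
    X = np.stack([series[i : i + lookback] for i in range(n)])
    # Each row of Y holds the `horizon` values that follow that window.
    Y = np.stack([series[i + lookback : i + lookback + horizon] for i in range(n)])
    return X, Y

# Hypothetical data: 30 days of sales (just the day index, for readability).
daily_sales = np.arange(30)

# Single-step: one target per window (tomorrow's sales).
X1, Y1 = make_windows(daily_sales, lookback=14, horizon=1)
# Multi-horizon: seven targets per window (each day of the coming week).
X7, Y7 = make_windows(daily_sales, lookback=14, horizon=7)

print(Y1.shape, Y7.shape)
```

The only difference between the two settings here is the width of the target matrix: one column for single-step, one column per future day for multi-horizon.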

(2) Applications Of Multi Horizon Forecasting

- Multi-horizon forecasting can be useful in various applications, such as demand forecasting, stock market prediction, energy load forecasting, and resource planning.

- It allows decision-makers to anticipate and plan for multiple future time points, providing insights into how the time series is expected to evolve over a specific time horizon.

(3) How To Perform Multi Horizon Forecasting?

- To perform multi-horizon forecasting, various time series forecasting methods can be employed, including autoregressive models (e.g., ARIMA), state space models, exponential smoothing models (e.g., Holt-Winters), and machine learning algorithms (e.g., recurrent neural networks – RNNs).

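- These models can be adapted to forecast multiple time steps ahead in two main ways: iteratively, by feeding each one-step prediction back in as an input for the next step, or directly, by predicting all future time steps at once from the historical data.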
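Two common ways of producing forecasts several steps ahead are the recursive strategy (iterate a one-step model) and the direct strategy (fit one output per horizon). The sketch below contrasts them using a plain least-squares linear model on lagged values. The helper names, the noisy sine series, and the choice of a linear model are all assumptions for illustration; any of the model families listed above could take its place.

```python
import numpy as np

def fit_linear(X, y):
    """Least-squares fit with an intercept; y may be 1-D or 2-D."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_linear(coef, window):
    """Apply the fitted coefficients to one lag window."""
    return np.append(window, 1.0) @ coef

def recursive_forecast(coef, last_window, horizon):
    """Iterate a one-step model, feeding each prediction back in."""
    window = np.array(last_window, dtype=float)
    preds = []
    for _ in range(horizon):
        nxt = float(predict_linear(coef, window))
        preds.append(nxt)
        window = np.append(window[1:], nxt)  # slide the window forward
    return np.array(preds)

# Hypothetical series: a noisy sine wave.
rng = np.random.default_rng(0)
t = np.arange(200)
series = np.sin(2 * np.pi * t / 20) + 0.1 * rng.standard_normal(200)

lookback, horizon = 5, 3
n = len(series) - lookback - horizon + 1
X = np.stack([series[i : i + lookback] for i in range(n)])
Y = np.stack([series[i + lookback : i + lookback + horizon] for i in range(n)])

one_step_coef = fit_linear(X, Y[:, 0])  # recursive: model only the next step
direct_coef = fit_linear(X, Y)          # direct: one output column per horizon

last_window = series[-lookback:]
recursive_preds = recursive_forecast(one_step_coef, last_window, horizon)
direct_preds = predict_linear(direct_coef, last_window)
print(recursive_preds, direct_preds)
```

Both strategies agree on the first step, since they fit the same one-step regression. They differ from the second step onward, where the recursive forecast depends on its own earlier outputs.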

(4) Inputs For Multi Horizon Forecasting

- Multi-horizon forecasting often involves a complex mix of inputs, including:

- Static (i.e. time-invariant) covariates,

- Known future inputs,

- Other exogenous time series that are only observed in the past.

- Often there is no prior information on how these inputs interact with the target.
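One way to keep these input types apart in code is a small container with one field per category. This is a sketch under stated assumptions: the `ForecastInputs` class, its field names, and the retail-style example values are hypothetical and are not taken from any forecasting library.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class ForecastInputs:
    static: np.ndarray         # (n_static,) time-invariant covariates
    known_future: np.ndarray   # (lookback + horizon, n_known) known in advance
    observed_past: np.ndarray  # (lookback, n_observed) only seen historically
    target_past: np.ndarray    # (lookback,) past values of the target

    def check_shapes(self, lookback: int, horizon: int) -> None:
        """Raise ValueError if the time axes do not match the window sizes."""
        if self.known_future.shape[0] != lookback + horizon:
            raise ValueError("known_future must cover past and future steps")
        if self.observed_past.shape[0] != lookback:
            raise ValueError("observed_past must cover only past steps")
        if self.target_past.shape != (lookback,):
            raise ValueError("target_past must have one value per past step")

lookback, horizon = 14, 7
rng = np.random.default_rng(0)

# Hypothetical example for one store.
example = ForecastInputs(
    static=np.array([250.0]),  # e.g. store floor area
    known_future=np.column_stack([
        np.arange(lookback + horizon) % 7,         # day of week
        rng.integers(0, 2, lookback + horizon),    # planned promotion flag
    ]),
    observed_past=rng.random((lookback, 1)),       # e.g. past foot traffic
    target_past=rng.random(lookback),              # past daily sales
)
example.check_shapes(lookback, horizon)
```

The key asymmetry is on the time axis: known future inputs span both the lookback window and the forecast horizon, while observed inputs and the target stop at the present.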

(5) Challenges For Multi Horizon Forecasting

- Multi-horizon forecasting is usually more challenging than single-step forecasting. When forecasts are produced iteratively, each prediction is fed back in as an input, so errors tend to accumulate with each future time step.

- Accuracy also tends to decrease as the forecast horizon increases, making it crucial to assess and understand the uncertainty associated with longer-term predictions.
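To illustrate how uncertainty grows with the horizon, consider the simplest textbook case. Assume the series follows an AR(1) process, y_t = phi * y_{t-1} + eps_t, with known coefficient `phi` and noise standard deviation `sigma`. The h-step-ahead forecast error then has variance sigma^2 * (1 + phi^2 + ... + phi^(2(h-1))). The function name and the parameter values below are assumptions for this example; real series rarely follow such a clean model.

```python
import numpy as np

def ar1_forecast_error_std(phi, sigma, horizon):
    """Std of the h-step-ahead forecast error for h = 1..horizon under AR(1)."""
    # Var[e_h] = sigma^2 * sum_{j=0}^{h-1} phi^(2j), built up with a cumulative sum.
    powers = phi ** (2 * np.arange(horizon))
    return sigma * np.sqrt(np.cumsum(powers))

# Stationary case: the error std grows, then levels off.
print(ar1_forecast_error_std(phi=0.8, sigma=1.0, horizon=7))

# Random walk (phi = 1): the error std grows like sqrt(h) without bound.
print(ar1_forecast_error_std(phi=1.0, sigma=1.0, horizon=7))
```

Even with a perfectly specified model, the forecast error widens as the horizon grows, which is why longer-term predictions are best reported with an uncertainty estimate.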

(6) Evaluating Multi Horizon Forecasting

Evaluating the performance of multi-horizon forecasts typically involves comparing the predicted values against the actual values for each future time step.

Metrics such as mean absolute error (MAE), mean squared error (MSE), or symmetric mean absolute percentage error (SMAPE) can be used to assess the accuracy of the forecasts across the entire forecast horizon.
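A minimal sketch of per-horizon evaluation follows. It assumes forecasts and actuals are stored as arrays of shape (number of forecast windows, horizon). The `horizon_metrics` name and the toy numbers are illustrative. SMAPE has several variants in the literature; this one divides by the mean of the absolute actual and absolute predicted value, and so ranges from 0 to 200.

```python
import numpy as np

def horizon_metrics(y_true, y_pred):
    """Return MAE, MSE and SMAPE (in percent) for each forecast step."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    abs_err = np.abs(err)
    # SMAPE denominator; terms where it is zero contribute zero error.
    denom = (np.abs(y_true) + np.abs(y_pred)) / 2.0
    ratio = np.divide(abs_err, denom, out=np.zeros_like(abs_err), where=denom > 0)
    return {
        "mae": abs_err.mean(axis=0),    # average over windows, keep horizon axis
        "mse": (err ** 2).mean(axis=0),
        "smape": 100.0 * ratio.mean(axis=0),
    }

# Toy example: two forecast windows, two steps ahead each.
actual = [[10.0, 20.0], [30.0, 40.0]]
forecast = [[12.0, 20.0], [27.0, 44.0]]
metrics = horizon_metrics(actual, forecast)
print(metrics["mae"], metrics["mse"], metrics["smape"])
```

Averaging each metric over the horizon axis gives a single summary score, while keeping the per-step values shows how quickly accuracy degrades further into the future.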

Overall, multi-horizon forecasting provides a more comprehensive view of the future trajectory of a time series, enabling better decision-making and planning in various domains.

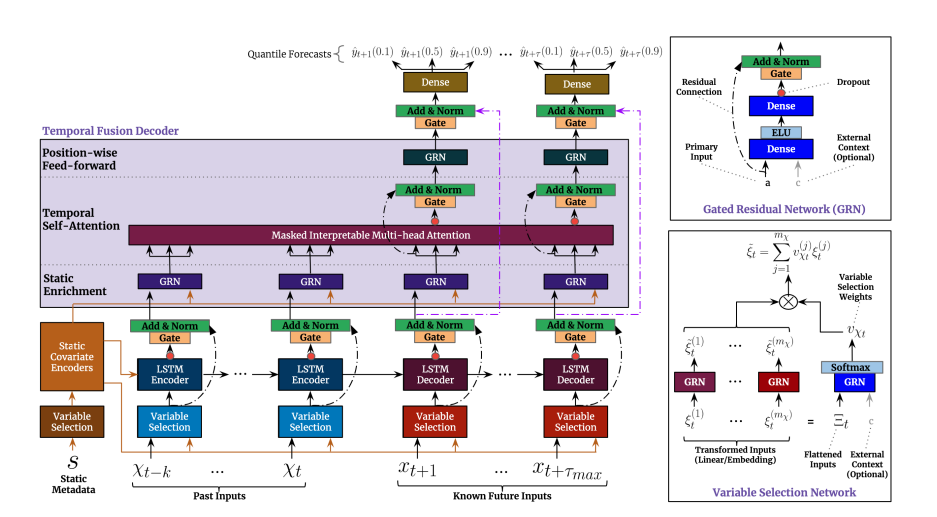

(7) Temporal Fusion Transformer.

- Temporal Fusion Transformer (TFT) is a deep learning model specifically designed for time series forecasting tasks.

- It combines the strengths of recurrent neural networks (RNNs) and transformers to capture both sequential dependencies and global patterns in time series data.

- TFT was introduced in a research paper titled “Temporal Fusion Transformers for Interpretable Multi-horizon Time Series Forecasting” by Bryan Lim, Sercan Ö. Arik, Nicolas Loeff, and Tomas Pfister.

- The key idea behind TFT is to leverage the self-attention mechanism of transformers to model the relationships between different time steps in a time series, while also incorporating the temporal dependencies through a recurrent structure.

- This allows TFT to effectively capture both local and global patterns, making it suitable for multi-horizon forecasting.

(8) Key Features Of Temporal Fusion Transformer.

Encoder-Decoder Architecture: TFT follows an encoder-decoder architecture. The encoder processes the historical values of the time series, capturing the dependencies and patterns, while the decoder predicts the future values.

Interpretable Multi-Head Attention: TFT applies self-attention across time steps to capture long-range dependencies and identify important temporal patterns. It modifies standard multi-head attention so that the heads share value weights and their outputs are aggregated additively, which yields a single set of attention weights that can be inspected to see which time steps the model relies on.

Direct Multi-Horizon Output: TFT does not feed its own predictions back in as inputs. The decoder consumes the known future inputs, and the model outputs forecasts for every step of the horizon in a single pass, so errors from earlier predictions are not compounded.

Variable Selection: TFT learns sample-dependent selection weights over its input variables, which reduce the contribution of irrelevant inputs. These weights provide a way to understand the importance and impact of different features on the forecasts.

Static And Exogenous Inputs: TFT accepts static covariates, known future inputs, and exogenous series observed only in the past. Static covariates are encoded into context vectors that condition other parts of the network, so external factors that influence the time series can improve the accuracy of predictions.

(9) Advantages Of Temporal Fusion Transformer.

One notable advantage of TFT is its interpretability. The variable selection weights show how much each feature contributes to the predictions, and the attention weights show which time steps the model relies on, providing insights into the patterns captured by the model.

TFT has shown promising results in various time series forecasting tasks, including energy load forecasting, retail sales forecasting, and traffic prediction. It offers an effective and interpretable approach for capturing complex temporal patterns and making accurate multi-horizon predictions.
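To make the self-attention over time steps concrete, and to show the kind of attention weights one would inspect for interpretability, here is a minimal NumPy sketch. It assumes plain single-head scaled dot-product attention with a causal mask and random projection matrices. It is not the TFT's actual layer, which is multi-headed and surrounded by gating; the function name and dimensions are illustrative.

```python
import numpy as np

def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over time steps.

    x: (T, d_model). Returns the attended features and the (T, T) weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Causal mask: a time step may not attend to later time steps.
    future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    # Numerically stable softmax over each row.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
T, d_model, d_head = 6, 8, 4
x = rng.standard_normal((T, d_model))  # hypothetical per-step features
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) for _ in range(3))

attended, weights = causal_self_attention(x, w_q, w_k, w_v)
# Row t of `weights` shows how much step t draws on each earlier step.
print(np.round(weights[-1], 3))
```

Each row of `weights` sums to one, so it can be read as a distribution over the time steps that contributed to that position.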

(10) Components Of Temporal Fusion Transformer.

- The Temporal Fusion Transformer (TFT) is an attention-based DNN architecture for multi-horizon forecasting that achieves high performance while enabling new forms of interpretability.

- It contains:

- (1) static covariate encoders which encode context vectors for use in other parts of the network,

- (2) gating mechanisms throughout and sample-dependent variable selection to minimize the contributions of irrelevant inputs,

- (3) a sequence-to-sequence layer to locally process known and observed inputs, and

- (4) a temporal self-attention decoder to learn any long-term dependencies present within the dataset.
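The gating and variable selection in component (2) can be sketched roughly as follows. This is a simplified illustration under stated assumptions: a gated linear unit (a sigmoid gate multiplying a linear transform) and a softmax over per-variable weights, with random parameters and made-up dimensions. The published architecture wraps these operations in further layers that are omitted here, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def glu(x, w_gate, b_gate, w_val, b_val):
    """Gated linear unit: a sigmoid gate scales a linear transform of x."""
    return sigmoid(x @ w_gate + b_gate) * (x @ w_val + b_val)

def select_variables(embeddings, w_select):
    """Softmax-weighted sum of per-variable embeddings.

    embeddings: (n_vars, d); w_select: (n_vars * d, n_vars).
    Returns the combined (d,) vector and the (n_vars,) selection weights.
    """
    logits = embeddings.reshape(-1) @ w_select
    logits = logits - logits.max()  # numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum()
    return weights @ embeddings, weights

rng = np.random.default_rng(0)
n_vars, d = 3, 4
embeddings = rng.standard_normal((n_vars, d))  # one embedding per input variable
w_select = rng.standard_normal((n_vars * d, n_vars))

combined, selection = select_variables(embeddings, w_select)
print(np.round(selection, 3))  # larger weight means the variable matters more here

# A strongly negative gate bias shuts the unit off almost entirely.
x = rng.standard_normal(d)
w = rng.standard_normal((d, d))
closed = glu(x, w, np.full(d, -50.0), w, np.zeros(d))
```

Because the selection weights depend on the input sample, different samples can emphasize different variables. A gate driven towards zero lets the network suppress a component that is not needed.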