Recurrent Neural Networks

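Table Of Contents:

- What Are Recurrent Neural Networks?
- Components Of Recurrent Neural Networks
- Strengths And Limitations Of Recurrent Neural Networks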

(1) What Are Recurrent Neural Networks?

- Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to process sequential and time-dependent data.

- This makes them well suited to tasks such as natural language processing, speech recognition, time series analysis, and handwriting recognition.

- The key feature of RNNs is their ability to maintain a hidden state that captures information from previous time steps and propagates it to future steps.

- This recurrent connectivity allows RNNs to capture temporal dependencies and patterns in the data.

(2) Components Of Recurrent Neural Networks

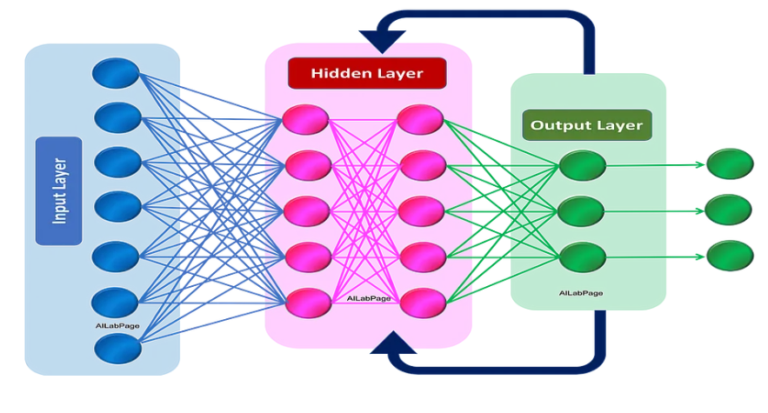

Recurrent Connections: RNNs have recurrent connections that allow information to flow from previous time steps to the current time step. This recurrent structure enables the network to maintain a memory of past information and incorporate it into the current computation.

Hidden State: RNNs maintain a hidden state, also known as the memory or context vector, which represents the network’s memory of past information. The hidden state is updated at each time step, incorporating the current input and the previous hidden state.
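For the basic ("vanilla", or Elman) RNN, this update is commonly written as below. The tanh nonlinearity, the output layer, and the names $W_{xh}$, $W_{hh}$, $W_{hy}$, $b_h$, $b_y$ are a conventional choice assumed here rather than something specified in these notes; the key point is that the same weights are reused at every time step $t$.

$$
h_t = \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right), \qquad y_t = W_{hy}\, h_t + b_y
$$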

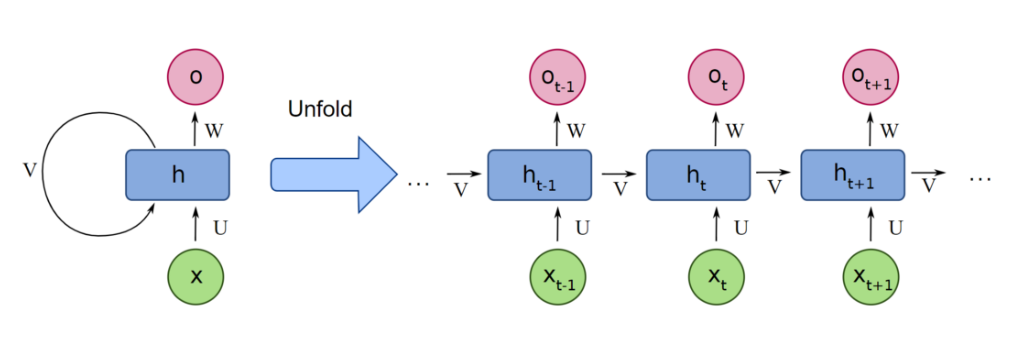

Time Unfolding: To process sequential data, RNNs are “unfolded” over time into a chain of network copies, one for each time step. All copies share the same weights, so unfolding adds no parameters; it simply makes explicit how the input sequence is processed step by step and how each step depends on the ones before it.
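A minimal sketch of unfolding, written in plain Python so it has no dependencies. The helper names `rnn_step` and `rnn_unroll`, the tanh update, and the weight names `W_xh`, `W_hh`, `b_h` are illustrative assumptions, and the weights are arbitrary values rather than trained ones. The same cell is applied once per time step, and the hidden state returned by one step is fed into the next.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def rnn_step(x: Vector, h_prev: Vector, W_xh: Matrix, W_hh: Matrix, b_h: Vector) -> Vector:
    """One step of the assumed update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        s = b_h[i]
        s += sum(W_xh[i][j] * x[j] for j in range(len(x)))            # input contribution
        s += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))  # recurrent contribution
        h_new.append(math.tanh(s))
    return h_new


def rnn_unroll(xs: List[Vector], h0: Vector, W_xh: Matrix, W_hh: Matrix, b_h: Vector) -> List[Vector]:
    """Unfold the same cell over the sequence; every step reuses the same weights."""
    states = []
    h = h0
    for x in xs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# Example: a single input pulse at t = 0, followed by zeros.
W_xh = [[0.5], [-0.3]]            # hidden size 2, input size 1 (illustrative values)
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]
states = rnn_unroll([[1.0], [0.0], [0.0]], [0.0, 0.0], W_xh, W_hh, b_h)
print(states)
```

Even though the inputs at the second and third steps are zero, the hidden states there are non-zero, because they carry information forward from the pulse at the first step.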

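Training with Backpropagation Through Time (BPTT): RNNs are typically trained using the Backpropagation Through Time (BPTT) algorithm. BPTT applies ordinary backpropagation to the unfolded network, propagating the error gradients backward from the last time step to the first. Because the weights are shared across time steps, the gradient for each weight is the sum of its contributions at every step.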
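To make BPTT concrete, here is a sketch for a scalar RNN, assuming the update h_t = tanh(w * x_t + u * h_{t-1}) and a squared-error loss on the final hidden state only (both illustrative choices, not taken from these notes). The backward loop walks the unrolled chain from the last step to the first, accumulating the gradients of the shared weights `w` and `u`; a central finite-difference check confirms the result.

```python
import math
from typing import List, Tuple


def forward(xs: List[float], w: float, u: float, h0: float = 0.0) -> List[float]:
    """Scalar RNN h_t = tanh(w * x_t + u * h_{t-1}); returns [h_0, h_1, ..., h_T]."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * x + u * hs[-1]))
    return hs


def loss(xs: List[float], y: float, w: float, u: float) -> float:
    """Squared error on the final hidden state (an illustrative loss)."""
    return 0.5 * (forward(xs, w, u)[-1] - y) ** 2


def bptt(xs: List[float], y: float, w: float, u: float) -> Tuple[float, float]:
    """Backpropagation through time: gradients of the loss w.r.t. the shared w and u."""
    hs = forward(xs, w, u)
    dw = du = 0.0
    dh = hs[-1] - y                      # dL/dh_T
    for t in range(len(xs), 0, -1):      # walk backward: t = T, ..., 1
        da = dh * (1.0 - hs[t] ** 2)     # through tanh: dh_t/da_t = 1 - h_t^2
        dw += da * xs[t - 1]             # shared weight: contributions summed over time
        du += da * hs[t - 1]
        dh = da * u                      # gradient flowing back into h_{t-1}
    return dw, du


def numerical_grad(xs: List[float], y: float, w: float, u: float, eps: float = 1e-6) -> Tuple[float, float]:
    """Central finite differences, used only to check bptt()."""
    gw = (loss(xs, y, w + eps, u) - loss(xs, y, w - eps, u)) / (2 * eps)
    gu = (loss(xs, y, w, u + eps) - loss(xs, y, w, u - eps)) / (2 * eps)
    return gw, gu


xs = [0.5, -1.0, 0.8, 0.3]
print(bptt(xs, 0.2, 0.7, 0.9))
print(numerical_grad(xs, 0.2, 0.7, 0.9))
```

The line `dh = da * u` is where the per-step factor enters: every step back in time multiplies the gradient by `u * (1 - h_t^2)`, which is the source of the vanishing and exploding behavior discussed next.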

Vanishing and Exploding Gradients: RNNs can suffer from vanishing or exploding gradients. During BPTT, the gradient that reaches an early time step is a product of one Jacobian factor per intervening step, so it can shrink or grow exponentially with the number of steps. Vanishing gradients make it difficult for the network to learn long-term dependencies; exploding gradients lead to unstable training. Techniques such as gradient clipping, gating mechanisms (e.g., LSTM and GRU), and skip connections (e.g., residual connections) are often used to mitigate these issues.
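The exponential behavior is easiest to see in a simplified linear recurrence h_t = u * h_{t-1} + x_t, where dh_T/dh_0 is exactly u raised to the number of steps. This is an assumed simplification: in a real RNN each factor is a Jacobian involving the recurrent weight matrix and the activation derivative, but the same product structure applies. The sketch also shows one common form of gradient clipping (rescaling by the L2 norm); the helper names are hypothetical.

```python
import math
from typing import List


def linear_gradient(u: float, steps: int) -> float:
    """For h_t = u * h_{t-1} + x_t, dh_T/dh_0 = u ** steps (one factor per step)."""
    grad = 1.0
    for _ in range(steps):
        grad *= u
    return grad


def clip_by_norm(grads: List[float], max_norm: float) -> List[float]:
    """Rescale the gradient vector so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)               # already small enough: leave unchanged
    scale = max_norm / norm
    return [g * scale for g in grads]    # same direction, bounded length


for u in (0.5, 1.5):
    for steps in (10, 50):
        print(f"u={u}, steps={steps}: gradient factor {linear_gradient(u, steps):.3e}")

print(clip_by_norm([30.0, 40.0], 5.0))
```

Clipping bounds the size of an exploding update while keeping its direction, but it does nothing for vanishing gradients; that is why gating architectures are listed separately above.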

Variants of RNNs: Several variants have been developed to address the limitations of the basic RNN architecture. Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) are two popular examples. Both use learned gates to control how much of the past state is kept and how much new information is written, which improves gradient flow and helps capture long-term dependencies.
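As an illustration of gating, here is a sketch of a single scalar GRU step. It follows one common formulation; papers and libraries differ on details, such as whether `z` or `1 - z` multiplies the old state and where the reset gate is applied, so treat the exact equations as an assumption. The parameter names in `p` are hypothetical, and LSTM (which adds a separate cell state and an output gate) is not shown.

```python
import math
from typing import Dict


def sigmoid(a: float) -> float:
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def gru_step(x: float, h_prev: float, p: Dict[str, float]) -> float:
    """One scalar GRU step (one common convention, assumed here)."""
    z = sigmoid(p["W_z"] * x + p["U_z"] * h_prev + p["b_z"])   # update gate
    r = sigmoid(p["W_r"] * x + p["U_r"] * h_prev + p["b_r"])   # reset gate
    # Candidate state: the reset gate decides how much of h_prev it may use.
    h_tilde = math.tanh(p["W_h"] * x + p["U_h"] * (r * h_prev) + p["b_h"])
    # Interpolate: z near 0 keeps the old state, z near 1 writes the candidate.
    return (1.0 - z) * h_prev + z * h_tilde


# Example with arbitrary illustrative parameters (not trained values).
params = {"W_z": 0.5, "U_z": -0.4, "b_z": 0.0,
          "W_r": 0.3, "U_r": 0.8, "b_r": 0.0,
          "W_h": 1.2, "U_h": 0.6, "b_h": 0.0}
h = 0.0
for x in [1.0, 0.5, -0.5]:
    h = gru_step(x, h, params)
print(h)
```

When the update gate stays near 0, the state is copied almost unchanged from step to step, so both the stored value and its gradient can survive many steps. This additive "keep" path is what the plain tanh recurrence lacks.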

(3) Strengths And Limitations Of Recurrent Neural Networks

- RNNs excel in tasks that involve sequential or time-dependent data because they can capture the context and temporal dependencies in the data.

- However, they have limitations in modeling very long sequences due to the vanishing and exploding gradient problems.

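- More recent architectures, such as transformers and their variants, have gained popularity for tasks involving long-range dependencies. Self-attention connects any two positions in a sequence directly, and it processes all positions in parallel during training, whereas an RNN must step through the sequence one element at a time.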