Feedforward Neural Network

Table Of Contents:

- What Is A Feedforward Neural Network?

- Why Are Neural Networks Used?

- Neural Network Architecture And Operation.

- How Does A Feedforward Neural Network Work?

- Components Of A Feedforward Neural Network.

- Why Does This Strategy Work?

- Importance Of Non-Linearity.

- Applications Of Feedforward Neural Networks.

(1) What Is A Feedforward Neural Network?

- The feedforward model is the simplest type of neural network because information is processed in only one direction. Data always flows forward and never backwards, regardless of how many hidden nodes it passes through.

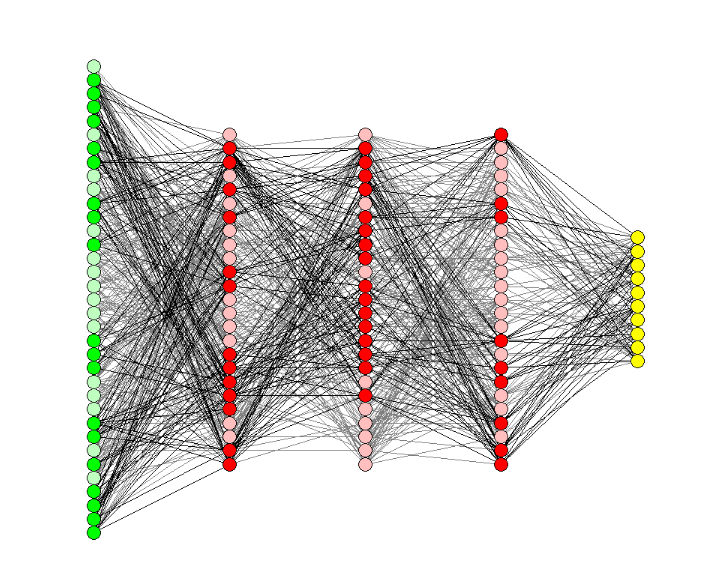

- A feedforward neural network, also known as a multilayer perceptron (MLP), is a type of artificial neural network that consists of multiple layers of interconnected nodes (neurons) organized in a directed acyclic graph.

- It is called “feedforward” because the information flows in one direction, from the input layer through the hidden layers to the output layer, without any feedback connections.

(2) Why Are Neural Networks Used?

- Neural networks are functions that map inputs to outputs. In theory, a sufficiently large neural network can approximate any continuous function, no matter how complex it is.

- However, all supervised learning involves learning a function that maps a given X to a target Y and then using that function to predict the correct Y for a new X.

- If that’s the case, how do neural networks differ from typical machine learning methods? The answer lies in inductive bias.

- The term may sound unfamiliar, but it simply means the assumptions we make about the relationship between X and Y before applying a machine learning model to the data.

- For example, the inductive bias of linear regression is that X and Y are linearly related. As a result, it fits the data with a line or a hyperplane.

- When the relationship between X and Y is non-linear and complex, though, linear regression may struggle to predict Y. In that case we need a curve, or a curved surface in higher dimensions, to approximate the relationship. Neural networks are useful here because they assume much less about the shape of that curve and learn it from the data instead.

- However, depending on the function’s complexity, we may need to set the number of neurons in each layer and the total number of layers by hand. These values are usually chosen through trial and error, guided by experience. Because they are fixed before training rather than learned from the data, they are called hyperparameters.
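The inductive-bias argument above can be made concrete with a small experiment. The sketch below is plain Python, and the data and the helper names `fit_line` and `mean_squared_error` are made up for illustration. It fits a least-squares line both to data that really is linear and to points on the parabola y = x².

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b (linear regression's assumed shape)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return a, mean_y - a * mean_x


def mean_squared_error(xs, ys, a, b):
    return sum((y - (a * x + b)) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [i / 10 for i in range(-10, 11)]   # 21 evenly spaced points in [-1, 1]
linear_ys = [2 * x + 1 for x in xs]     # data that really is linear
curved_ys = [x ** 2 for x in xs]        # data that follows a parabola

for name, ys in [("linear", linear_ys), ("parabola", curved_ys)]:
    a, b = fit_line(xs, ys)
    print(f"{name}: slope={a:.3f} intercept={b:.3f} mse={mean_squared_error(xs, ys, a, b):.4f}")
```

The line recovers the linear data almost exactly. On the parabola, however, its best slope is essentially zero and the error stays large. The limit comes from the model's built-in assumption of a straight line, not from a lack of data.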

(3) Neural Network Architecture & Operation.

- Before we look at why neural networks work, it’s important to understand what they do. And before we can grasp the design of a whole network, we must first understand what a single neuron does.

- Each input to an artificial neuron is assigned a weight. The neuron multiplies each input by its weight, sums the results, and adds a bias. The resulting weighted sum is then passed through an activation function, which is usually non-linear.

- The first layer is the input layer. It appears to have six neurons, but it simply holds the data fed into the network. The last layer is the output layer. The dataset and the type of problem determine the number of neurons in the input and output layers, while the number of hidden layers and the number of neurons in each are usually found by trial and error.

- Every input from the previous layer is connected to the first neuron of the first hidden layer. The same holds for the second neuron and for every other neuron in that layer. The outputs of the first hidden layer then become the inputs to the second hidden layer, where each neuron is again connected to every neuron in the preceding layer. Layers wired this way are called fully connected (or dense) layers.
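Here is a minimal sketch of the neuron and the fully connected layer described above, in plain Python. The names `neuron` and `dense_layer`, the choice of sigmoid and ReLU activations, and all the numbers are illustrative assumptions. None of them come from a library.

```python
import math


def sigmoid(z):
    # numerically stable logistic function, one common non-linear activation
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    return max(0.0, z)


def neuron(inputs, weights, bias, activation=sigmoid):
    # multiply each input by its weight, sum, add the bias, then apply the activation
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


def dense_layer(inputs, weight_rows, biases, activation=sigmoid):
    # fully connected: every neuron receives *all* of the inputs;
    # weight_rows[j] holds neuron j's weights, one per input
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


print(neuron([1.0, 2.0, 3.0], [0.5, -1.0, 0.25], bias=0.75))  # 0.5 (weighted sum + bias = 0)

x = [1.0, 2.0]
h1 = dense_layer(x, [[1.0, 1.0], [1.0, -1.0], [0.5, 0.5]], [0.0, 0.0, -1.0], relu)
h2 = dense_layer(h1, [[1.0, 1.0, 1.0], [-1.0, 0.0, 2.0]], [0.0, 0.5], relu)
print(h1, h2)  # [3.0, 0.0, 0.5] [3.5, 0.0]
```

The outputs of the first hidden layer (`h1`) are passed unchanged as the inputs of the second (`h2`), which mirrors the layer-to-layer wiring in the text.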

(4) How Does A Feedforward Neural Network Work?

- In its most basic form, a feedforward neural network is a single-layer perceptron. In this model, a set of inputs enters the layer and each input is multiplied by its weight. The weighted inputs are then summed to form a total.

- If this sum is greater than a predetermined threshold, usually set at zero, the output is typically 1; if the sum is less than the threshold, the output is typically -1.

- The single-layer perceptron is a popular feedforward model that is frequently used for classification. Its weights can also be learned from data, which makes it a machine-learning model rather than a fixed rule.

- Using a learning rule known as the delta rule, the network compares the outputs of its nodes with the desired values and adjusts its weights during training to produce more accurate outputs. This training procedure is a form of gradient descent.

- Weights in multilayer perceptrons are updated in much the same way, but the process is called backpropagation. Here, the error measured at the output layer is propagated backwards through the network to work out how the weights of each hidden layer should change.
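The single-layer model and the delta rule can be sketched together as follows. This is a hedged illustration with three assumptions. First, it folds a bias into the weighted sum, which is equivalent to shifting the threshold. Second, it trains with the delta rule on the raw weighted sum (the Widrow-Hoff or ADALINE variant, which is gradient descent on the squared error). Third, it applies the ±1 threshold only when classifying. The AND training set and the function names are hypothetical.

```python
def weighted_sum(inputs, weights, bias):
    # multiply each input by its weight, add them up, then add the bias
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def perceptron_output(inputs, weights, bias, threshold=0.0):
    # 1 above the threshold, -1 otherwise (a sum exactly at the threshold maps to -1 here)
    return 1 if weighted_sum(inputs, weights, bias) > threshold else -1


def train_delta_rule(samples, n_inputs, lr=0.02, epochs=2000):
    """Delta (Widrow-Hoff) rule: gradient descent on 0.5 * (target - output)**2,
    where output is the raw weighted sum, before any threshold is applied."""
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - weighted_sum(inputs, weights, bias)
            # move each weight in proportion to error * input
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# hypothetical training set: logical AND with targets -1 / +1
and_samples = [((0.0, 0.0), -1), ((0.0, 1.0), -1), ((1.0, 0.0), -1), ((1.0, 1.0), 1)]
weights, bias = train_delta_rule(and_samples, n_inputs=2)
print("weights:", weights, "bias:", bias)
print("predictions:", [perceptron_output(x, weights, bias) for x, _ in and_samples])
```

The learned values settle near the least-squares solution: both weights close to 1 and a bias close to -1.5. This places the decision boundary between (1, 1) and the other three points.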

(5) Components Of A Feedforward Neural Network.

Input Layer: The input layer is where the network receives the raw input data. Each node in the input layer represents a feature or attribute of the input data.

Hidden Layers: The hidden layers are intermediate layers between the input and output layers. They are responsible for extracting and transforming the input data into a more useful representation through a series of weighted computations. A feedforward network can have one or more hidden layers, and the number of nodes in each layer can vary.

Activation Functions: Each node in the hidden and output layers applies an activation function to the weighted sum of its inputs. Common activation functions include sigmoid, tanh, ReLU, and softmax. Activation functions introduce non-linearity to the network, allowing it to model complex relationships between inputs and outputs.
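For reference, the four activation functions named above fit in a few lines of plain Python. This is only a sketch: real libraries provide vectorized versions. The overflow guards used here (the two-branch sigmoid and subtracting the maximum in softmax) are one common choice, not the only one.

```python
import math


def sigmoid(z):
    """Squashes any real number into (0, 1); two branches avoid overflow in exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    """Squashes into (-1, 1); the output is symmetric around zero."""
    return math.tanh(z)


def relu(z):
    """Keeps positive values and replaces negative values with zero."""
    return max(0.0, z)


def softmax(scores):
    """Turns a list of scores into probabilities that sum to 1 (typical for output layers)."""
    m = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
print(softmax([1.0, 2.0, 3.0]))
```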

Weights and Biases: The connections between nodes in the network are assigned weights, which determine the strength of the connections. Additionally, each node (except the input nodes) has an associated bias term, which helps in adjusting the output of the node. The weights and biases are learned during the training process.
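Every connection carries a weight and every non-input node carries a bias, so the number of learned parameters follows directly from the layer sizes. The helper below is a hypothetical illustration for fully connected layers, and the example sizes [6, 4, 3, 1] are made up.

```python
def count_parameters(layer_sizes):
    """Count learnable weights and biases in a fully connected feedforward network.

    layer_sizes[0] is the number of input features; each later entry is the number
    of neurons in a hidden or output layer. A layer with n_in inputs and n_out
    neurons has n_in * n_out weights and n_out biases; input nodes have no bias.
    """
    pairs = zip(layer_sizes, layer_sizes[1:])
    weights = sum(n_in * n_out for n_in, n_out in pairs)
    biases = sum(layer_sizes[1:])
    return weights, biases


weights, biases = count_parameters([6, 4, 3, 1])
print(weights, biases, weights + biases)  # 39 8 47
```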

Output Layer: The output layer produces the final predictions or outputs of the network. The number of nodes in the output layer depends on the task being performed. For example, in binary classification, there is usually one output node representing the probability or confidence of belonging to one class, while in multi-class classification, there is typically one output node per class, often using the softmax activation function.

Forward Propagation: During the forward propagation phase, the input data is fed into the network, and the computations flow through the layers from the input to the output. Each layer computes its outputs based on the inputs received from the previous layer, applying the activation function and using the learned weights and biases.
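Forward propagation amounts to a loop over the layers. In this sketch, each layer is a hypothetical `(weight_rows, biases, activation)` tuple. The function returns every layer's output rather than just the last one, because training methods such as backpropagation reuse those intermediate values. The 2-3-1 network and its weights are made up.

```python
import math


def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    return max(0.0, z)


def forward_propagate(x, layers):
    """Run x through each (weight_rows, biases, activation) layer in order.

    Returns the activations of every layer, input first and output last.
    """
    activations = [list(x)]
    for weight_rows, biases, activation in layers:
        prev = activations[-1]
        activations.append([
            activation(sum(w * a for w, a in zip(row, prev)) + b)
            for row, b in zip(weight_rows, biases)
        ])
    return activations


# hypothetical 2-3-1 network: ReLU hidden layer, sigmoid output for binary classification
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0], relu),
    ([[1.0, -1.0, 1.0]], [0.5], sigmoid),
]
for layer_output in forward_propagate([2.0, 1.0], layers):
    print(layer_output)
```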

Training: Training a feedforward neural network means adjusting the weights and biases to minimize a specific loss or error function. This is typically done with backpropagation, which calculates the gradients of the loss function with respect to the network parameters, combined with an optimization algorithm such as gradient descent, which uses those gradients to update the parameters iteratively.
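The following minimal pure-Python sketch shows how backpropagation and gradient descent divide the work. The network has one sigmoid hidden layer and a single sigmoid output, and the loss function is assumed to be squared error, 0.5 * (y - t)**2. `backprop` computes gradients starting from the output layer and moving backwards, and `sgd_step` is the gradient-descent update. `max_gradient_error` compares those gradients against finite differences, a common sanity check. All names and the XOR training data are illustrative.

```python
import math
import random

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def forward(params, x):
    """Hidden layer of sigmoid neurons followed by one sigmoid output neuron."""
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, y

def loss(params, x, t):
    # assumed loss: squared error 0.5 * (y - t)**2
    return 0.5 * (forward(params, x)[1] - t) ** 2

def backprop(params, x, t):
    """Gradients of loss() for every parameter, working from the output backwards."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    # error signal at the output neuron: dL/dz_out = (y - t) * sigmoid'(z_out)
    delta_out = (y - t) * y * (1.0 - y)
    # pass it back through each output weight to the hidden neurons
    delta_hidden = [delta_out * w2[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
    grad_W1 = [[d * xi for xi in x] for d in delta_hidden]
    grad_w2 = [delta_out * hj for hj in h]
    # bias gradients equal the error signals themselves
    return grad_W1, delta_hidden, grad_w2, delta_out

def sgd_step(params, grads, lr):
    """Gradient descent: move every parameter a small step against its gradient."""
    (W1, b1, w2, b2), (gW1, gb1, gw2, gb2) = params, grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    w2 = [w - lr * g for w, g in zip(w2, gw2)]
    return W1, b1, w2, b2 - lr * gb2

def flatten(params):
    W1, b1, w2, b2 = params
    return [w for row in W1 for w in row] + list(b1) + list(w2) + [b2]

def unflatten(flat, n_in, n_hidden):
    k = n_in * n_hidden
    W1 = [flat[j * n_in:(j + 1) * n_in] for j in range(n_hidden)]
    return W1, flat[k:k + n_hidden], flat[k + n_hidden:k + 2 * n_hidden], flat[-1]

def max_gradient_error(params, x, t, eps=1e-6):
    """Largest gap between backprop gradients and central finite differences."""
    analytic = flatten(backprop(params, x, t))
    flat = flatten(params)
    n_in, n_hidden = len(params[0][0]), len(params[0])
    worst = 0.0
    for i in range(len(flat)):
        plus, minus = flat[:], flat[:]
        plus[i] += eps
        minus[i] -= eps
        numeric = (loss(unflatten(plus, n_in, n_hidden), x, t)
                   - loss(unflatten(minus, n_in, n_hidden), x, t)) / (2 * eps)
        worst = max(worst, abs(numeric - analytic[i]))
    return worst

random.seed(0)
n_hidden = 3
params = ([[random.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)],
          [0.0] * n_hidden, [random.uniform(-1, 1) for _ in range(n_hidden)], 0.0)
xor_data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

print("gradient check:", max_gradient_error(params, (0.0, 1.0), 1.0))
print("loss before:", sum(loss(params, x, t) for x, t in xor_data))
for _ in range(5000):
    for x, t in xor_data:
        params = sgd_step(params, backprop(params, x, t), lr=0.5)
print("loss after:", sum(loss(params, x, t) for x, t in xor_data))
```

Depending on the seed and learning rate, training on XOR can stall in a poor local minimum, so a low final loss is typical but not guaranteed.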

- Feedforward neural networks are widely used in various applications, including image and speech recognition, natural language processing, and regression problems.

- While they are effective at modelling complex relationships, they may struggle with very large, high-dimensional inputs such as raw images, and with tasks that involve sequential or time-dependent data. Convolutional neural networks (CNNs) are usually more suitable for grid-like data such as images, while recurrent neural networks (RNNs) are designed for sequential data.

(6) Why Does This Strategy Work?

- As we’ve seen, each neuron in the network computes something similar to linear regression: a weighted sum of its inputs plus a bias. The difference is that the neuron then applies an activation function, and each neuron has its own weight vector.

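- By stacking many such neurons, a neural network can, in theory, approximate any continuous function on a bounded range of inputs, no matter how complex or non-linear it is. This result is known as the universal approximation theorem; it guarantees that a suitable network exists, though not that training will find it.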
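The universal approximation idea can be illustrated, though not proved, with a network built by hand. In this sketch, each hidden neuron is a very steep sigmoid that behaves like a step, and the output neuron adds the steps into a staircase that follows the target function. The target sin(2πx), the steepness value, and the helper names are assumptions chosen for the demo. A trained network would find its own weights.

```python
import math


def sigmoid(z):
    # numerically stable logistic function (steep inputs would overflow a naive version)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def staircase_network(f, n_hidden, steepness=1000.0):
    """Hand-built one-hidden-layer network that approximates f on [0, 1].

    Hidden neuron i is a steep sigmoid (weight = steepness, bias = -steepness * m_i)
    that switches on near m_i, the midpoint between grid points t_{i-1} and t_i.
    Its output weight is the rise f(t_i) - f(t_{i-1}), so the summed steps trace f.
    """
    grid = [i / n_hidden for i in range(n_hidden + 1)]
    midpoints = [(grid[i - 1] + grid[i]) / 2 for i in range(1, n_hidden + 1)]
    out_weights = [f(grid[i]) - f(grid[i - 1]) for i in range(1, n_hidden + 1)]
    out_bias = f(0.0)

    def network(x):
        hidden = [sigmoid(steepness * (x - m)) for m in midpoints]
        return out_bias + sum(w * h for w, h in zip(out_weights, hidden))

    return network


def target(x):
    return math.sin(2 * math.pi * x)


xs = [i / 1000 for i in range(1001)]
for n in (10, 100):
    approx = staircase_network(target, n_hidden=n)
    print(n, "hidden neurons, max error:", max(abs(approx(x) - target(x)) for x in xs))
```

Increasing the number of hidden neurons from 10 to 100 makes the staircase finer and shrinks the maximum error, which is the intuition behind the theorem.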

(7) Importance Of Non-Linearity.

- When two or more linear objects, such as a line, plane, or hyperplane, are combined, the outcome is also a linear object: line, plane, or hyperplane. No matter how many of these linear things we add, we’ll still end up with a linear object.

- However, this is not the case when adding non-linear objects. When two separate curves are combined, the result is likely to be a more complex curve.

- In a neural network, however, we are not only adding non-linear curves together: the activation functions introduce non-linearity at every layer. In other words, each layer applies a non-linear function to an output that is already non-linear, so the shapes the network can represent become more complex with each layer.
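XOR (output 1 when exactly one of two inputs is 1) is the classic pattern that no single straight line can separate. The sketch below uses a well-known hand-picked set of weights for a tiny ReLU network that computes XOR. It then runs the same weights with the ReLU removed, showing that the non-linearity is what makes the network work. The function names are illustrative.

```python
def relu(z):
    return max(0.0, z)


def xor_network(x1, x2):
    """Hand-picked weights (not learned): a 2-2-1 network that computes XOR."""
    h1 = relu(x1 + x2)          # hidden neuron 1
    h2 = relu(x1 + x2 - 1.0)    # hidden neuron 2: only active when both inputs are 1
    return h1 - 2.0 * h2        # linear output neuron


def same_network_without_relu(x1, x2):
    # identical weights, but the activation is dropped, so the whole thing is linear
    h1 = x1 + x2
    h2 = x1 + x2 - 1.0
    return h1 - 2.0 * h2


for x1, x2 in [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]:
    print((x1, x2), xor_network(x1, x2), same_network_without_relu(x1, x2))
```

Without the ReLU, the network reduces to 2 - x1 - x2, which gives 2, 1, 1, 0 instead of 0, 1, 1, 0.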

What if activation functions were not used in neural networks?

- Without activation functions, a neural network, no matter how many layers it had, would collapse into one large linear function that a single linear regression model could easily replace.
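This collapse is easy to check numerically. Two linear layers compute W2(W1 x + b1) + b2, which equals (W2 W1) x + (W2 b1 + b2): a single linear layer. The sketch below uses made-up weights and plain-Python matrix helpers (`matvec`, `matmul`, and `vec_add` are illustrative names) to confirm that both forms give the same outputs.

```python
def matvec(M, v):
    # matrix-vector product: one weighted sum per row
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(A, B):
    # (A B)[i][j] = sum over k of A[i][k] * B[k][j]
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def vec_add(u, v):
    return [a + b for a, b in zip(u, v)]


# two hypothetical layers with NO activation function between them
W1, b1 = [[1.0, 2.0], [3.0, -1.0], [0.5, 0.0]], [1.0, 0.0, -2.0]
W2, b2 = [[2.0, -1.0, 4.0]], [0.5]


def two_linear_layers(x):
    return vec_add(matvec(W2, vec_add(matvec(W1, x), b1)), b2)


# the same map as ONE linear layer: W = W2 W1 and b = W2 b1 + b2
W = matmul(W2, W1)
b = vec_add(matvec(W2, b1), b2)


def one_linear_layer(x):
    return vec_add(matvec(W, x), b)


print("collapsed layer:", W, b)
for x in ([0.0, 0.0], [1.0, -2.0], [3.5, 0.25]):
    print(x, two_linear_layers(x), one_linear_layer(x))
```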

(8) Applications Of Feedforward Neural Networks.

- Feedforward neural networks, or multilayer perceptrons (MLPs), have a wide range of applications across various domains. Here are some common applications of feedforward neural networks:

Classification: Feedforward neural networks are commonly used for classification tasks, where the goal is to assign input data to specific classes or categories. They have been successfully applied in areas such as image classification, text classification, sentiment analysis, and medical diagnosis.

Regression: Feedforward neural networks can be used for regression tasks, where the goal is to predict a continuous output value based on input data. They have been used for applications such as predicting house prices, stock market analysis, demand forecasting, and time series analysis.

Pattern Recognition: Feedforward neural networks are effective in pattern recognition tasks, where they can learn to identify and classify patterns in data. They have been employed in areas such as handwriting recognition, speech recognition, face recognition, and object detection.

Natural Language Processing (NLP): Feedforward neural networks have been utilized in various NLP applications, including text generation, sentiment analysis, named entity recognition, part-of-speech tagging, machine translation, and text summarization.

Anomaly Detection: Feedforward neural networks can be used for anomaly detection tasks, where they learn to identify unusual or abnormal patterns in data. They have been applied in fraud detection, network intrusion detection, fault diagnosis, and outlier detection in various domains.

Recommender Systems: Feedforward neural networks can be used to build recommendation systems that provide personalized recommendations to users based on their preferences and behaviors. They have been used in applications such as movie recommendations, product recommendations, and content recommendations.

Financial Forecasting: Feedforward neural networks have been employed in financial forecasting tasks, including stock market prediction, exchange rate prediction, and financial risk assessment.

Image and Signal Processing: Feedforward neural networks are used extensively in image and signal processing tasks. They have been applied in image recognition, object detection, image segmentation, speech recognition, audio processing, and audio classification.

Bioinformatics: Feedforward neural networks have been employed in bioinformatics for tasks such as protein structure prediction, gene expression analysis, DNA sequence classification, and drug discovery.

- These are just a few examples of the many applications of feedforward neural networks. Their ability to learn complex patterns and relationships in data makes them versatile and applicable to a wide range of problems across various domains.