How Do Neural Networks Work?

Table Of Contents:

- How Do Neural Networks Work?

- Input Data.

- Feedforward.

- Activation Functions.

- Output Layer.

- Loss Function.

- Backpropagation and Training.

- Training Iterations.

- Prediction and Inference.

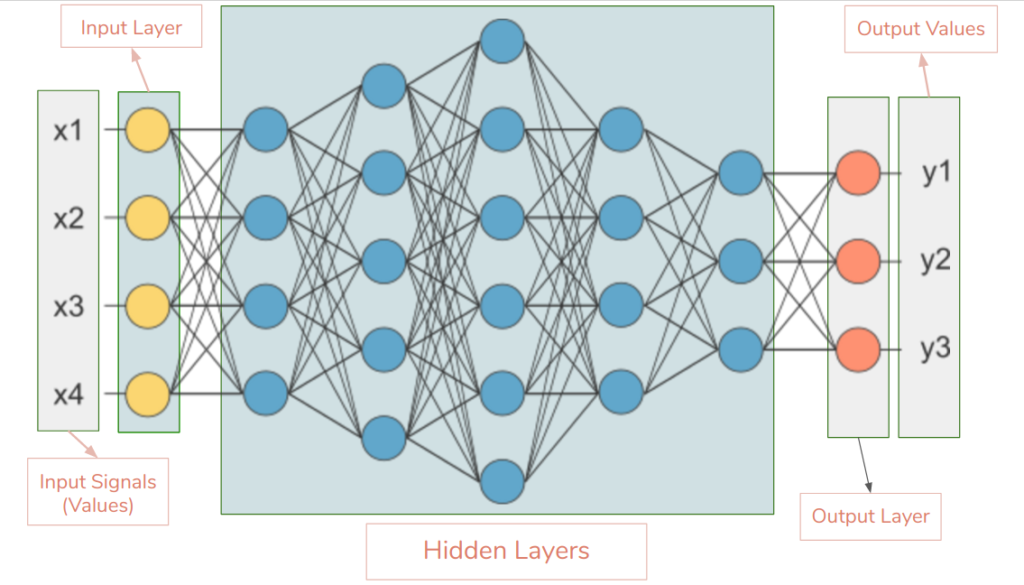

(1) How Do Neural Networks Work?

- Neural networks work by processing input data through a network of interconnected artificial neurons, also known as nodes or units.

- The network learns from the input data and adjusts the connections (weights) between neurons to make accurate predictions or perform specific tasks.

- Here’s a general overview of how neural networks work:

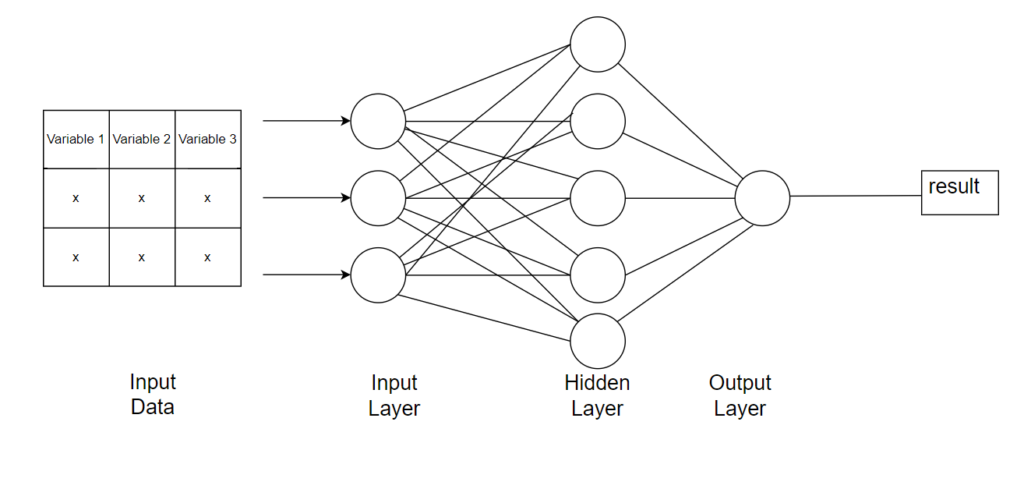

(2) Input Data.

- The neural network receives input data, which can be in the form of numerical values, images, text, or any other suitable representation.

- The input data is usually preprocessed and normalized so that features are on comparable scales and match the input format the network expects.
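As a rough illustration of the normalization step, the sketch below rescales one numeric feature in two common ways. The function names `min_max_scale` and `standardize` and the sample `ages` values are assumptions made for this example, not part of any particular library.

```python
def min_max_scale(values):
    """Rescale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical: avoid dividing by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    std = variance ** 0.5
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


ages = [18, 30, 42, 66]          # hypothetical raw feature values
scaled = min_max_scale(ages)     # [0.0, 0.25, 0.5, 1.0]
z_scores = standardize(ages)     # values centered around 0
```

Which scaling is appropriate depends on the data and the network; both are shown only to make the idea concrete.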

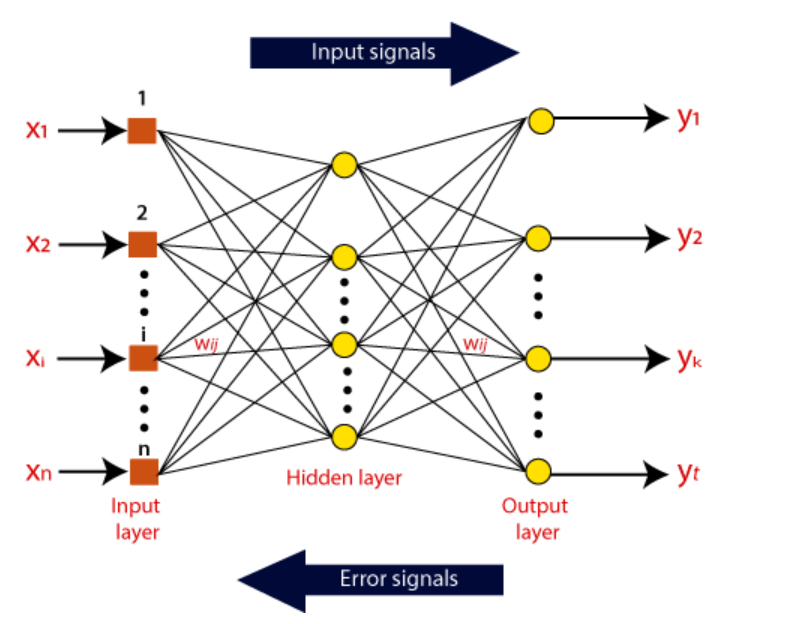

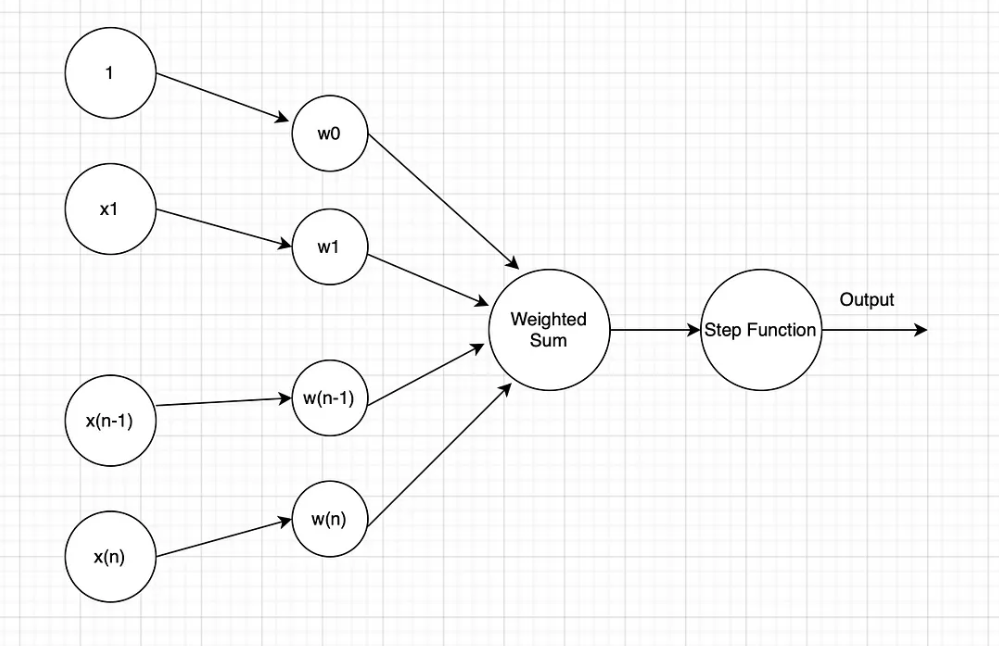

(3) Feedforward.

- The input data is passed through the network in a forward direction, layer by layer.

- Each neuron in a layer receives inputs from the previous layer, applies an activation function to the weighted sum of the inputs, and produces an output.

- The outputs of the neurons in one layer become the inputs to the neurons in the next layer.
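The forward pass described above can be sketched in a few lines of plain Python. This is a minimal sketch, assuming fully connected layers, a bias term per neuron, and a sigmoid activation; the helper names (`dense_layer`, `feedforward`) and the weight values are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """Compute one layer; weights[j] holds the incoming weights of neuron j."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs plus the neuron's bias.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def feedforward(inputs, layers):
    """Pass the inputs through each layer in order."""
    activations = inputs
    for weights, biases, activation in layers:
        # The outputs of this layer become the inputs of the next one.
        activations = dense_layer(activations, weights, biases, activation)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0], sigmoid),
    ([[1.0, -1.0]], [0.0], sigmoid),
]
output = feedforward([1.0, 1.0], layers)
```

Real frameworks express the same computation as matrix multiplications for speed, but the layer-by-layer flow is the same.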

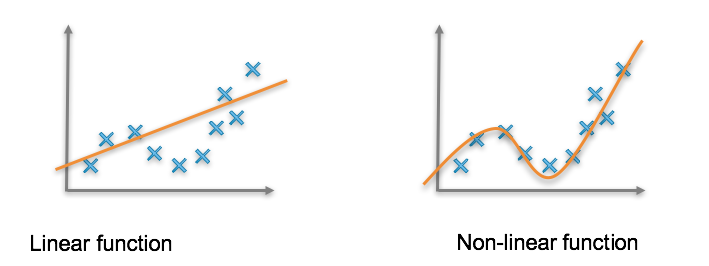

(4) Activation Functions.

- Activation functions introduce non-linearity into the network, enabling it to learn complex patterns and make non-linear decisions.

- Each neuron applies an activation function to the weighted sum of its inputs, which determines its output value.

- Common activation functions include sigmoid, tanh, ReLU (Rectified Linear Unit), and softmax, which is applied across a whole layer (typically the output layer) rather than to a single neuron.
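For reference, the four activation functions named above can be written as follows. This is a minimal sketch using only the standard library; the softmax version subtracts the maximum score first, which is a common trick for numerical stability and is an implementation choice of this example.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Squash any real number into the range (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Pass positive values through unchanged and clip negatives to zero."""
    return max(0.0, z)


def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probabilities = softmax([1.0, 2.0, 3.0])  # the largest score gets the largest probability
```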

(5) Output Layer.

- The final layer in the network is the output layer, which produces the network’s prediction or output based on the processed input data.

- The number of neurons in the output layer depends on the type of task the neural network is designed for.

- For example, in a classification task, the output layer may have neurons representing different classes, and the neuron with the highest output value indicates the predicted class.
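Picking the predicted class from the output layer amounts to finding the neuron with the highest value. A minimal sketch, assuming one output neuron per class; the class names and output values are made up for illustration.

```python
def predict_class(outputs, class_names):
    """Return the class whose output neuron has the highest value."""
    best_index = max(range(len(outputs)), key=lambda i: outputs[i])
    return class_names[best_index]


class_names = ["cat", "dog", "bird"]   # hypothetical labels
outputs = [0.1, 0.7, 0.2]              # hypothetical output-layer values
predicted = predict_class(outputs, class_names)  # "dog"
```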

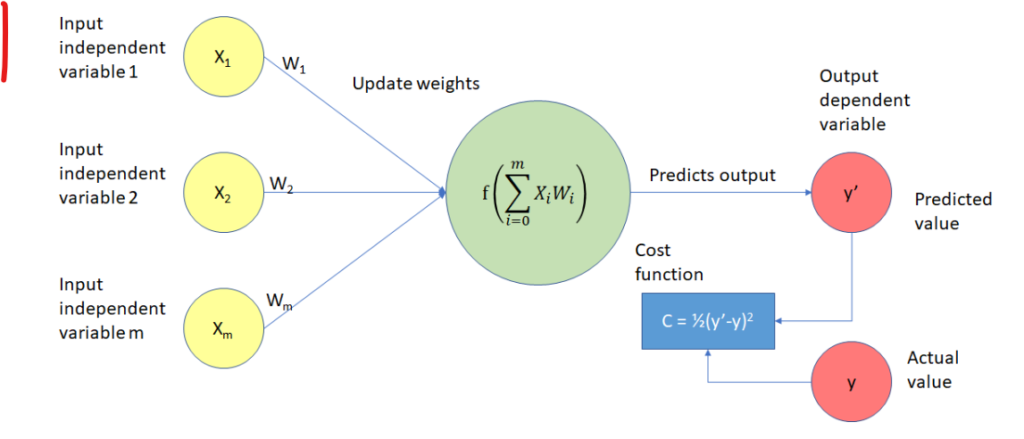

(6) Loss Function.

- A loss function measures the mismatch between the network’s predictions and the desired output.

- The choice of the loss function depends on the specific task, such as mean squared error for regression or cross-entropy for classification.

- The goal is to minimize the loss function by adjusting the network’s parameters.
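The two loss functions mentioned above can be sketched as follows. The cross-entropy shown here is the single-example form for a classifier that outputs class probabilities; the `eps` guard against `log(0)` is an assumption of this sketch.

```python
import math


def mean_squared_error(predictions, targets):
    """Average squared difference, commonly used for regression."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared) / len(squared)


def cross_entropy(probabilities, true_index, eps=1e-12):
    """Negative log-probability assigned to the correct class."""
    return -math.log(max(probabilities[true_index], eps))


mse = mean_squared_error([1.0, 2.0], [1.0, 4.0])   # 2.0
confident = cross_entropy([0.1, 0.9], true_index=1)  # small loss
unsure = cross_entropy([0.5, 0.5], true_index=1)     # larger loss
```

In both cases a lower value means the predictions are closer to the desired output.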

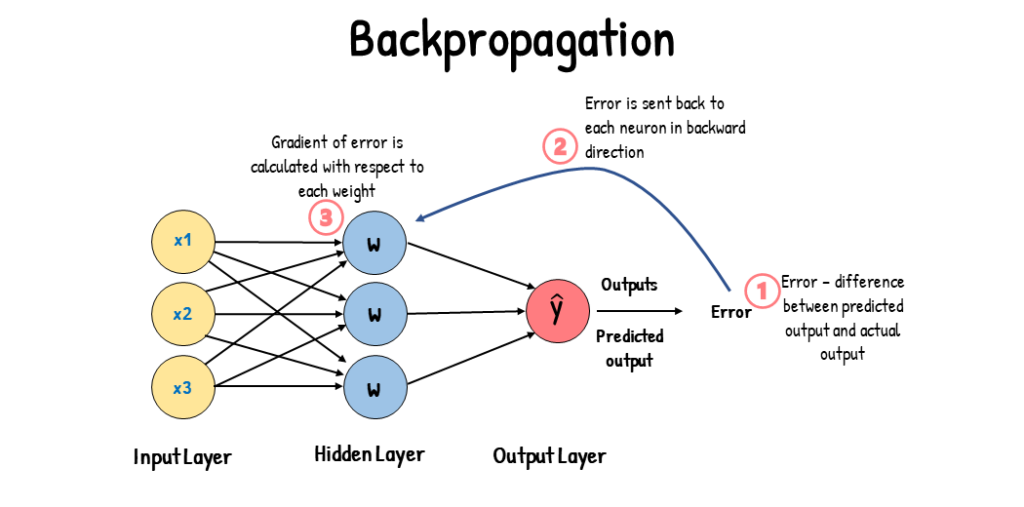

(7) Backpropagation and Training.

- Backpropagation is a learning algorithm used to train neural networks by adjusting the weights and biases based on the prediction error.

- It involves computing the gradient of the loss function with respect to the network’s parameters and updating them using optimization techniques like gradient descent.

- The gradients are propagated backward through the network, allowing the network to learn from the error and adjust the weights accordingly.
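To make the backward pass concrete, the sketch below applies the chain rule by hand to a deliberately tiny network: one input, one sigmoid hidden neuron, and one linear output neuron, with a squared-error loss. The architecture, parameter values, and learning rate are assumptions chosen for illustration; real networks rely on automatic differentiation rather than hand-written gradients.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)   # hidden neuron
    y = w2 * h + b2            # linear output neuron
    return h, y


def loss_fn(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backward(params, x, target):
    """Propagate the error from the output back to every parameter."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    d_y = y - target               # dL/dy
    d_w2 = d_y * h                 # dL/dw2
    d_b2 = d_y                     # dL/db2
    d_h = d_y * w2                 # error passed back to the hidden neuron
    d_z1 = d_h * h * (1.0 - h)     # through the sigmoid derivative
    d_w1 = d_z1 * x                # dL/dw1
    d_b1 = d_z1                    # dL/db1
    return [d_w1, d_b1, d_w2, d_b2]


def gradient_descent_step(params, grads, learning_rate):
    """Move each parameter a small step against its gradient."""
    return [p - learning_rate * g for p, g in zip(params, grads)]


params = [0.5, 0.0, -0.3, 0.1]     # hypothetical initial w1, b1, w2, b2
x, target = 1.0, 1.0
loss_before = loss_fn(params, x, target)
grads = backward(params, x, target)
params = gradient_descent_step(params, grads, learning_rate=0.1)
loss_after = loss_fn(params, x, target)   # lower than loss_before
```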

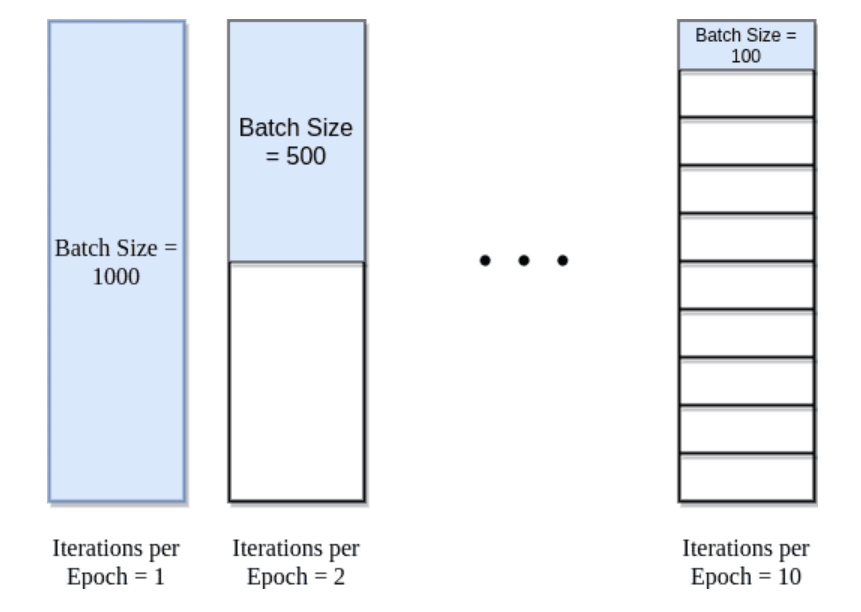

(8) Training Iterations.

- The training process involves iterating over the training data multiple times, also known as epochs.

- In each epoch, the network processes batches or individual instances of the training data, computes the loss, and updates the weights using backpropagation.

- The iterative training process continues until the network’s performance converges or reaches a satisfactory level.
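The epoch loop can be sketched with the simplest possible model, a single linear neuron trained one example at a time. This is a sketch under stated assumptions: the `train` function, the toy data set (points on the line y = 2x + 1), the learning rate, and the stopping tolerance are all hypothetical.

```python
def train(data, learning_rate=0.05, max_epochs=1000, tolerance=1e-6):
    """Fit prediction = w * x + b by stochastic gradient descent."""
    w, b = 0.0, 0.0
    history = []
    for epoch in range(max_epochs):
        total_loss = 0.0
        for x, target in data:
            prediction = w * x + b           # forward pass
            error = prediction - target
            total_loss += 0.5 * error ** 2   # squared-error loss
            w -= learning_rate * error * x   # gradient step for the weight
            b -= learning_rate * error       # gradient step for the bias
        mean_loss = total_loss / len(data)
        history.append(mean_loss)
        if mean_loss < tolerance:
            # Stop early once the loss is satisfactorily small.
            break
    return w, b, history


# Toy training set generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b, history = train(data)
```

The `history` list records the mean loss per epoch, which is a simple way to check whether training is converging.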

(9) Prediction and Inference.

- Once the neural network is trained, it can be used to make predictions or perform tasks on new, unseen data.

- The input data is fed into the trained network, and the output layer produces the predicted values or class probabilities based on the learned patterns and relationships.
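Inference is just a forward pass with the learned parameters held fixed. A minimal sketch, assuming a single output layer followed by softmax; the weights, biases, and class names below are hypothetical stand-ins for values that training would have produced.

```python
import math


def softmax(scores):
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(features, weights, biases, class_names):
    """Run a forward pass and return the predicted label with class probabilities."""
    scores = [
        sum(w * x for w, x in zip(neuron_weights, features)) + bias
        for neuron_weights, bias in zip(weights, biases)
    ]
    probabilities = softmax(scores)
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return class_names[best_index], probabilities


# Hypothetical parameters standing in for values learned during training.
weights = [[2.0, -1.0], [-1.0, 2.0]]
biases = [0.0, 0.0]
class_names = ["class_a", "class_b"]

label, probabilities = predict([1.0, 0.0], weights, biases, class_names)  # "class_a"
```

No weights are updated here: the same parameters are reused for every new input.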