Hyperbolic Tangent (tanh) Activation.

Table Of Contents:

- What Is Hyperbolic Tangent (tanh) Activation?

- Formula & Diagram For Hyperbolic Tangent (tanh) Activation.

- Where To Use Hyperbolic Tangent (tanh) Activation?

- Advantages & Disadvantages Of Hyperbolic Tangent (tanh) Activation.

(1) What Is Hyperbolic Tangent (tanh) Activation?

- Maps the input to a value between -1 and 1.

- Similar to the sigmoid function but centred around zero.

- Commonly used in hidden layers of neural networks.

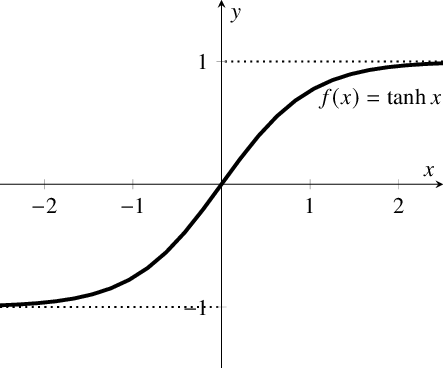

(2) Formula & Diagram For Hyperbolic Tangent (tanh) Activation

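Formula:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} = 2\sigma(2x) - 1, \qquad \sigma(x) = \frac{1}{1 + e^{-x}}$$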
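A minimal sketch of the formula tanh(x) = (e^x - e^-x) / (e^x + e^-x) in Python. The function name `tanh_from_formula` is hypothetical, and in practice `math.tanh` (or a framework's built-in) should be used. Evaluating e^x literally overflows for large |x|, so the sketch divides numerator and denominator by e^|x| and restores the sign afterwards, which gives the same value without overflow.

```python
import math

def tanh_from_formula(x: float) -> float:
    """Evaluate tanh(x) = (e^x - e^-x) / (e^x + e^-x) without overflow.

    Dividing numerator and denominator by e^|x| gives
    (1 - e^(-2|x|)) / (1 + e^(-2|x|)) for the magnitude; the sign of x is
    then restored because tanh is an odd function.
    """
    em1 = math.expm1(-2.0 * abs(x))   # e^(-2|x|) - 1, lies in [-1, 0], never overflows
    magnitude = -em1 / (2.0 + em1)    # (1 - e^(-2|x|)) / (1 + e^(-2|x|))
    return math.copysign(magnitude, x)

# Compare against the standard library on a few inputs, including huge ones.
for x in [-1000.0, -2.0, 0.0, 0.5, 2.0, 1000.0]:
    print(f"x={x:>8}: formula={tanh_from_formula(x):+.6f}  math.tanh={math.tanh(x):+.6f}")
```

`math.expm1` is used instead of `1 - math.exp(...)` so that the result stays accurate when x is very close to zero.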

Diagram:

(3) Where To Use Hyperbolic Tangent (tanh) Activation?

Hidden Layers of Neural Networks: The tanh activation function is often used in hidden layers of neural networks. Like the sigmoid, it adds the non-linearity that lets a network model complex relationships in the data; its main advantage over the sigmoid is that its outputs are zero-centred. Because the output range is symmetric (between -1 and 1), the activations passed to the next layer tend to average near zero instead of being all positive, which helps keep the network's representations balanced and tends to make optimization easier than with sigmoid hidden units.

Recurrent Neural Networks (RNNs): Tanh activations are commonly used for the hidden states of recurrent neural networks. RNNs are effective at modeling sequential and temporal data, and the tanh activation helps capture and propagate contextual information over time. Because tanh is bounded, the hidden state stays between -1 and 1 no matter how many times it is fed back through the recurrent connections, and because it is symmetric, it preserves the sign of the information flowing through them.
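A minimal sketch of where tanh sits in a plain (vanilla) RNN hidden-state update, h_t = tanh(W_x·x_t + W_h·h_(t-1) + b). The function name `rnn_step`, the sizes, and the weight values are hypothetical toy choices; real RNN layers vectorize this computation and learn the weights.

```python
import math
from typing import List

def rnn_step(x_t: List[float], h_prev: List[float],
             W_x: List[List[float]], W_h: List[List[float]],
             b: List[float]) -> List[float]:
    """One vanilla-RNN update: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    h_t = []
    for i in range(len(b)):
        pre = b[i]
        pre += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))      # input contribution
        pre += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))  # recurrent contribution
        h_t.append(math.tanh(pre))  # keeps each component in [-1, 1] and preserves the sign of pre
    return h_t

# Hypothetical toy weights: 1-D input, 2-D hidden state.
W_x = [[0.8], [-0.5]]
W_h = [[0.9, 0.0], [0.0, 0.9]]
b = [0.0, 0.0]

h = [0.0, 0.0]
for x in [[1.0], [2.0], [-3.0], [10.0]]:
    h = rnn_step(x, h, W_x, W_h, b)
    print([round(v, 4) for v in h])
```

Even with the large input 10.0 at the last step, every hidden-state component stays within -1 to 1.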

Gradient Flow and Training Stability: Near the origin, the tanh function has a steeper gradient than the sigmoid function: its derivative peaks at 1 at x = 0, compared with 0.25 for the sigmoid. Larger gradients for inputs near zero can speed up convergence during training and, relative to the sigmoid, reduce the vanishing gradient problem in deeper networks. They do not eliminate it, however: for inputs far from zero the tanh gradient shrinks even faster than the sigmoid's (see the disadvantages below).
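A small sketch comparing the two derivatives, using the standard identities sigmoid'(x) = sigmoid(x)(1 - sigmoid(x)) and tanh'(x) = 1 - tanh(x)^2. The helper names are illustrative only; the sigmoid is written in a numerically stable form so negative inputs do not overflow.

```python
import math

def sigmoid(x: float) -> float:
    # Numerically stable logistic sigmoid.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)          # peaks at 0.25 when x = 0

def tanh_grad(x: float) -> float:
    t = math.tanh(x)
    return 1.0 - t * t            # peaks at 1.0 when x = 0

for x in [0.0, 1.0, 2.0, 4.0]:
    print(f"x={x}: sigmoid'={sigmoid_grad(x):.4f}  tanh'={tanh_grad(x):.4f}")
```

At x = 0 the tanh derivative is four times the sigmoid's, but by x = 2 it has already dropped below the sigmoid's, which is why the advantage applies mainly to inputs near zero.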

Image Processing: In certain image processing tasks, tanh is applied in the output layer of a neural network so that predicted pixel values fall between -1 and 1, with the training images normalized to the same range. This helps in tasks such as image denoising or image generation, where the network must produce pixel values within a fixed range.

GANs (Generative Adversarial Networks): Tanh activations are commonly used in the output layer of the generator network in GANs, which are used to generate realistic synthetic data such as images or text. For images, the tanh output range of -1 to 1 matches training images whose pixel intensities have been rescaled from 0 to 255 into -1 to 1; the generated values are then mapped back to 0 to 255 for display.
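A minimal sketch of the scaling convention described in the two paragraphs above, assuming 8-bit images: pixel values in 0 to 255 are mapped to -1 to 1 for training, and tanh outputs are mapped back for display. The helper names `to_tanh_range` and `from_tanh_range` are hypothetical; image libraries and deep-learning frameworks provide their own normalization utilities.

```python
from typing import List

def to_tanh_range(pixels: List[int]) -> List[float]:
    """Scale 8-bit pixel values from [0, 255] to [-1, 1] (e.g. GAN training data)."""
    return [p / 127.5 - 1.0 for p in pixels]

def from_tanh_range(values: List[float]) -> List[int]:
    """Map tanh outputs in [-1, 1] back to displayable 8-bit values in [0, 255]."""
    out = []
    for v in values:
        v = max(-1.0, min(1.0, v))               # defensive clamp for values not produced by tanh
        out.append(int(round((v + 1.0) * 127.5)))
    return out

pixels = [0, 64, 128, 255]
scaled = to_tanh_range(pixels)
print(scaled)
print(from_tanh_range(scaled))
```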

(4) Advantages & Disadvantages Of Hyperbolic Tangent (tanh) Activation.

Advantages Of Hyperbolic Tangent (tanh) Activation.

Output Range: The tanh function maps the input to a range between -1 and 1, so, unlike the sigmoid function (which maps to the range 0 to 1), it can represent negative values directly. This property can be useful for tasks where negative values are meaningful, such as sentiment analysis or audio processing.

Non-Linearity and Smoothness: The tanh function introduces non-linearity into neural networks, allowing them to capture complex relationships in the data. It is smooth and continuous, so gradient-based optimization algorithms can update weights efficiently during training, and its output changes gradually as the input moves between negative and positive values.

Symmetry: The tanh function is symmetric about the origin: it is an odd function, so tanh(-x) = -tanh(x) and tanh(0) = 0. As a result, inputs that are symmetric around zero produce outputs that average to zero, which helps balance the network's representations and reduces potential bias towards positive or negative values.
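A short numerical check of these properties: odd symmetry, the identity tanh(x) = 2·sigmoid(2x) - 1 (tanh is a shifted, rescaled sigmoid), and zero-centred outputs. The input list is an arbitrary toy choice, symmetric around zero by construction.

```python
import math

def sigmoid(x: float) -> float:
    # Numerically stable logistic sigmoid.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

xs = [-3.0, -1.0, -0.25, 0.25, 1.0, 3.0]   # toy inputs, symmetric around 0

# Odd symmetry: tanh(-x) == -tanh(x)
for x in xs:
    assert math.isclose(math.tanh(-x), -math.tanh(x))

# tanh is a shifted, rescaled sigmoid: tanh(x) = 2*sigmoid(2x) - 1
for x in xs:
    assert math.isclose(math.tanh(x), 2.0 * sigmoid(2.0 * x) - 1.0, abs_tol=1e-12)

# On symmetric inputs, tanh outputs average to 0 while sigmoid outputs average to 0.5.
mean_tanh = sum(math.tanh(x) for x in xs) / len(xs)
mean_sigmoid = sum(sigmoid(x) for x in xs) / len(xs)
print(f"mean tanh = {mean_tanh:.3f}, mean sigmoid = {mean_sigmoid:.3f}")
```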

Differentiability: The tanh function is differentiable everywhere, which makes it suitable for gradient-based optimization with backpropagation. Its derivative, 1 - tanh²(x), can be computed directly from the function's output, so the gradients needed during backpropagation are cheap to obtain once the forward pass has been computed.
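A minimal sketch of the derivative d/dx tanh(x) = 1 - tanh(x)^2, checked against a central finite difference. The helper names and the step size `h = 1e-5` are illustrative choices; the finite difference is used here only to verify the closed form, not as a training method.

```python
import math

def tanh_derivative(x: float) -> float:
    """d/dx tanh(x) = 1 - tanh(x)^2 (equivalently sech(x)^2)."""
    t = math.tanh(x)          # in backprop this is typically the cached forward output
    return 1.0 - t * t

def central_difference(f, x: float, h: float = 1e-5) -> float:
    """Numerical estimate of f'(x), used only to check the closed form."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

for x in [-2.0, -0.5, 0.0, 0.5, 2.0]:
    analytic = tanh_derivative(x)
    numeric = central_difference(math.tanh, x)
    print(f"x={x:+.1f}: analytic={analytic:.6f}  numeric={numeric:.6f}")
```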

Disadvantages Of Hyperbolic Tangent (tanh) Activation.

Vanishing Gradient Problem: Like the sigmoid function, the tanh function suffers from the vanishing gradient problem. Its derivative is at most 1 and becomes extremely small for inputs far from zero. Because backpropagation multiplies these local derivatives layer by layer, the gradients reaching the early layers of a deep network can shrink towards zero, making it hard for those layers to learn meaningful representations. This can hinder the training of deep architectures and limit their ability to capture complex patterns.
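A toy illustration of why the gradient shrinks with depth. It assumes, purely for illustration, that every layer has the same pre-activation value and a weight of 1, so the backpropagated gradient reduces to a product of local tanh slopes; real networks have weights and varying pre-activations, and the function name `gradient_through_layers` is hypothetical.

```python
import math

def tanh_grad(x: float) -> float:
    t = math.tanh(x)
    return 1.0 - t * t

def gradient_through_layers(pre_activation: float, depth: int) -> float:
    """Product of tanh slopes over `depth` layers (toy model: unit weights, same input)."""
    g = 1.0
    for _ in range(depth):
        g *= tanh_grad(pre_activation)   # chain rule: multiply by each layer's local derivative
    return g

for z in [0.0, 0.5, 2.0]:
    print(z, [f"{gradient_through_layers(z, d):.2e}" for d in (1, 5, 10, 20)])
```

Only at exactly zero does the product stay at 1; with a pre-activation of 2.0, twenty layers shrink the gradient below 1e-20.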

Saturation and Output Range: The tanh function saturates for extreme inputs, where the output is close to -1 or 1. In these saturated regions the gradients become very small, so learning slows down or weight updates effectively stop. Saturation can be problematic when the network needs to make precise distinctions or when the input data contains outliers that push units into these regions.

Limited Output Sensitivity: The tanh function is less sensitive to changes around the extremes (-1 and 1). Small changes in inputs in these saturated regions may not produce significant changes in the output, limiting the network’s ability to make precise distinctions in those regions.
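A small sketch of this loss of sensitivity: each pair of inputs below differs by the same amount (1.0), yet the change in output shrinks by orders of magnitude away from the origin. The input pairs are arbitrary illustrative values; the last line relies on standard 64-bit floats, in which tanh of moderately large inputs rounds to exactly 1.0.

```python
import math

# Pairs of inputs that differ by the same amount (1.0): near the origin and in the tails.
pairs = [(0.0, 1.0), (3.0, 4.0), (6.0, 7.0)]

for a, b in pairs:
    change = math.tanh(b) - math.tanh(a)
    print(f"tanh({b}) - tanh({a}) = {change:.2e}")

# Far enough out, float64 tanh rounds to 1.0, so distinct inputs become indistinguishable.
print(math.tanh(25.0) == math.tanh(30.0))
```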