Introduction To NLP

Table Of Contents:

- What Is Natural Language?

- Features of Natural Language.

- Natural Language Vs. Programming Language.

- What Is Natural Language Processing?

- Fundamental Tasks NLP Performs:

- Common NLP Tasks:

- Techniques and Approaches:

- Applications Of NLP.

- Models Used In NLP.

- Specific NLP Models.

(1) What Is Natural Language?

- Natural Language refers to the language that humans use to communicate with each other, including spoken and written forms.

- It encompasses the complex and nuanced ways in which humans express themselves, including grammar, syntax, semantics, pragmatics, and phonology.

- Natural Language is characterized by its variability, ambiguity, and context dependency, which make it challenging for computers to understand and process.

Spoken Language:

- Casual conversations

- Dialogues

- Speeches

- Interviews

- Lectures

Written Language:

- Books

- Articles

- Emails

- Social media posts

- Text messages

- Formal documents


(2) Features of Natural Language.

Flexibility and Variability:

- Natural language allows for multiple ways to express the same meaning or intent.

- It can be influenced by factors like regional dialects, social contexts, and individual styles.

Ambiguity and Context-Dependence:

- The same words or phrases can have multiple interpretations depending on the context.

- Understanding natural language often requires considering the broader context, including the speaker, the situation, and shared knowledge.

Implicit Meaning and Inference:

- Natural language communication often involves conveying meaning beyond the literal words used.

- Humans can make inferences and understand implicit meaning based on their knowledge and experience.

Creativity and Dynamism:

- Natural language allows for the creative expression of new ideas, concepts, and metaphors.

- It evolves over time, with the introduction of new vocabulary, idioms, and linguistic patterns.

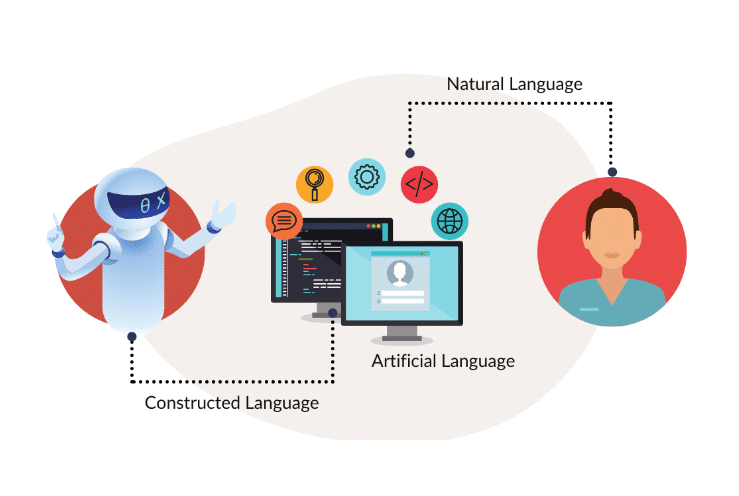

(3) Natural Language Vs. Programming Language.

- Natural languages are the languages humans use to communicate with each other, while programming languages are formal, unambiguous languages used to write software and give instructions to computers.

- Natural languages are more flexible and expressive, while programming languages are more rigid and precise to enable effective translation to machine-executable code.

- Natural languages do not have a single origin or inventor. Even if we trace a language back to its roots, it is virtually impossible to identify one person who created it, whereas a programming language is deliberately designed.

Natural Language:

- Used for human-to-human communication.

- Ambiguous, flexible, and context-dependent.

- Allows for the expression of complex ideas, emotions, and nuances.

- Evolves organically over time.

Programming Language:

- Used for human-to-computer communication.

- Precisely defined, unambiguous syntax and semantics.

- Designed for giving specific, unambiguous instructions to computers.

- Relatively static, with new languages and versions introduced over time.

(4) What Is Natural Language Processing?

- Natural Language Processing (NLP) is a field of study that focuses on the interaction between human language and computers.

- It involves the development of algorithms and techniques that enable computers to understand, interpret, and generate human language.

(5) Fundamental Tasks NLP Performs:

- Phonetics and Phonology: The study of the sounds of language and how they are organized.

- Morphology: The study of the structure of words and how they are formed.

- Syntax: The study of the grammatical structure of sentences.

- Semantics: The study of the meaning of words and sentences.

- Pragmatics: The study of how language is used in context.
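As a rough illustration of the morphology level, the sketch below tokenizes a sentence and strips a few common English suffixes. The suffix list and the function names are hypothetical and deliberately tiny; real morphological analyzers rely on much richer rules or learned models.

```python
import re

# Hypothetical, deliberately tiny suffix list used only for illustration.
SUFFIXES = ("ing", "ed", "s")

def tokenize(text):
    """Split text into lowercase alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def strip_suffix(word):
    """Remove the first matching suffix if at least 3 letters of stem remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

tokens = tokenize("The cats jumped over walls")
stems = [strip_suffix(token) for token in tokens]
print(stems)
```

A rule list like this over-strips many words (for example, it would turn "bus" into "bu" if the length check were relaxed), which is one reason morphology is treated as its own area of study.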

(6) Common NLP Tasks:

- Text Classification: Categorizing text into predefined classes or topics.

- Named Entity Recognition: Identifying and extracting named entities (e.g., persons, organizations, locations) from text.

- Sentiment Analysis: Determining the sentiment (positive, negative, or neutral) expressed in text.

- Machine Translation: Translating text from one language to another.

- Question Answering: Automatically answering questions based on given text or knowledge sources.

- Dialogue Systems: Developing conversational agents that can engage in natural language interactions.
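To make one of these tasks concrete, here is a minimal sketch of sentiment analysis using word lists. The lexicon and the `sentiment` function are assumptions made for this example; production systems typically use trained models rather than fixed word sets.

```python
import re

# Hypothetical mini-lexicon for illustration only.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "terrible", "hate", "poor"}

def sentiment(text):
    """Label text by counting positive and negative lexicon hits."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love this great phone"))
print(sentiment("The battery is terrible"))
print(sentiment("It arrived on Tuesday"))
```

This approach ignores negation ("not good") and context, which is exactly the kind of ambiguity that motivates the learned approaches described later.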

(7) Techniques and Approaches:

- Rule-based Approaches: Using predefined rules and patterns to process language.

- Statistical Approaches: Leveraging machine learning algorithms to learn from data and make predictions.

- Deep Learning Approaches: Utilizing neural networks to learn complex representations of language.

- Probabilistic Approaches: Modeling language using probability distributions.
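The probabilistic approach can be sketched with a bigram language model, which estimates the probability of a word given the previous word from counts in a corpus. The function names and the three-sentence corpus below are made up for illustration, and the estimate is a plain maximum-likelihood ratio with no smoothing.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark the sentence start and end.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, curr in zip(tokens, tokens[1:]):
            counts[prev][curr] += 1
    return counts

def bigram_probability(counts, prev, curr):
    """Maximum-likelihood estimate of P(curr | prev); 0.0 if prev is unseen."""
    total = sum(counts[prev].values())
    return counts[prev][curr] / total if total else 0.0

corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram_model(corpus)
print(bigram_probability(model, "the", "cat"))
print(bigram_probability(model, "cat", "sat"))
```

Because unseen word pairs get probability zero here, practical models add smoothing or, in the deep learning approaches above, replace counts with learned representations.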

(8) Applications Of NLP:

Text Processing and Analysis:

- Sentiment Analysis: Determining the sentiment (positive, negative, neutral) expressed in text.

- Topic Modelling: Identifying the main themes and topics discussed in a corpus of text.

- Text Summarization: Generating concise summaries of longer text documents.

- Named Entity Recognition: Identifying and extracting important entities (people, organizations, locations, etc.) from the text.
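As one possible illustration of text summarization, the sketch below performs a very simple extractive summary: it scores each sentence by the average corpus frequency of its words and keeps the top-scoring ones. The `summarize` function and the scoring rule are assumptions chosen for brevity, not a standard algorithm from any particular library, and stop words are not filtered.

```python
import re
from collections import Counter

def summarize(text, num_sentences=1):
    """Return the highest-scoring sentences, kept in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(re.findall(r"[a-z]+", text.lower()))

    def score(sentence):
        # Average frequency of the sentence's words across the whole text.
        tokens = re.findall(r"[a-z]+", sentence.lower())
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    top = sorted(sentences, key=score, reverse=True)[:num_sentences]
    return [s for s in sentences if s in top]

text = "NLP models process text. NLP models learn from text data. Cats sleep."
print(summarize(text))
```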

Language Translation and Generation:

- Machine Translation: Translating text from one language to another.

- Chatbots and Virtual Assistants: Generating human-like responses in conversational interactions.

- Automatic Text Generation: Creating original text for tasks like article writing, content generation, etc.

Information Extraction and Retrieval:

- Question Answering: Providing answers to natural language questions.

- Information Extraction: Extracting structured data from unstructured text.

- Document Retrieval: Searching and retrieving relevant documents based on user queries.
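Document retrieval is often introduced with TF-IDF scoring, where a term counts more if it is frequent in a document but rare across the collection. The sketch below is a minimal version under stated assumptions: whitespace tokenization, a `log(N / df) + 1` inverse document frequency, and hypothetical function names. Libraries use variations of this weighting.

```python
import math
from collections import Counter

def tokenize(text):
    """Lowercase and split on whitespace."""
    return text.lower().split()

def rank_documents(query, documents):
    """Return document indices ordered from most to least relevant."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(documents)
    # Document frequency: number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scored = []
    for index, tokens in enumerate(tokenized):
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if term in tf:
                idf = math.log(n_docs / df[term]) + 1.0
                score += (tf[term] / len(tokens)) * idf
        scored.append((score, index))
    # Sort by descending score, breaking ties by original position.
    return [index for score, index in sorted(scored, key=lambda p: (-p[0], p[1]))]

docs = [
    "the cat sat on the mat",
    "dogs chase the cat",
    "stock markets fell sharply",
]
print(rank_documents("cat mat", docs))
```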

Speech Recognition and Processing:

- Speech-to-Text Transcription: Converting spoken language to written text.

- Voice Command and Control: Enabling voice-based interactions with devices and systems.

- Audio Analysis: Extracting insights and metadata from audio recordings.

Intelligent Automation and Decision Support:

- Automated Text Processing: Streamlining tasks like document classification, routing, and workflow management.

- Predictive Analytics: Generating insights and recommendations based on textual data.

- Risk and Compliance Monitoring: Identifying potential risks or violations in textual data.

Personalization and Recommendation Systems:

- Content Recommendation: Suggesting relevant content (articles, products, etc.) based on user preferences.

- Personalized Assistants: Providing tailored recommendations and support based on the user's needs and context.

Biomedical and Healthcare Applications:

- Clinical Decision Support: Extracting insights from medical records and literature to aid clinical decision-making.

- Pharmacovigilance: Monitoring and analyzing drug-related adverse events from textual sources.

- Biomedical Research: Accelerating scientific discovery through text mining and knowledge extraction.

(10) Models Used In NLP.

Transformer-based Models:

- Examples: BERT, GPT, T5, RoBERTa, XLNet, ALBERT, etc.

- Utilize the Transformer architecture, which leverages attention mechanisms to capture contextual relationships in text.

- Perform well on a wide range of NLP tasks, such as text classification, question answering, text generation, and named entity recognition.

- Can be fine-tuned on specific tasks or datasets to achieve state-of-the-art performance.
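The attention mechanism mentioned above can be sketched as scaled dot-product attention: each query is compared with every key, the scores are normalized with a softmax, and the result weights a sum of the values. This is a minimal single-head version in plain Python, with no learned projections, masking, or batching; the function names are chosen for this example.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Two identical keys receive equal weight, so the output is the mean of the values.
result = attention([[1.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]], [[2.0, 0.0], [0.0, 4.0]])
print(result)
```

Because every token can attend to every other token in one step, this operation captures contextual relationships without processing the sequence in order.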

Recurrent Neural Network (RNN) Models:

- Examples: LSTM, GRU, BiLSTM, etc.

- Leverage the sequential nature of text data, processing it one token at a time.

- Effective in tasks like language modeling, machine translation, and text generation.

- Plain RNNs suffer from vanishing/exploding gradients and struggle to model long-term dependencies; gated variants such as LSTM and GRU mitigate, but do not eliminate, these issues.
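The token-by-token processing and the vanishing-gradient issue can both be seen in a scalar toy RNN. The sketch below assumes a single hidden unit with the update `h_t = tanh(w * h_{t-1} + u * x_t)`; the weights `w` and `u` are arbitrary values picked for illustration. It tracks the derivative of the final state with respect to the initial state, which is a product of one factor per time step.

```python
import math

def run_rnn(inputs, w=0.5, u=1.0):
    """Run a scalar RNN and return (final state, d h_T / d h_0)."""
    h = 0.0
    grad = 1.0
    for x in inputs:
        # One recurrent step: the new state depends on the previous state.
        h = math.tanh(w * h + u * x)
        # Chain rule through this step: d h_t / d h_{t-1} = w * (1 - h_t^2).
        grad *= w * (1.0 - h * h)
    return h, grad

_, grad_short = run_rnn([0.5] * 5)
_, grad_long = run_rnn([0.5] * 50)
print(grad_short, grad_long)
```

With these weights every per-step factor is below 0.5, so the gradient shrinks geometrically with sequence length, which is why early tokens have little influence on learning in long sequences.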

Convolutional Neural Network (CNN) Models:

- Examples: TextCNN, CharCNN, etc.

- Utilize convolutional layers to capture local patterns and features in text data.

- Perform well on tasks like text classification, sentiment analysis, and sentence modeling.

- Effective in capturing local contextual information but may struggle with long-range dependencies.
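A one-dimensional convolution followed by max pooling shows how such models pick up local patterns regardless of position. The sketch below simplifies each token to a single number, whereas text CNNs convolve over embedding vectors; the input values and kernel are made up for illustration.

```python
def conv1d(sequence, kernel):
    """Slide the kernel over the sequence (no padding, stride 1)."""
    k = len(kernel)
    return [
        sum(sequence[i + j] * kernel[j] for j in range(k))
        for i in range(len(sequence) - k + 1)
    ]

def max_pool(features):
    """Max-over-time pooling: keep the strongest response."""
    return max(features)

kernel = [1, 1]  # responds most strongly to two adjacent active tokens
middle = conv1d([0, 0, 1, 1, 0, 0], kernel)
start = conv1d([1, 1, 0, 0, 0, 0], kernel)
print(middle, max_pool(middle))
print(start, max_pool(start))
```

The pooled value is the same wherever the pattern occurs, but the kernel only ever sees two neighboring positions at a time, which is the source of the long-range limitation noted above.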

Hybrid Models:

- Combine different neural network architectures (e.g., Transformer + RNN, Transformer + CNN) to leverage the strengths of multiple approaches.

- Designed to capture both local and global features in text data, leading to improved performance on various NLP tasks.

Unsupervised and Self-Supervised Models:

- Examples: Word2Vec, GloVe, ELMo, BERT, GPT, etc.

- Trained on large unlabeled text corpora to learn general-purpose language representations.

- Can be fine-tuned on specific tasks or used as feature extractors for other NLP models.

- Facilitate transfer learning, enabling models to be applied to various NLP tasks with limited task-specific data.
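Training on unlabeled text works because the text supplies its own labels. As one example, the skip-gram variant of Word2Vec learns by predicting the words that surround each word. The sketch below only generates those (center, context) training pairs; the function name and window size are assumptions for this example, and the model that consumes the pairs is omitted.

```python
def skipgram_pairs(tokens, window=1):
    """Build (center, context) pairs from raw tokens, with no manual labels."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

pairs = skipgram_pairs(["the", "cat", "sat"])
print(pairs)
```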

Domain-Specific Models:

- Trained on specialized text data from a particular domain (e.g., biomedical, legal, financial).

- Capture domain-specific terminology, semantics, and contextual information.

- Perform better on tasks within the targeted domain compared to general-purpose models.

(11) Specific NLP Models.

- GPT (Generative Pre-trained Transformer): Developed by OpenAI, the GPT series includes GPT-2, GPT-3, and GPT-4, which are capable of tasks such as language translation, question answering, and essay writing. GPT-4 is a multimodal LLM that accepts both text and image inputs.

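- BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, it is a bidirectional Transformer encoder suited to language-understanding tasks such as text classification, question answering, and named entity recognition. Its original paper reported state-of-the-art results on 11 NLP tasks.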

- RoBERTa (Robustly Optimized BERT Approach): An optimized version of BERT, it outperforms BERT in individual tasks on the GLUE benchmark.

- ALBERT (A Lite BERT): A lighter version of BERT, it addresses the memory and training-time costs of larger models by sharing parameters across layers and factorizing the embedding matrix.

- XLNet: A generalized autoregressive pre-training method that builds on Transformer-XL; its authors reported that it outperforms BERT on several NLP tasks.

- T5 (Text-to-Text Transfer Transformer): Developed by Google, it treats all NLP tasks as text-to-text problems, enabling the use of the same model for different tasks.

- PaLM (Pathways Language Model): Introduced by Google Research, it has an enormous 540 billion parameters and excels in language tasks, reasoning, and coding tasks.

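- ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately): Pre-trained to detect which tokens in the input have been replaced rather than to predict masked tokens, it is known for its computational efficiency and performs well even at small model sizes.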

- DeBERTa (Decoding-enhanced BERT with Disentangled Attention): Proposed by Microsoft Research, it includes enhancements over BERT, such as disentangled attention and an enhanced mask decoder.

- ELMo (Embeddings from Language Models): Built on a deep bidirectional LSTM language model, it produces contextual word embeddings that capture how a word's meaning depends on the sentence around it.

- UniLM (Unified Language Model): Developed by Microsoft Research, it is pre-trained with unidirectional, bidirectional, and sequence-to-sequence language-modeling objectives, so a single Transformer can serve both understanding and generation tasks.

- StructBERT: An extension of BERT that incorporates language structures into pre-training, thereby improving its performance in various downstream tasks.

- SentenceTransformers: A Python framework for sentence embeddings, with pretrained models covering more than 100 languages.

- ERNIE (Enhanced Representation through kNowledge Integration): Developed by Baidu, it’s designed to understand human language nuances and improve NLP task performance.

- CTRL (Conditional Transformer Language Model): Introduced by Salesforce Research, it generates text conditioned on control codes that let users specify the style, content, or domain of the output.
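The text-to-text framing described for T5 above can be illustrated by how inputs are prepared: the task is named in a plain-text prefix, so one model can receive every task as a string and answer with a string. The prefixes below follow the style reported for T5, while the `TASK_PREFIXES` table and `to_text_to_text` helper are hypothetical names for this sketch; no model is loaded or called.

```python
# Task prefixes in the style reported for T5; the dictionary keys are hypothetical.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
}

def to_text_to_text(task, text):
    """Turn a (task, input) pair into the single string a text-to-text model reads."""
    return TASK_PREFIXES[task] + text

print(to_text_to_text("translate_en_de", "The house is small."))
print(to_text_to_text("summarize", "NLP covers many tasks and models."))
```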