Long Short-Term Memory Network

Table Of Contents:

- Introduction To LSTM.

(1) Problem With ANN

Problem-1: Sequence Is Lost

- The problem with the ANN architecture is that its design cannot process sequential information.

- When we pass sequential information to an ANN, we have to pass everything at once.

- When we pass every word at once, its sequence is lost in the process.

- We will not be able to know which word comes after which.
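One common way of passing every word at once is a bag-of-words count vector. This encoding is an assumption for illustration; the notes above do not name a specific one. The sketch below shows that two sentences with opposite meanings map to the same vector, so the word order is gone. The helper name `bag_of_words` and the tiny vocabulary are hypothetical.

```python
from collections import Counter


def bag_of_words(sentence, vocabulary):
    """Count how often each vocabulary word occurs, ignoring word order."""
    counts = Counter(sentence.lower().split())
    return [counts[word] for word in vocabulary]


vocabulary = ["dog", "bites", "man"]
vec_a = bag_of_words("dog bites man", vocabulary)
vec_b = bag_of_words("man bites dog", vocabulary)

print(vec_a)           # [1, 1, 1]
print(vec_b)           # [1, 1, 1]
print(vec_a == vec_b)  # True: the network cannot tell the two sentences apart
```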

Problem-2: Fixed Input Size.

- An ANN has a fixed input size.

- It cannot handle variable-length input data.
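A usual workaround for a fixed input size is to pad short sentences and truncate long ones. The sketch below assumes sentences are already converted to lists of integer token ids and that id 0 is reserved for padding; both are assumptions, and `fit_to_length` is a hypothetical helper. It shows that truncation throws words away, which is exactly the limitation described above.

```python
def fit_to_length(token_ids, fixed_length, pad_id=0):
    """Truncate or right-pad a list of token ids to exactly fixed_length."""
    clipped = token_ids[:fixed_length]
    return clipped + [pad_id] * (fixed_length - len(clipped))


short_sentence = fit_to_length([5, 8], fixed_length=4)
long_sentence = fit_to_length([5, 8, 2, 9, 7, 3], fixed_length=4)

print(short_sentence)  # [5, 8, 0, 0]  padded with zeros
print(long_sentence)   # [5, 8, 2, 9]  the last two words are lost
```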

(2) Problem With RNN

Exploding / Vanishing Gradient Problem.

- When the RNN came along, it solved the issues faced by ANNs.

- But it has major issues of its own.

- It suffers from the vanishing and exploding gradient problems.

- When we do backpropagation through long sentences, the gradient is a product of many partial-derivative terms, one per time step. When these terms are smaller than 1, the product shrinks toward zero.

- Hence the network cannot learn the long-term dependencies of the sentence.

- An exploding gradient occurs in the opposite case: when the initial weights are so high that the partial-derivative terms are greater than 1, their product grows very quickly and can overflow to infinity. Sometimes it becomes NaN.

- These are the two issues with RNN.
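Both problems come from multiplying many terms together. The sketch below is a simplification: it assumes every time step contributes the same derivative factor (0.5 or 1.5, chosen arbitrarily), which a real RNN does not do, but it shows how quickly the product collapses or blows up. `gradient_product` is a hypothetical helper.

```python
import math


def gradient_product(factor, steps):
    """Multiply the same per-step derivative factor `steps` times."""
    product = 1.0
    for _ in range(steps):
        product *= factor
    return product


for steps in (10, 50, 100):
    vanishing = gradient_product(0.5, steps)  # factor below 1: shrinks toward 0
    exploding = gradient_product(1.5, steps)  # factor above 1: grows without bound
    print(steps, vanishing, exploding)

# With enough steps the product overflows to infinity,
# and arithmetic on infinities produces NaN.
overflowed = gradient_product(1.5, 2000)
not_a_number = overflowed - overflowed
print(math.isinf(overflowed), math.isnan(not_a_number))  # True True
```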

(2) Core Ideas Behind LSTM

Story:

- 1000 years ago there was a state in India called Pratapgadh, ruled by a king named “Vikram”. He was a very powerful king and also a kind one.

- An evil king named “Kattapa” attacked Pratapgadh. Vikram fought bravely and defeated “Kattapa”.

- But sadly he died in that war. Everybody in the state mourned “Vikram”.

- After a few years “Vikram’s” elder son “Vikram Jr” became the king. He was much like “Vikram” and had 1.5 times his father’s qualities.

- “Vikram Jr” wanted to avenge his father’s death.

- When Vikram Jr. was 20 years old he attacked “Kattapa”. He fought bravely, but sadly he also died.

- Vikram Jr. also had a son, Vikram Super Jr.

- Vikram Super Jr was not like his father or his grandfather. He was super smart.

- When he became the king, he also wanted to avenge the deaths of his father and grandfather.

- When he was 15 he attacked “Kattapa” and used his intelligence to kill him.

Conclusion:

- How will an LSTM decide whether this story has a positive or a negative sentiment?

(3) Working Principle Of LSTM

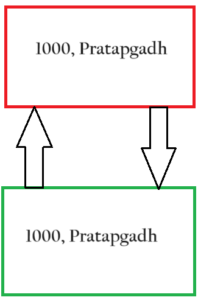

- If you pass the above story to the LSTM network, it will process it word by word (1000, Pratapgadh, Vikram, etc.).

- At the same time, it maintains two contexts.

- One is the short-term context and the other is the long-term context.

- The short-term context keeps the currently important words from the story. It is like the scene that is playing on the screen right now.

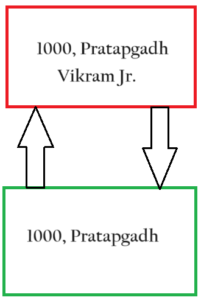

- For example, the story begins: ‘1000’ years ago there was a place called ‘Pratapgadh’.

- The short-term memory will keep the two words, ‘1000’ and ‘Pratapgadh’.

- At the same time, you will be thinking about which words should go to the long-term memory for future processing.

- Here we see that ‘1000’ and ‘Pratapgadh’ are important words that should go to the long-term memory.

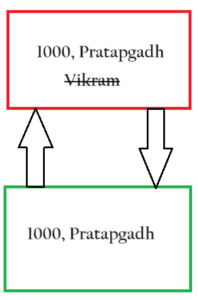

- When King ‘Vikram’ comes into the story, we think he is an important person, so we keep him in the long-term memory.

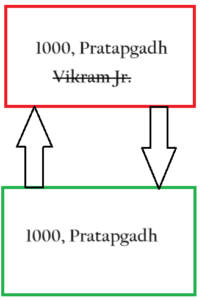

- But as the story progresses, King ‘Vikram’ dies and he is not important anymore.

- Hence we remove ‘Vikram’ from the long-term memory.

- After ‘Vikram’s’ death his son ‘Vikram Jr.’ comes into the picture. We think he will be the hero of the story, hence we add him to the long-term memory.

- After the battle with ‘Kattapa’, ‘Vikram Jr.’ also dies. Hence we should remove him from the long-term memory.

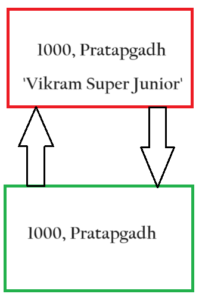

- After some time his son ‘Vikram Super Jr’ becomes the king of the state. Now he is the hero of the story, hence we add him to the long-term memory.

- Now, when someone asks you how the story was, you decide based on what your long-term memory holds about the story.

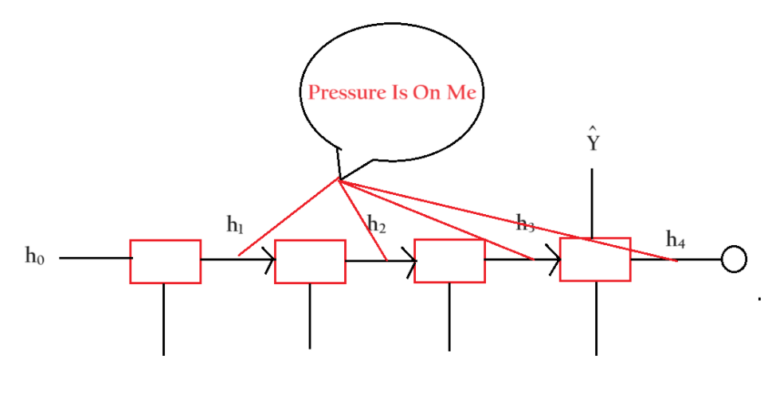

- Does the RNN have the capability to maintain the Long-term and short-term context of the sentence?

- In the case of the RNN, the entire pressure to keep track of short-term and long-term memory is on the single line which is the feedback line.

- But we see that this architecture suffers from the Vanishing/Exploding gradient problem.

- Hence computer scientists decided to keep two separate paths, one to store the short-term memory and one to store the long-term memory.

- Take an example, ‘Arpita Is A Beautiful Girl’. When ‘Arpita’ comes into the system, our short-term memory tells our long-term memory that ‘Arpita’ is an important point, so keep it in the long-term memory.

- When we start our next sentence, our model will predict ‘he/she’ based on the word present in the long-term memory.

- If we add a new sentence, “Subrat Is A Great Guy”, we are now talking about “Subrat”. Hence we will remove ‘Arpita’ from the long-term memory and put ‘Subrat’ inside it.

- Now if we write our next sentence, ‘he/she’ will be decided based on ‘Subrat’, which is present inside the long-term memory.

- Unless you remove something from the long-term memory, it will stay there until the end.
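The last point can be sketched with the usual form of the LSTM long-term memory update: the new memory is a "forget" fraction of the old memory plus a "write" fraction of a new candidate value. The numbers below are hand-picked for illustration, not taken from a trained network, and `update_long_term` is a hypothetical helper.

```python
def update_long_term(memory, forget, write, candidate):
    """Keep a fraction of the old memory and add a fraction of the new candidate."""
    return forget * memory + write * candidate


memory = 1.0  # pretend 1.0 encodes 'Arpita'

# Keep everything and write nothing: the stored value survives many steps.
for _ in range(50):
    memory = update_long_term(memory, forget=1.0, write=0.0, candidate=0.0)
kept = memory

# Forget the old item and write a new one (pretend -1.0 encodes 'Subrat').
replaced = update_long_term(kept, forget=0.0, write=1.0, candidate=-1.0)

print(kept, replaced)  # 1.0 -1.0
```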

Conclusion:

- Now the LSTM can store both the short-term and long-term values.

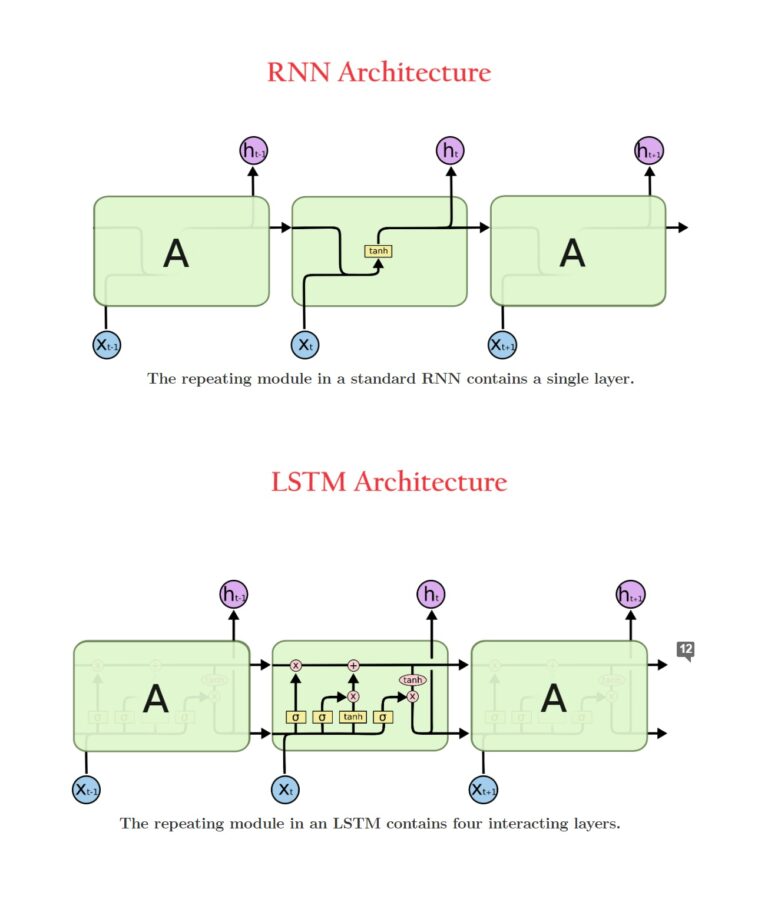

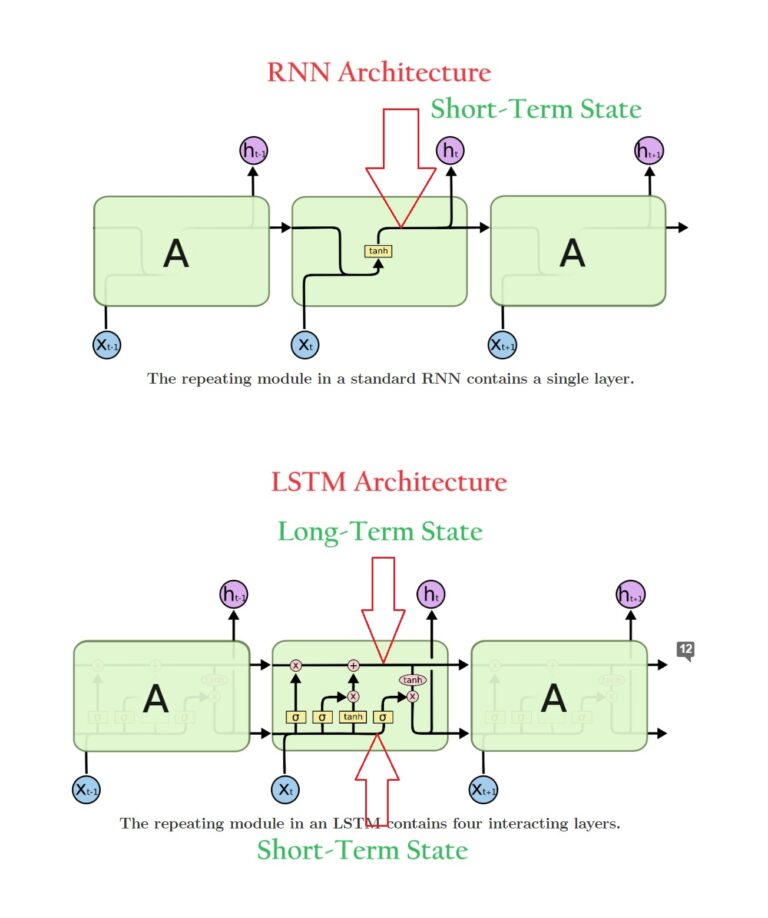

(4) Difference Between RNN & LSTM

Difference:

- An RNN maintains only short-term memory, whereas an LSTM maintains both short-term and long-term memory.

- An RNN has a simple architecture, whereas an LSTM has a more complex architecture inside it.

- In an LSTM it is not sufficient to have two separate memory paths. They also need to communicate with each other.

- When the short-term memory finds that something important has come in from the input, it tells the long-term memory to store it.

- When the short-term memory finds that something needs to be removed from the long-term memory, it tells the long-term memory to remove it.

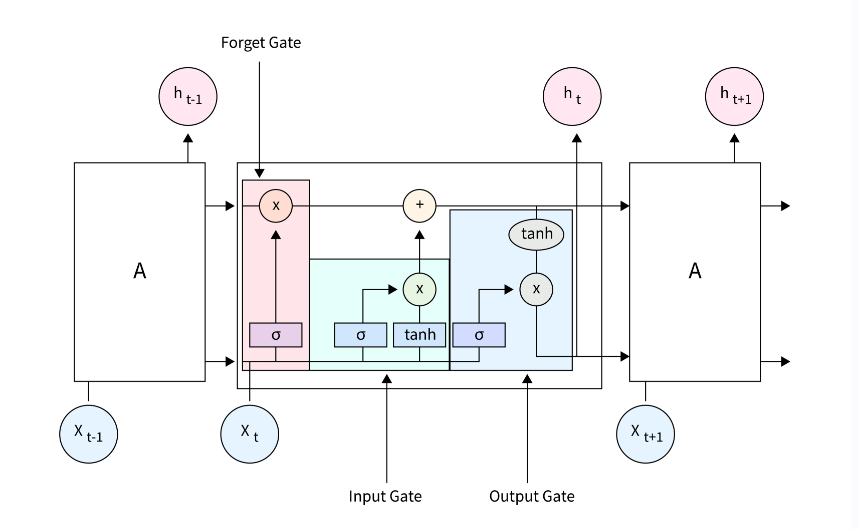

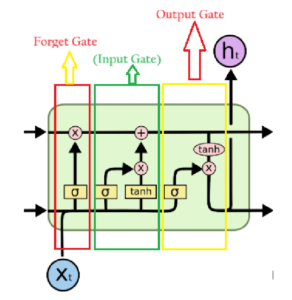

(5) Architecture Of LSTM Network.

- The biggest difference between RNN & LSTM is that an RNN maintains only a single state, whereas an LSTM maintains two states: a short-term state and a long-term state.

- Let us zoom in on the LSTM architecture.

Forget Gate:

- Based on the current input and the previous short-term context it will decide which item to remove from the long-term memory.

Input Gate:

- Based on the current input and the previous short-term context, it will decide which new items to add to the long-term memory.
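In the standard LSTM formulation, the forget gate and the input gate act together on the long-term memory. The notation below is an assumption, since these notes define no symbols: $x_t$ is the current input, $h_{t-1}$ the previous short-term context, $c_{t-1}$ the previous long-term memory, $\sigma$ the sigmoid function, and $\odot$ element-wise multiplication.

$$
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{(forget gate)} \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{(input gate)} \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) && \text{(candidate items)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(new long-term memory)}
\end{aligned}
$$

Each gate value lies between 0 and 1, so $f_t$ close to 0 removes an item from the long-term memory and $i_t$ close to 1 writes a new one.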

Output Gate:

- Based on the current input and the previous short-term context, the output gate decides which parts of the long-term memory to expose.

- The result is the output of that LSTM unit, and it is also passed on as the short-term memory for the next step.
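Putting the three gates together, one time step of an LSTM can be sketched as below. This is a minimal single-unit version following the standard LSTM equations, written with plain Python scalars instead of matrices. The function name `lstm_step`, the `params` layout, and the weight values are all assumptions for illustration; nothing here is trained, and the simple `sigmoid` is not protected against overflow for very large negative inputs.

```python
import math


def sigmoid(z):
    """Squash a number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM.

    x      : current input
    h_prev : previous short-term state
    c_prev : previous long-term state
    params : maps "forget", "input", "candidate", "output" to (w_x, w_h, b)
    """
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))       # how much old memory to keep
    i = sigmoid(pre_activation("input"))        # how much new content to write
    g = math.tanh(pre_activation("candidate"))  # the new content itself
    o = sigmoid(pre_activation("output"))       # how much memory to expose

    c = f * c_prev + i * g    # updated long-term state
    h = o * math.tanh(c)      # updated short-term state, also the unit's output
    return h, c


# Arbitrary, untrained weights shared by all four gates.
params = {name: (0.5, 0.5, 0.0)
          for name in ("forget", "input", "candidate", "output")}

h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:+.2f}  short-term h={h:+.4f}  long-term c={c:+.4f}")
```

Both states are carried from one step to the next, which is the difference from a plain RNN: there, only a single state is fed back.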