Architecture Of LSTM Networks

Table Of Contents:

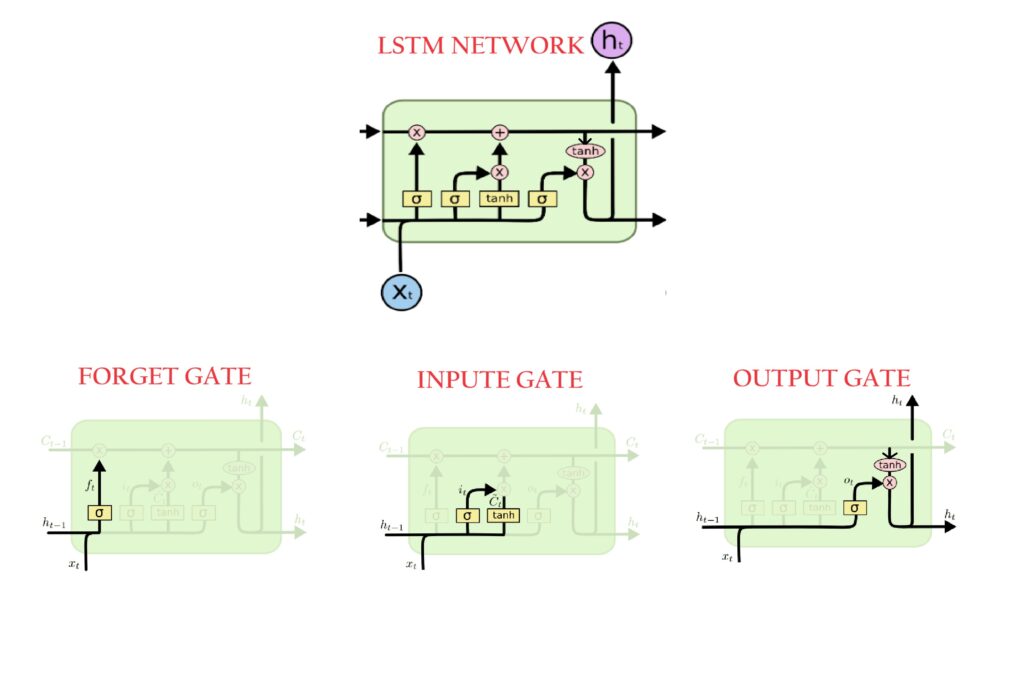

- Architectural Diagram Of LSTM Network.

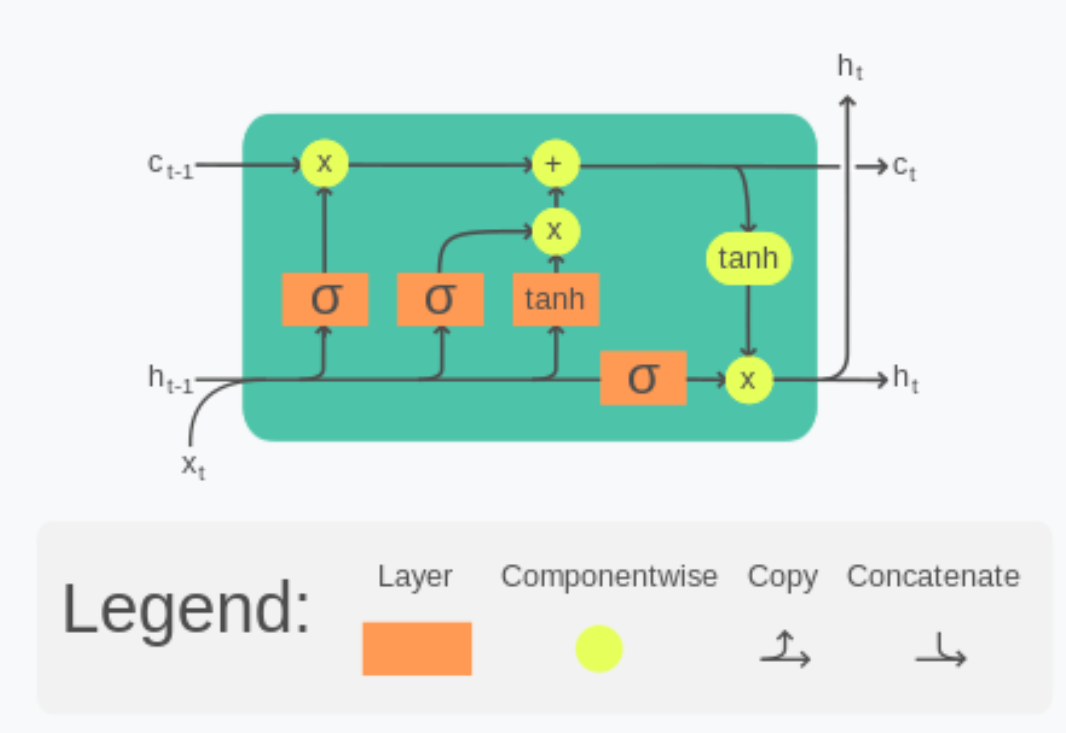

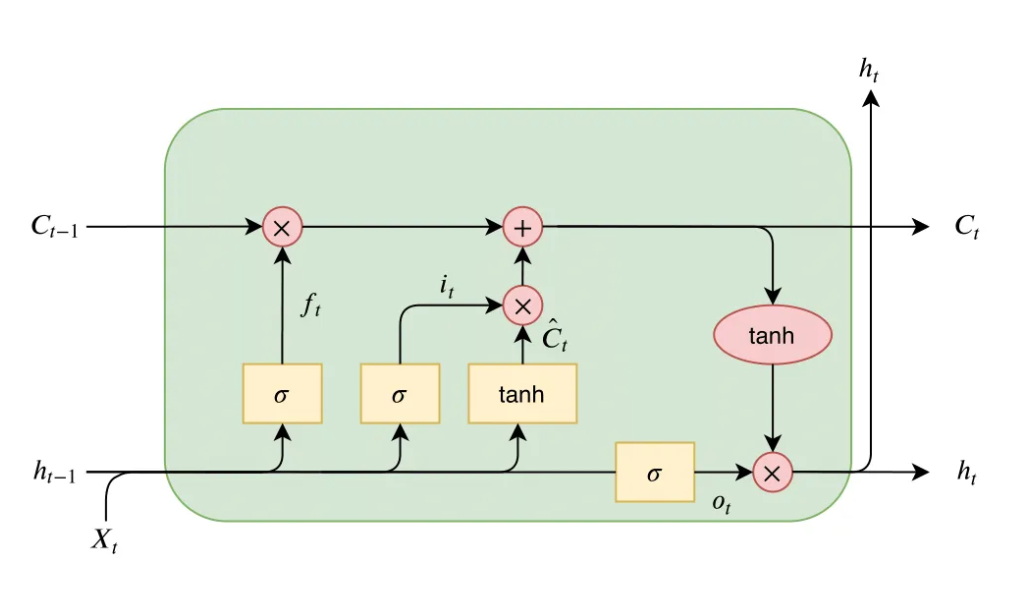

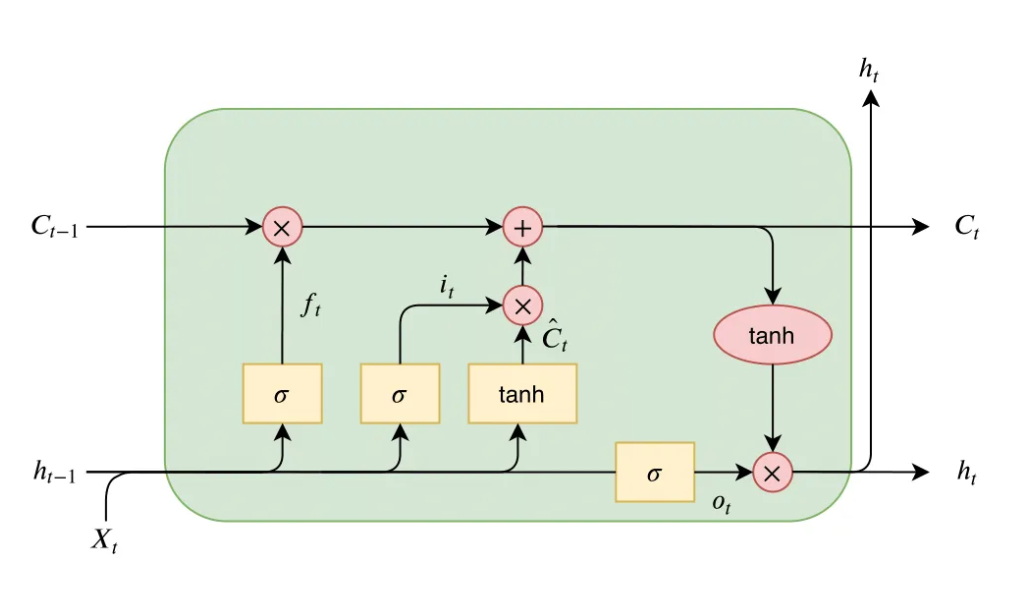

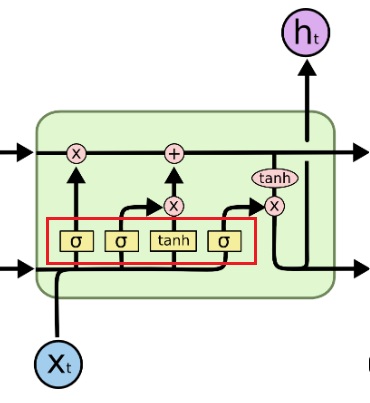

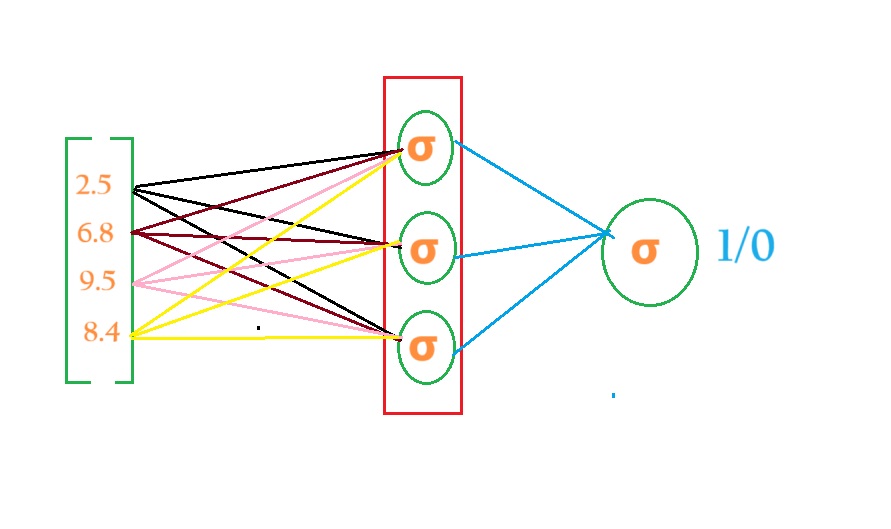

(1) Architectural Diagram Of LSTM Network.

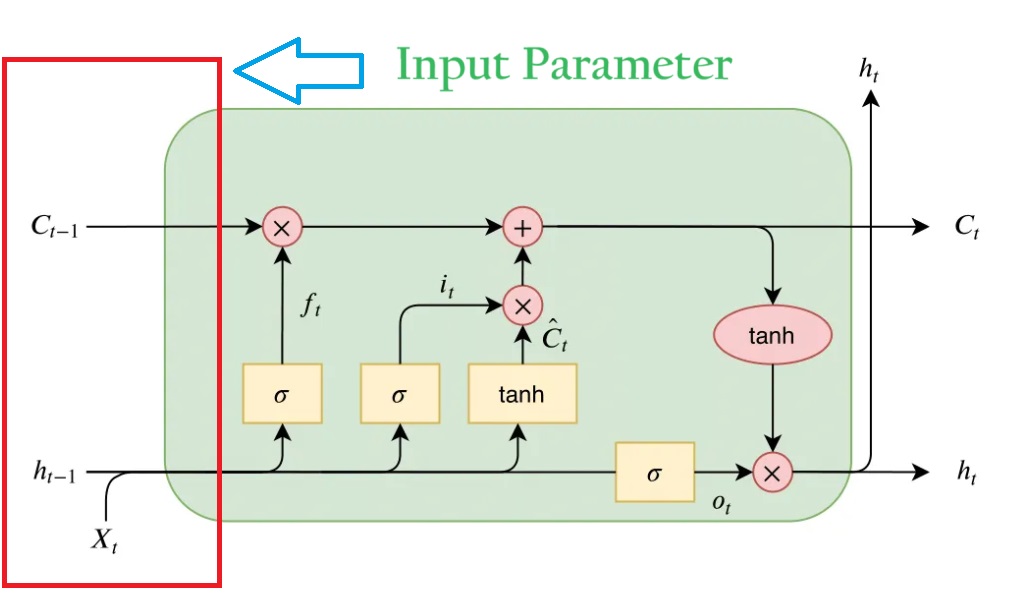

(2) Inputs For LSTM Network.

- An LSTM network takes three inputs as its parameter.

- Previous Time Stamp Cell State Value.

- Previous Time Stamp Hidden State Value.

- Current Time Stamp Input State.

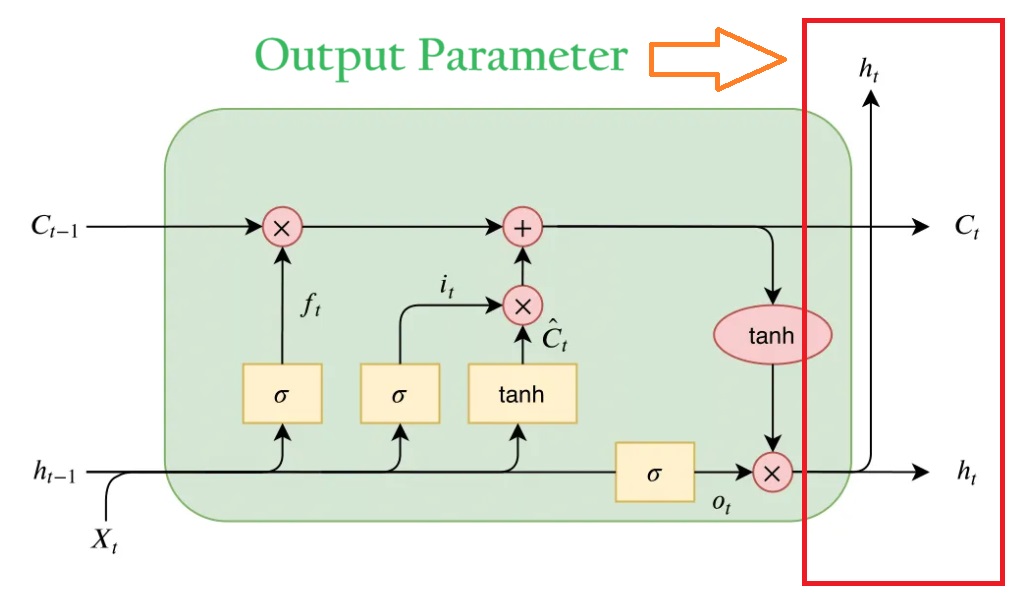

(2) Outputs For LSTM Network.

- An LSTM network has two outputs.

- Current Cell State Value.

- Current Hidden State Value.
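Written compactly, one time step maps the three inputs to the two outputs. Both outputs are then fed back in at the next step. In the formula below, LSTM simply names the whole unit; that notation is an illustrative assumption, not something defined elsewhere in this article:

$$
(h_t,\ C_t) = \mathrm{LSTM}\left(h_{t-1},\ C_{t-1},\ x_t\right)
$$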

(3) Core Idea Of LSTM Network.

- An LSTM unit is like a box whose main purpose is to store important information, for example about earlier words in a sentence, and use it to produce its output.

- The gates inside the unit decide what to store and what to discard.

- At each time step, mainly two things happen inside an LSTM unit.

- Update The Cell State.

- Calculate The Hidden State.

(4) Components Inside LSTM Network.

ht and Ct

- ht and Ct are both vectors, and they have the same dimension.

- For example, ht = [0.1, 0.9, 0.5] and Ct = [0.21, 0.59, 0.35].

Xt

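- Xt is the input at the current time step, for example the current word. An LSTM cannot process English words directly, so each word must first be converted into a vector (for example, a word embedding).

- Xt can have any dimension. It does not need to match the dimension of ht and Ct.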

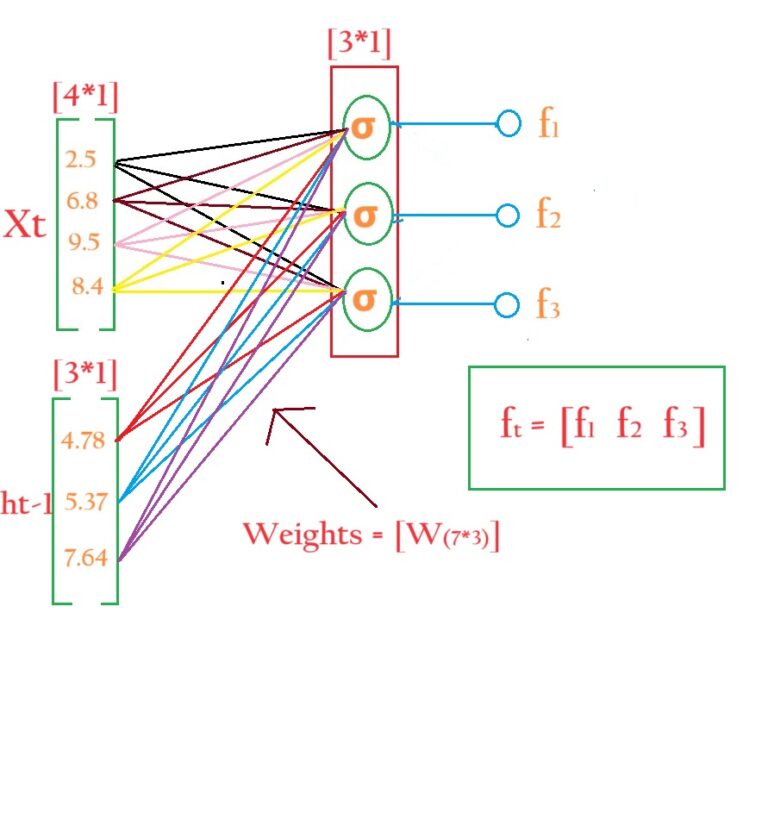

ft, it, ~Ct, ot

- ft, it, ~Ct, and ot are internal vectors computed inside the unit. Their dimension is the same as that of ht and Ct.

- For example, if ht and Ct are (3×1) vectors, then ft, it, ~Ct, and ot are also (3×1) vectors.

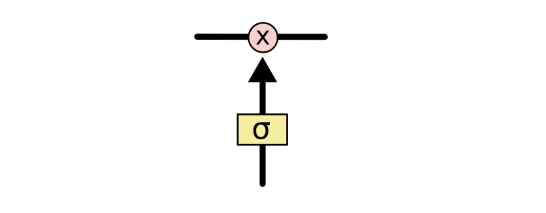

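Pointwise Operations:

- A pointwise operation is applied element by element. Pointwise multiplication and addition combine two vectors of the same dimension, while pointwise tanh acts on a single vector.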

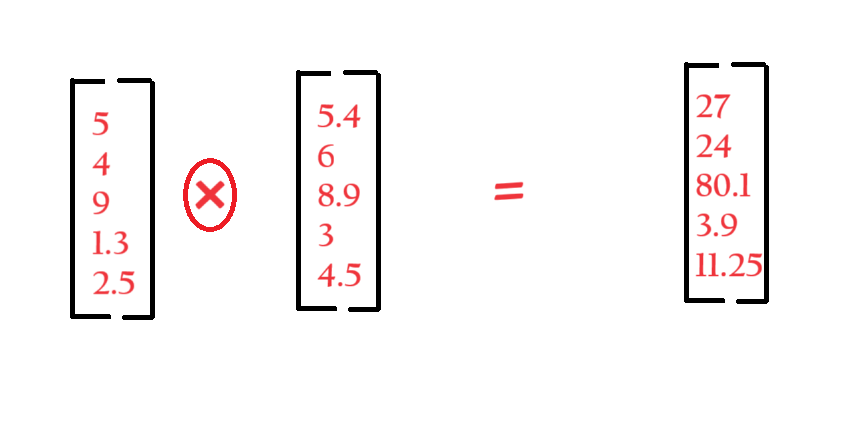

Pointwise Multiplication:

- Pointwise multiplication multiplies the numbers at the same position in two vectors.

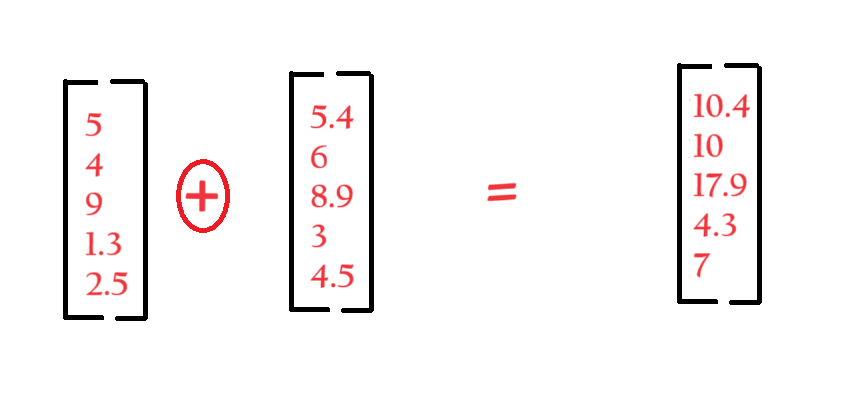

Pointwise Addition:

- Pointwise addition adds the numbers at the same position in two vectors.

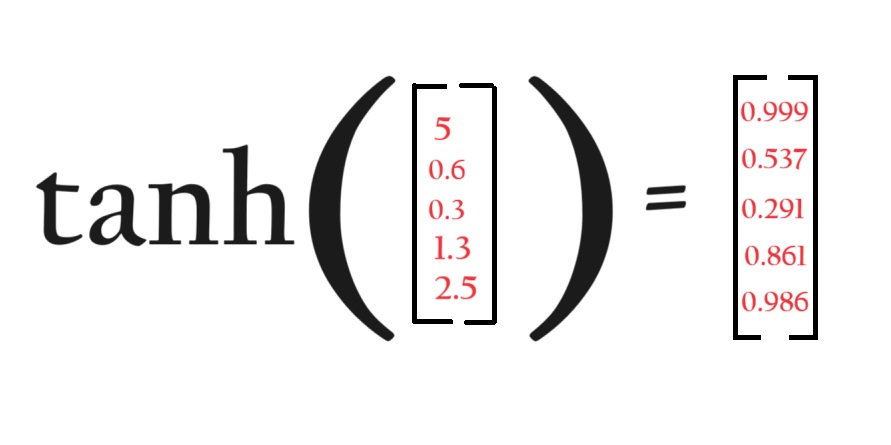

Pointwise tanh:

- Pointwise tanh applies the tanh function to each number of a vector, giving values between -1 and 1.
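The three pointwise operations can be sketched in plain Python, with lists standing in for vectors. The helper names below are illustrative only and do not come from any library.

```python
import math

def pointwise_mul(a, b):
    # Multiply the numbers at the same position in two vectors.
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return [x * y for x, y in zip(a, b)]

def pointwise_add(a, b):
    # Add the numbers at the same position in two vectors.
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return [x + y for x, y in zip(a, b)]

def pointwise_tanh(a):
    # Apply tanh to each number of a single vector (results lie between -1 and 1).
    return [math.tanh(x) for x in a]

a = [1.0, 2.0, 3.0]
b = [4.0, 5.0, 6.0]
print(pointwise_mul(a, b))  # [4.0, 10.0, 18.0]
print(pointwise_add(a, b))  # [5.0, 7.0, 9.0]
print(pointwise_tanh([-1.0, 0.0, 1.0]))
```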

Neural Network Layers:

- Each LSTM unit contains four dense (fully connected) neural network layers: three with a sigmoid activation (producing ft, it, and ot) and one with a tanh activation (producing ~Ct).

- These layers decide what information to remove from the cell state, what new information to add to it, and what to expose as the hidden state.

- They are like the brain of the LSTM unit.

- The number of nodes in these layers is a hyperparameter that you choose. It also determines the dimension of ht and Ct.

- If you set the number of nodes to 4, every dense layer inside the LSTM unit has 4 nodes, and ht, Ct, ft, it, ~Ct, and ot are all 4-dimensional vectors.
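Below is a minimal sketch of one dense layer in plain Python. It assumes the standard formulation, where the layer reads the concatenation [ht-1, xt] and holds one weight row per node. With hidden size 3 and input size 2, the weight matrix therefore has 3 rows of 5 weights. The function name `dense_gate` and the all-zero weights are hypothetical, chosen only to show the shapes.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function, output between 0 and 1.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dense_gate(W, b, h_prev, x_t, activation=sigmoid):
    # Hypothetical helper: one dense layer applied to [h_prev, x_t].
    v = h_prev + x_t  # list concatenation gives [h_{t-1}, x_t]
    out = []
    for row, bias in zip(W, b):  # one weight row and one bias per node
        if len(row) != len(v):
            raise ValueError("each weight row needs len(h_prev) + len(x_t) entries")
        z = sum(w * vi for w, vi in zip(row, v)) + bias
        out.append(activation(z))
    return out

hidden_size, input_size = 3, 2
W = [[0.0] * (hidden_size + input_size) for _ in range(hidden_size)]
b = [0.0] * hidden_size
h_prev = [0.1, 0.9, 0.5]
x_t = [1.0, 2.0]

print(dense_gate(W, b, h_prev, x_t))             # sigmoid layer: [0.5, 0.5, 0.5]
print(dense_gate(W, b, h_prev, x_t, math.tanh))  # tanh layer: [0.0, 0.0, 0.0]
```

The output always has one value per node, whatever the size of xt. This is why the number of nodes fixes the dimension of the gate vectors.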

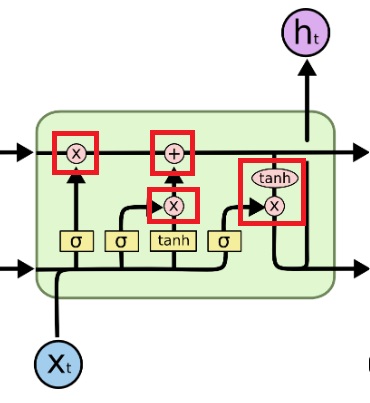

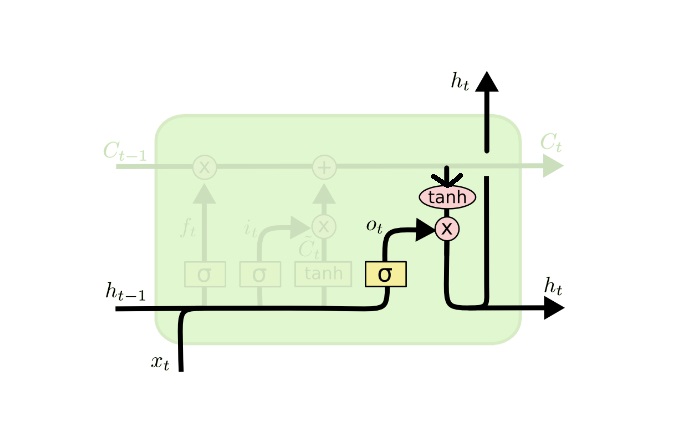

(5) Gates Inside LSTM Network.

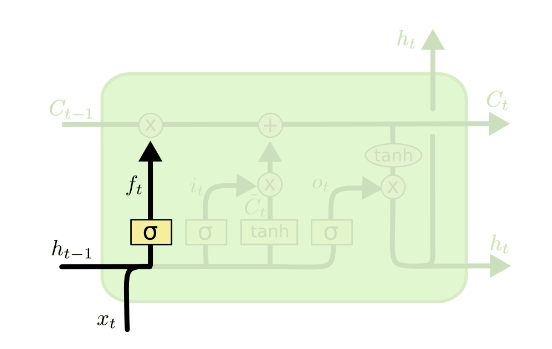

Forget Gate:

- The first step in our LSTM is to decide what information we’re going to throw away from the cell state.

- This decision is made by a dense layer with a sigmoid activation, called the “forget gate layer.”

- It looks at ht-1 and xt and outputs a number between 0 and 1 for each number in the cell state Ct−1, describing how much of that component should be let through.

- A 1 represents “completely keep this,” while a 0 represents “completely get rid of this.”

- Inside the Forget Gate, we perform 2 operations.

- Calculate ft. (It decides how much of each cell-state component to keep or forget.)

- Compute Ct-1 * ft. (This performs the forget operation.)

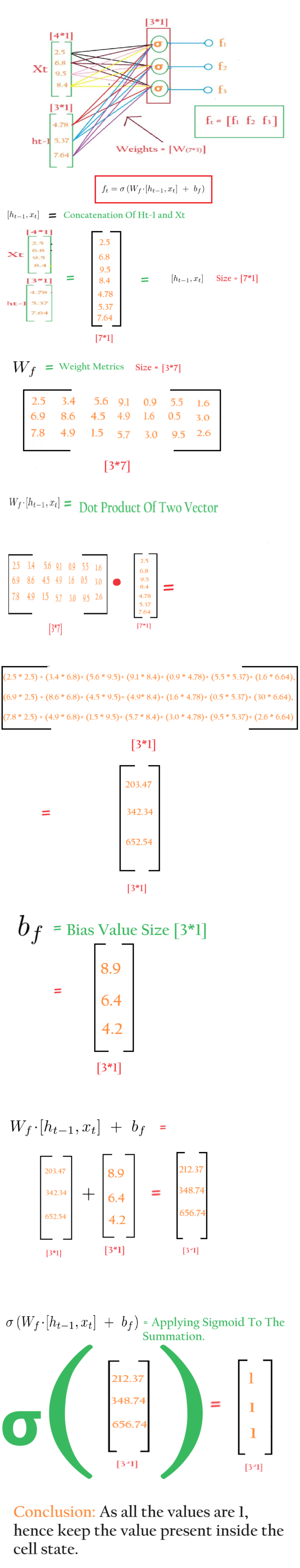

Calculate ft:

- Suppose you set the number of neurons in the dense layer to 3.

- Then Ct-1 and ht-1 also have size 3.

- Each node in the dense layer outputs a value between 0 and 1.

- Collecting the outputs of all nodes forms the vector ft.

- ft decides which parts of the cell state to keep and which to forget.

- Suppose we get ft = [0, 0.5, 1].

Calculate Ct-1 * ft:

- Ct-1 * ft is a pointwise multiplication between Ct-1 and ft.

- Suppose Ct-1 = [0.82, 0.64, 0.53].

- ft = [0, 0.5, 1].

- Ct-1 * ft = [(0.82*0), (0.64*0.5), (0.53*1)] = [0, 0.32, 0.53].

- The first component is forgotten completely, the second is halved, and the third is kept unchanged.
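The forget operation in this example can be checked with a few lines of plain Python, using lists as vectors. The rounding only hides floating-point noise in the printout.

```python
C_prev = [0.82, 0.64, 0.53]  # Ct-1 from the example
f_t = [0, 0.5, 1]            # ft from the example

# Pointwise multiplication performs the forget operation.
kept = [c * f for c, f in zip(C_prev, f_t)]
print([round(v, 2) for v in kept])  # [0.0, 0.32, 0.53]
```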

Forget Gate Calculation:

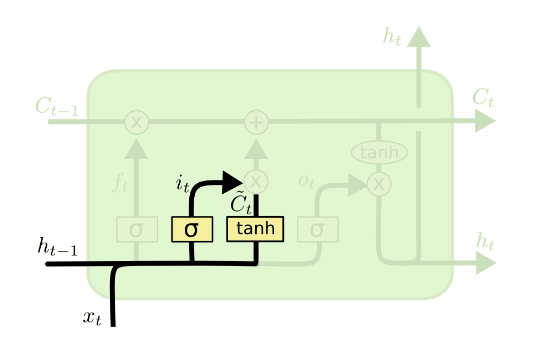
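The two forget-gate operations can be written with the usual notation, assumed here rather than defined elsewhere in this article:

- W_f and b_f are the forget layer's weights and bias.
- [h_{t-1}, x_t] is the concatenation of the previous hidden state and the current input.
- σ is the sigmoid function.
- ⊙ is pointwise multiplication.

$$
f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)
$$

$$
\text{forget operation: } C_{t-1} \odot f_t
$$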

Input Gate:

- The next step is to decide what new information we’re going to store in the cell state.

- This has two parts.

- First, a sigmoid layer called the “input gate layer” decides which values we’ll update.

- Next, a tanh layer creates a vector of new candidate values, ~Ct, that could be added to the state. In the next step, we’ll combine these two to create an update to the state.

- The input gate works in 3 stages.

- Calculate the candidate cell state (~Ct). (New information that may be added to the cell state.)

- Calculate “it”. (It decides how much of each candidate value to store in the cell state.)

- Calculate the new cell state: Ct = ft * Ct-1 + it * ~Ct, where both products are pointwise.
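The three stages can be written with the usual notation, assumed here:

- σ is the sigmoid function.
- ⊙ is pointwise multiplication.
- [h_{t-1}, x_t] is the concatenation of the previous hidden state and the current input.
- W_i and b_i are the input gate layer's weights and bias.
- W_C and b_C are the weights and bias of the candidate layer.

$$
\begin{aligned}
\tilde{C}_t &= \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right) \\
i_t &= \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t
\end{aligned}
$$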

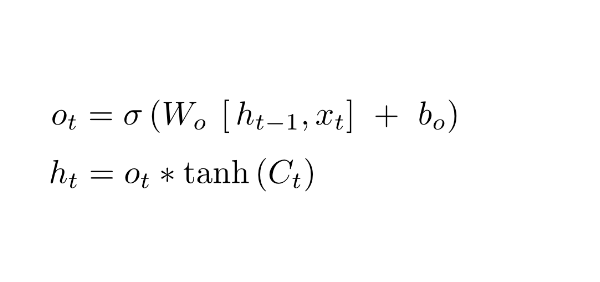

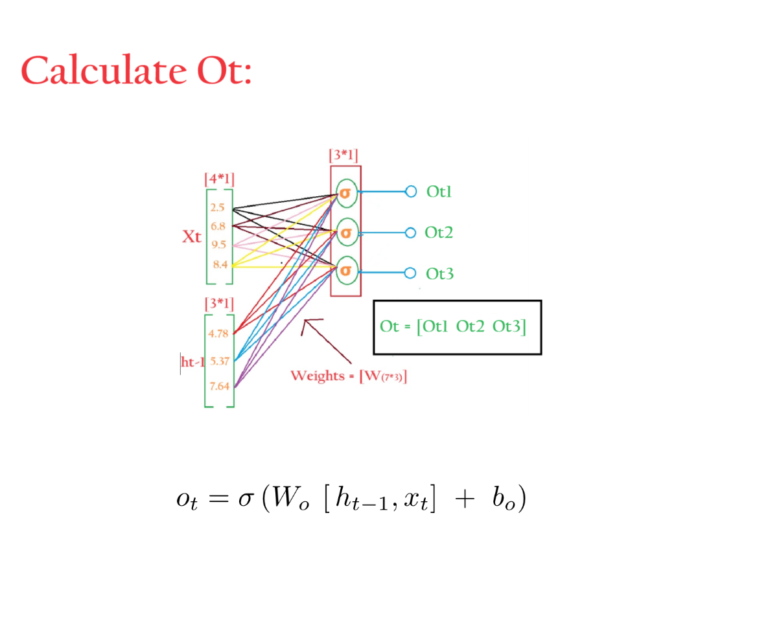
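The sketch below continues the forget-gate numbers used earlier (Ct-1 = [0.82, 0.64, 0.53], ft = [0, 0.5, 1]) to show the cell-state update. The values of it and ~Ct are hypothetical, picked only to illustrate the arithmetic.

```python
C_prev = [0.82, 0.64, 0.53]  # Ct-1 from the forget-gate example
f_t = [0, 0.5, 1]            # ft from the forget-gate example
i_t = [1.0, 0.5, 0.0]        # hypothetical input-gate output
C_tilde = [0.9, -0.4, 0.7]   # hypothetical candidate values ~Ct

# Ct = ft * Ct-1 + it * ~Ct, every operation pointwise
C_t = [f * c + i * ct for f, c, i, ct in zip(f_t, C_prev, i_t, C_tilde)]
print([round(v, 2) for v in C_t])  # [0.9, 0.12, 0.53]
```

Here is how to read the result:

- The first component is completely replaced by new information.
- The second is half forgotten and receives half of its candidate value.
- The third is kept unchanged, with nothing added.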

Output Gate:

- Finally, we need to decide what we’re going to output. This output will be based on our cell state but will be a filtered version.

- First, the current input xt and the previous hidden state ht-1 are passed into the third sigmoid layer, which produces ot.

- Then the new cell state Ct is passed through the tanh function (to push the values between −1 and 1).

- These two outputs are multiplied pointwise so that we output only the parts we decided to.

- The result is the new hidden state ht, which is also used for prediction.

- The output for the current time step therefore depends on the current cell state Ct.

- ht is derived from the long-term memory, i.e. the cell state Ct.

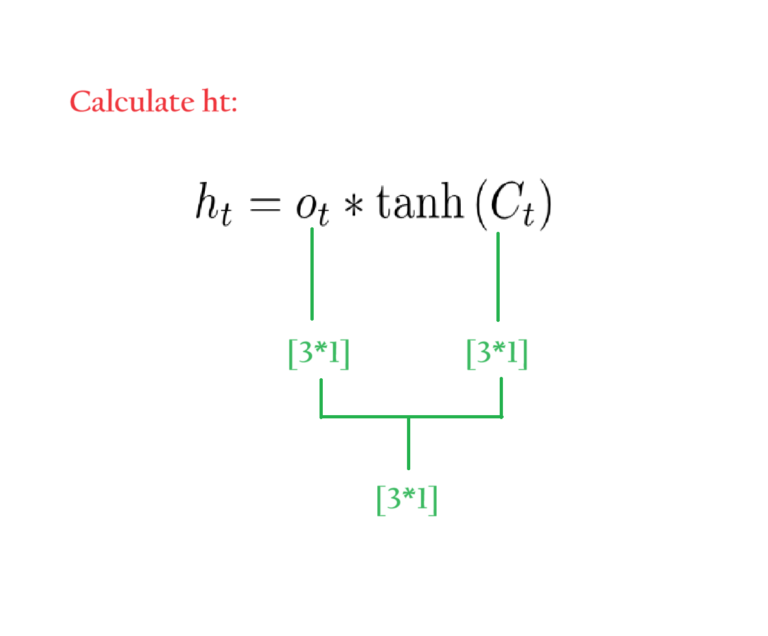

- ht is calculated in three steps.

- Compute tanh(Ct), an element-wise tanh on Ct that brings the values into the range (−1, 1).

- Calculate ot, which acts as a filter on tanh(Ct).

- Apply the filter by multiplying ot and tanh(Ct) pointwise: ht = ot * tanh(Ct).
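Here is a sketch of the three steps for ht, using hypothetical values for Ct and ot. In a real unit, ot comes from the output gate's sigmoid layer, so step 2 is only stubbed with fixed numbers here.

```python
import math

C_t = [0.9, 0.12, 0.53]  # hypothetical new cell state
o_t = [1.0, 0.0, 0.5]    # step 2 stubbed: hypothetical output-gate values

squashed = [math.tanh(c) for c in C_t]        # step 1: tanh(Ct)
h_t = [o * s for o, s in zip(o_t, squashed)]  # step 3: ht = ot * tanh(Ct)
print([round(v, 3) for v in h_t])             # [0.716, 0.0, 0.243]
```

A gate value of 0 hides that component of the cell state from the hidden state entirely, which is why the second entry of ht is 0.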

- Finally, the new cell state and new hidden state are carried over to the next time step.
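Putting the pieces together, below is a sketch of one LSTM time step and a loop that carries Ct and ht forward. It assumes the standard LSTM equations: sigmoid layers for ft, it and ot, a tanh layer for ~Ct, and no peephole connections. The weight initialisation, the parameter names (`Wf`, `bf` and so on) and the three input vectors are hypothetical placeholders, not trained values.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function, output between 0 and 1.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dense(W, b, v, activation):
    # One dense layer: activation(W . v + b), one weight row per node.
    out = []
    for row, bias in zip(W, b):
        if len(row) != len(v):
            raise ValueError("weight row length must equal len(v)")
        out.append(activation(sum(w * x for w, x in zip(row, v)) + bias))
    return out

def init_params(hidden_size, input_size, seed=0):
    # Hypothetical initialisation: small uniform weights, zero biases.
    rng = random.Random(seed)
    n_in = hidden_size + input_size
    params = {}
    for g in "fico":  # forget, input, candidate (~Ct), output
        params["W" + g] = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                           for _ in range(hidden_size)]
        params["b" + g] = [0.0] * hidden_size
    return params

def lstm_step(params, h_prev, C_prev, x_t):
    v = h_prev + x_t                                           # [h_{t-1}, x_t]
    f_t = dense(params["Wf"], params["bf"], v, sigmoid)        # forget gate
    i_t = dense(params["Wi"], params["bi"], v, sigmoid)        # input gate
    C_tilde = dense(params["Wc"], params["bc"], v, math.tanh)  # candidate ~Ct
    o_t = dense(params["Wo"], params["bo"], v, sigmoid)        # output gate
    # Ct = ft * Ct-1 + it * ~Ct (all pointwise)
    C_t = [f * c + i * ct for f, c, i, ct in zip(f_t, C_prev, i_t, C_tilde)]
    # ht = ot * tanh(Ct)
    h_t = [o * math.tanh(c) for o, c in zip(o_t, C_t)]
    return h_t, C_t

hidden_size, input_size = 3, 2
params = init_params(hidden_size, input_size)
h = [0.0] * hidden_size  # initial hidden state
C = [0.0] * hidden_size  # initial cell state
sequence = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical word vectors

for x_t in sequence:
    # The new states are carried over to the next time step.
    h, C = lstm_step(params, h, C, x_t)

print("h =", h)
print("C =", C)
```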

Conclusion:

- To conclude, the forget gate determines which information from the prior steps is still relevant and should be kept.

- The input gate decides what relevant information is added from the current step, and the output gate finalizes the next hidden state.