N-Grams/Bi-Grams/Tri-Grams

Table Of Contents:

- What Is N-Gram?

- Example Of N-Gram

- Unigram.

- Bigram.

- Trigram.

- Python Example.

- Pros & Cons Of N-Gram Technique.

(1) What Is N-Gram?

- An n-gram is a contiguous sequence of n words or characters taken from a text. The n-gram technique, a fundamental concept in natural language processing (NLP), analyzes the relationships within such sequences.

- It is widely used in various NLP tasks, such as language modeling, text classification, and information retrieval.

(2) Example Of N-Gram

Consider the sentence “I reside in Bengaluru”.

| Sl. No. | Type of n-gram | Generated n-grams |
| --- | --- | --- |
| 1 | Unigram | [“I”, “reside”, “in”, “Bengaluru”] |
| 2 | Bigram | [“I reside”, “reside in”, “in Bengaluru”] |
| 3 | Trigram | [“I reside in”, “reside in Bengaluru”] |
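The table above can be reproduced with a sliding window over the words of the sentence. The following is a minimal sketch, assuming naive whitespace tokenization; `word_ngrams` is a hypothetical helper name used only for illustration.

```python
def word_ngrams(sentence, n):
    """Return the word-level n-grams of `sentence`, in order of appearance."""
    tokens = sentence.split()  # assumption: words are separated by whitespace
    # Slide a window of n tokens across the sentence.
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


sentence = "I reside in Bengaluru"
unigrams = word_ngrams(sentence, 1)
bigrams = word_ngrams(sentence, 2)
trigrams = word_ngrams(sentence, 3)

print(unigrams)  # ['I', 'reside', 'in', 'Bengaluru']
print(bigrams)   # ['I reside', 'reside in', 'in Bengaluru']
print(trigrams)  # ['I reside in', 'reside in Bengaluru']
```

A sentence of k words yields k - n + 1 n-grams, which is why the four-word sentence gives four unigrams, three bigrams, and two trigrams.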

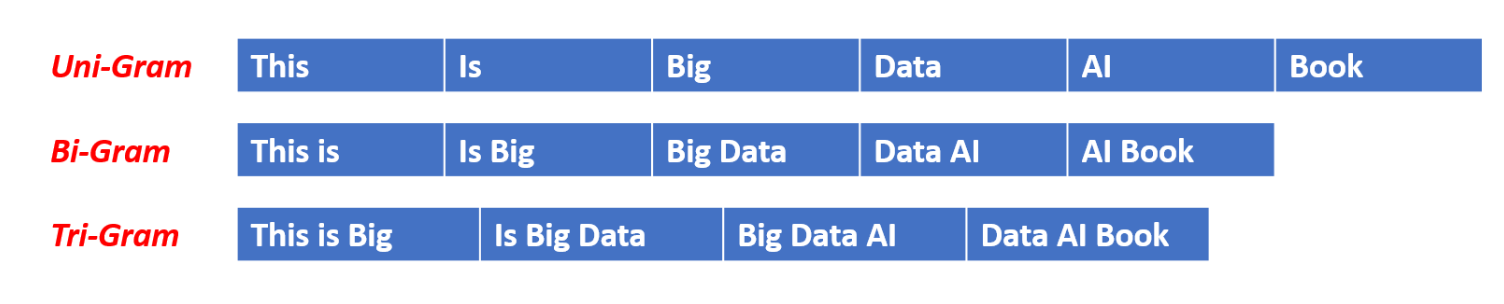

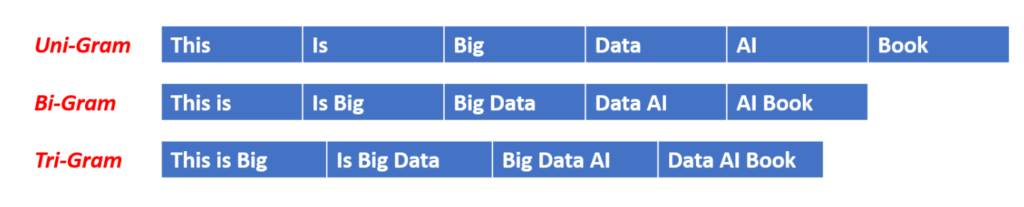

(3) Unigram

- In the Unigram technique, you create your vocabulary from single words only.

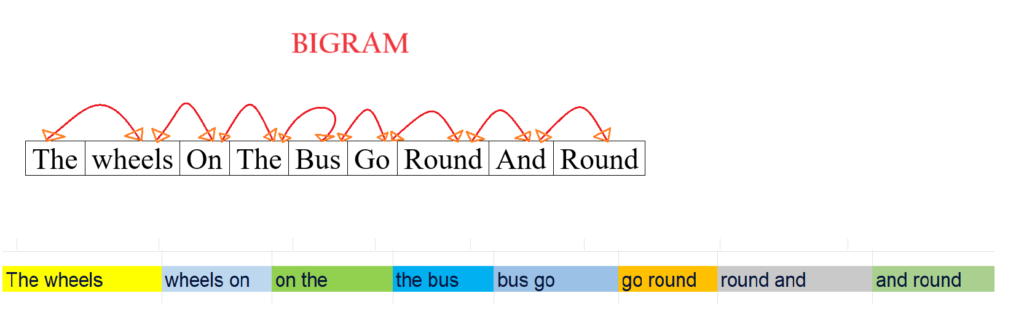

(4) Bigram

- In the Bigram technique, you create your vocabulary from pairs of consecutive words.

(5) Trigram

- In the Trigram technique, you create your vocabulary from sequences of three consecutive words.
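Building a vocabulary for any of these three techniques follows the same recipe: collect the distinct n-grams across all documents and give each one a column index. The sketch below assumes whitespace tokenization; `build_vocabulary` and the second sample sentence are hypothetical and exist only for illustration.

```python
def build_vocabulary(docs, n):
    """Map each distinct word n-gram found in `docs` to a column index."""
    seen = set()
    for doc in docs:
        tokens = doc.split()  # assumption: whitespace tokenization
        for i in range(len(tokens) - n + 1):
            seen.add(" ".join(tokens[i:i + n]))
    # Sort so that the index assignment is deterministic.
    return {gram: index for index, gram in enumerate(sorted(seen))}


docs = ["I reside in Bengaluru", "I work in Bengaluru"]

unigram_vocab = build_vocabulary(docs, 1)
bigram_vocab = build_vocabulary(docs, 2)
trigram_vocab = build_vocabulary(docs, 3)

print(unigram_vocab)
print(bigram_vocab)
print(trigram_vocab)
```

Each key of the returned dictionary becomes one dimension of the document vectors, so the choice of n directly determines what the feature space looks like.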

(6) Python Example

import pandas as pd

import numpy as np

# Three short sample documents with a binary label for each.

df = pd.DataFrame({'text': ['about the bird, the bird, bird, bird, bird', 'you heard about the bird', 'the bird is the word'], 'output': [1, 1, 0]})

df

from sklearn.feature_extraction.text import CountVectorizer

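# ngram_range=(2, 2) extracts bigrams only.

cv = CountVectorizer(ngram_range=(2, 2))

bi_grams = cv.fit_transform(df['text'])

print(cv.vocabulary_)

# Sort the vocabulary by column index to see which bigram each column represents.

sorted_dict = sorted(cv.vocabulary_.items(), key=lambda x: x[1])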

sorted_dict

print(bi_grams[0].toarray())

print(bi_grams[1].toarray())

print(bi_grams[2].toarray())
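To see what the first row of the bigram matrix is counting, the sketch below rebuilds the bigram counts for the first sample text in plain Python. It assumes `CountVectorizer`'s default preprocessing (lowercasing, and keeping only tokens of two or more word characters); `bigram_counts` is a hypothetical helper, not part of scikit-learn.

```python
import re
from collections import Counter


def bigram_counts(text):
    """Count word bigrams in `text` after simple lowercase tokenization."""
    # Keep tokens made of two or more word characters; punctuation is dropped.
    tokens = re.findall(r"\b\w\w+\b", text.lower())
    # Pair every token with its successor to form the bigrams.
    return Counter(" ".join(pair) for pair in zip(tokens, tokens[1:]))


counts = bigram_counts("about the bird, the bird, bird, bird, bird")
print(counts)
# Counter({'bird bird': 3, 'the bird': 2, 'about the': 1, 'bird the': 1})
```

Bigrams from the vocabulary that do not occur in this text simply get a count of zero in its row.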

(7) Pros & Cons Of N-Gram Technique.

Advantages:

Captures Semantic Meaning:

- Consider these two sentences:

- (1) This movie is very good.

- (2) This movie is not good.

- The two statements express opposite sentiments.

- The unigram vocabulary has 6 words, so each sentence becomes a vector in a 6-dimensional space.

- With unigram Bag-of-Words, only 2 of the 6 dimensions differ (“very” and “not”), so the two sentences look quite similar.

- With bigrams, the vocabulary again has 6 entries, but 4 of the 6 dimensions differ (“is very”, “very good”, “is not”, “not good”), so the sentences are treated as clearly different.

- Hence n-grams capture more of a sentence's meaning than single words do, because they preserve local word order.
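The comparison above can be checked with a few lines of code. This is a minimal sketch, assuming lowercase text with punctuation already removed; `ngram_set` and `differing_dimensions` are hypothetical helper names.

```python
def ngram_set(sentence, n):
    """Return the set of distinct word n-grams in `sentence`."""
    tokens = sentence.split()
    return {" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def differing_dimensions(a, b, n):
    """N-grams present in exactly one of the two sentences.

    With binary presence vectors, these are the dimensions where the
    two vectors disagree.
    """
    return ngram_set(a, n) ^ ngram_set(b, n)


s1 = "this movie is very good"
s2 = "this movie is not good"

for n in (1, 2):
    vocabulary = ngram_set(s1, n) | ngram_set(s2, n)
    diff = differing_dimensions(s1, s2, n)
    print(n, len(vocabulary), sorted(diff))
# 1 6 ['not', 'very']
# 2 6 ['is not', 'is very', 'not good', 'very good']
```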

Disadvantages:

Problem-1: High Dimension Issue

- As we move from unigrams to bigrams to trigrams, the number of unique word combinations typically increases.

- This increases the dimension of the vectors.

- This in turn consumes more computing resources and slows down the algorithms.
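The growth in dimensionality can be observed even on a tiny text. The sketch below counts the distinct unigrams, bigrams, and trigrams in a hypothetical sample string chosen for illustration; on a real corpus the gap is usually far larger.

```python
def unique_ngrams(tokens, n):
    """Return the set of distinct n-grams (as tuples) in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


# Hypothetical sample text with many repeated words.
text = ("to be or not to be that is the question "
        "to be is to do to do is to be")
tokens = text.split()

# Vocabulary size, i.e. vector dimension, for n = 1, 2, 3.
sizes = {n: len(unique_ngrams(tokens, n)) for n in (1, 2, 3)}
print(sizes)  # {1: 9, 2: 14, 3: 18}
```

In very short texts the count can level off or even shrink for larger n, because fewer windows fit into each text; the increase described above is the typical behavior on corpora of realistic size.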

Problem-2: Out Of Vocabulary

- The technique also suffers from the out-of-vocabulary problem: the vocabulary is fixed during training, so any new word or word combination that appears later is simply ignored.
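The out-of-vocabulary behavior can be illustrated with a small hand-rolled vectorizer. This is a sketch under simple assumptions (lowercasing and whitespace tokenization); `bigrams` and `transform` are hypothetical helpers, and the new sentence is made up for the example.

```python
def bigrams(text):
    """Return the word bigrams of `text` after lowercasing."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + 2]) for i in range(len(tokens) - 1)]


def transform(text, vocab):
    """Count known bigrams; collect the ones missing from `vocab`."""
    index = {gram: i for i, gram in enumerate(vocab)}
    counts = [0] * len(vocab)
    ignored = []
    for gram in bigrams(text):
        if gram in index:
            counts[index[gram]] += 1
        else:
            ignored.append(gram)  # unseen at training time, so it is dropped
    return counts, ignored


# The vocabulary is fixed from the training text.
train_docs = ["the bird is the word"]
vocab = sorted({gram for doc in train_docs for gram in bigrams(doc)})
print(vocab)  # ['bird is', 'is the', 'the bird', 'the word']

vector, ignored = transform("the bird is the new word", vocab)
print(vector)   # [1, 1, 1, 0]
print(ignored)  # ['the new', 'new word']
```

The word "new" never reaches the vector: both bigrams that contain it are discarded, and the information they carry is lost.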

Other Advantages:

Simplicity: The N-Gram technique is relatively simple to understand and implement, making it a popular choice for various NLP tasks.

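Robustness: Character-level n-grams are generally robust to noise and spelling errors in the input text, because a misspelled word still shares most of its character n-grams with the correct spelling. Word-level n-grams do not have this property, since a misspelled word produces different n-grams.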

Language Modeling: N-Grams can be used to build effective language models, which are essential for tasks like text prediction, machine translation, and speech recognition.

Flexibility: The N-Gram technique can be applied to a wide range of NLP tasks, such as text classification, sentiment analysis, and named entity recognition.

Efficiency: N-Gram-based models can be computationally efficient, especially when compared to more complex NLP techniques.
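The language-modeling use mentioned above can be sketched with a bigram model that estimates the probability of the next word from raw counts. This is a minimal illustration, assuming whitespace tokenization and no smoothing; the corpus and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count, for every word, which words follow it and how often."""
    following = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            following[prev][nxt] += 1
    return following


def next_word_probabilities(model, prev):
    """Estimate P(next | prev) as count(prev next) / count(prev *)."""
    counts = model.get(prev, Counter())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


# Hypothetical toy corpus.
corpus = ["the bird is the word", "the bird is loud", "the bird sings"]
model = train_bigram_model(corpus)

probs = next_word_probabilities(model, "the")
print(probs)  # {'bird': 0.75, 'word': 0.25}
print(next_word_probabilities(model, "cat"))  # {} because "cat" was never seen
```

Predicting the next word then amounts to picking the entry with the highest probability, which is the basic idea behind n-gram text prediction.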

Other Disadvantages:

Data Sparsity: As the value of n increases, the number of possible n-grams grows exponentially, leading to data sparsity and the need for large training datasets to effectively capture all relevant n-gram patterns.

Limited Context: N-Grams only consider a fixed-length context, which may not be sufficient for capturing the full semantic and syntactic relationships within a text.

Inability To Handle Unseen N-Grams: N-Gram-based models can struggle with handling unseen n-grams that are not present in the training data, which can limit their performance on novel or diverse text.

Sensitivity To Domain And Language: The performance of N-Gram-based models can be highly dependent on the specific domain and language of the text being analyzed, and they may not generalize well to other domains or languages.

Lack Of Deeper Understanding: While N-Grams can capture surface-level patterns in text, they do not provide a deeper understanding of the underlying meaning and context, which can be important for more advanced NLP tasks.
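The data sparsity point above comes down to simple arithmetic: with a vocabulary of V words there are V^n possible n-grams, while a corpus of N tokens contains only N - n + 1 n-gram positions. The sketch below uses hypothetical values for V and N to show how quickly the best-case coverage collapses.

```python
def possible_ngrams(vocabulary_size, n):
    """Number of distinct n-grams that could exist: V ** n."""
    return vocabulary_size ** n


def max_coverage(vocabulary_size, corpus_tokens, n):
    """Upper bound on the fraction of possible n-grams a corpus can contain."""
    positions = corpus_tokens - n + 1  # number of n-gram windows in the corpus
    return min(1.0, positions / possible_ngrams(vocabulary_size, n))


vocabulary_size = 10_000   # hypothetical vocabulary size
corpus_tokens = 1_000_000  # hypothetical corpus length in tokens

for n in (1, 2, 3):
    print(n, possible_ngrams(vocabulary_size, n),
          max_coverage(vocabulary_size, corpus_tokens, n))
```

Even in the best case, this corpus can contain less than 1% of the possible bigrams and about one millionth of the possible trigrams, so most valid n-grams are never observed during training.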