Softmax Activation Function

Table Of Contents:

- What Is SoftMax Activation Function?

- Formula & Diagram For SoftMax Activation Function.

- Example Of SoftMax Activation Function.

- Why Is SoftMax Function Important?

- When To Use SoftMax Function?

- Advantages & Disadvantages Of SoftMax Activation Function.

(1) What Is SoftMax Activation Function?

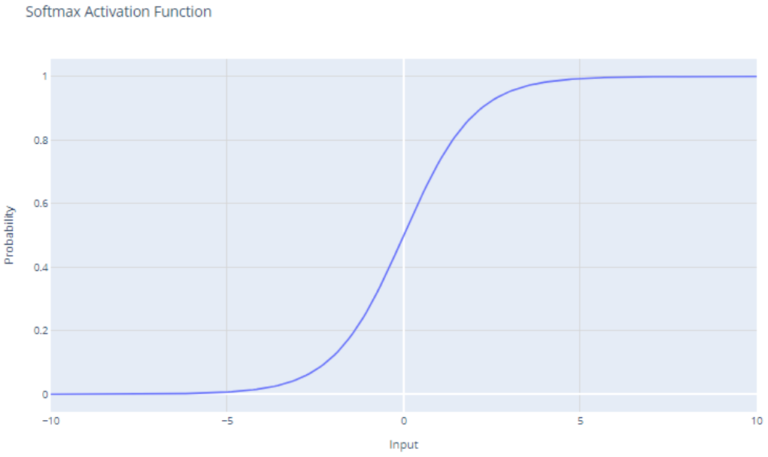

The Softmax Function is an activation function used in machine learning and deep learning, particularly in multi-class classification problems.

Its primary role is to transform a vector of arbitrary real values (often called scores or logits) into a vector of probabilities.

The sum of these probabilities is one, which makes it handy when the output needs to be a probability distribution.

(2) Formula & Diagram For SoftMax Activation Function.

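Formula:

$$\text{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad i = 1, \dots, K$$

where $z_i$ is the raw score (logit) for class $i$ and $K$ is the number of classes.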
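The softmax formula (e raised to each score, divided by the sum of e raised to every score) translates almost directly into code. The following is a minimal pure-Python sketch; the function name `softmax` is our own choice, and this direct version does not yet guard against overflow for very large inputs.

```python
import math

def softmax(z):
    """Apply the softmax formula to a list of real-valued scores."""
    exps = [math.exp(v) for v in z]      # e^{z_i} for every score
    total = sum(exps)                    # sum over j of e^{z_j}
    return [e / total for e in exps]     # normalize so the outputs sum to 1

probs = softmax([1.0, 2.0, 3.0])
print([round(p, 3) for p in probs])      # [0.09, 0.245, 0.665]
```

Every output lies between 0 and 1, the outputs sum to 1, and the largest score receives the largest probability.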

Diagram:

(3) Example Of SoftMax Activation Function.

- Suppose we have the following dataset, where each observation has five features (FeatureX1 to FeatureX5) and the target variable has three classes.

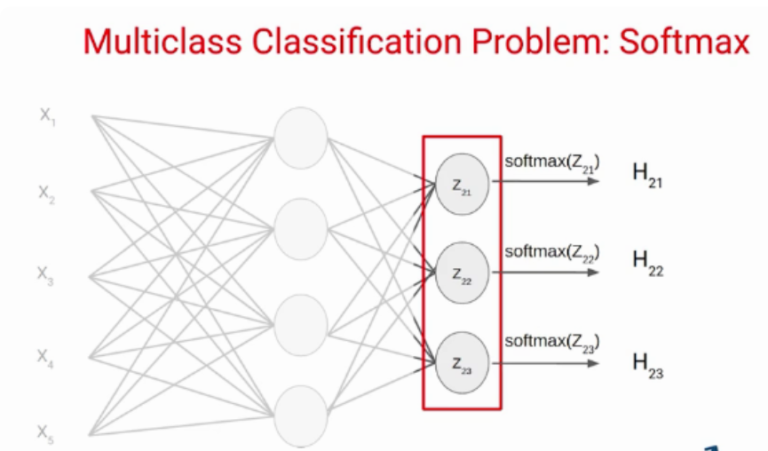

- Now let’s create a simple neural network for this problem. It has an input layer with five neurons, one for each of the five features in the dataset.

- Next, there is one hidden layer with four neurons. Each of these neurons combines the inputs with its weights and bias to calculate a value, represented here as Zij.

For example, the first neuron of the first layer is represented as Z11. Similarly, the second neuron of the first layer is represented as Z12, and so on.

We then apply an activation function to these values, say tanh, and send the results to the output layer.

The number of neurons in the output layer depends on the number of classes in the dataset. Since we have three classes in the dataset, we will have three neurons in the output layer.

Each of these neurons gives the probability of one class. This means the first neuron gives the probability that the data point belongs to class 1.

Similarly, the second neuron gives the probability that the data point belongs to class 2, and so on.
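The network described above (five inputs, one hidden layer of four tanh neurons, three output neurons) can be sketched as a forward pass. The weights, biases, and input values below are hypothetical numbers chosen only for illustration; in a real model the parameters would be learned during training. The helper names `dense` and `random_matrix` are our own. The three numbers produced at the end are the raw output values Z21, Z22, Z23, before any output activation is applied.

```python
import math
import random

random.seed(0)  # reproducible hypothetical weights

def dense(inputs, weights, biases):
    """Compute z_j = sum_i(w_ji * x_i) + b_j for every neuron j in a layer."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def random_matrix(rows, cols):
    """Hypothetical weights drawn uniformly from [-1, 1]."""
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

# Hypothetical parameters: 5 inputs -> 4 hidden neurons -> 3 output neurons
W1, b1 = random_matrix(4, 5), [0.0] * 4
W2, b2 = random_matrix(3, 4), [0.0] * 3

x = [0.5, -1.2, 3.0, 0.7, -0.3]   # one observation: FeatureX1..FeatureX5 (made-up values)
z1 = dense(x, W1, b1)              # Z11..Z14
h = [math.tanh(v) for v in z1]     # tanh activation in the hidden layer
z2 = dense(h, W2, b2)              # Z21, Z22, Z23: one raw score per class
print(len(h), len(z2))             # 4 3
```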

Solving Using Sigmoid Function:

- Suppose we calculate the Z values using the weights and biases of this layer and apply the sigmoid activation function to these values.

- We know that the sigmoid activation function gives a value between 0 and 1. Suppose these are the values we get as output.

There are two problems in this case:

First, if we apply a threshold of, say, 0.5, this network says the input data point belongs to two classes. Second, these probability values are independent of each other.

That means the probability that the data point belongs to class 1 does not take into account the probability of the other two classes.

This is why the sigmoid activation function is not preferred for multi-class classification problems, where each data point belongs to exactly one class.
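Both problems can be reproduced numerically. The sketch below applies sigmoid independently to three hypothetical output values (2.33, -1.46, and 0.56, the same numbers used in the softmax example later in this article) and uses a 0.5 threshold. Two classes pass the threshold, the values do not sum to 1, and changing the third score has no effect on the first class.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

z = [2.33, -1.46, 0.56]                   # hypothetical Z21, Z22, Z23
probs = [sigmoid(v) for v in z]
print([round(p, 3) for p in probs])       # [0.911, 0.188, 0.636]

# Problem 1: with a 0.5 threshold, two classes are "predicted" at once
predicted = [i + 1 for i, p in enumerate(probs) if p >= 0.5]
print(predicted)                          # [1, 3]
print(sum(probs) > 1)                     # True: the values are not a distribution

# Problem 2: changing class 3's score leaves class 1's value untouched
z_changed = [2.33, -1.46, 5.0]
print(sigmoid(z_changed[0]) == probs[0])  # True
```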

Solving Using SoftMax Activation Function:

- Instead of using sigmoid, we will use the Softmax activation function in the output layer in the above example.

- The Softmax activation function calculates relative probabilities. That means it uses the values of Z21, Z22, and Z23 together to determine each final probability value.

- Let’s see how the softmax activation function actually works. Like the sigmoid activation function, the SoftMax function returns a probability for each class.

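- Here is the equation for the SoftMax activation function:

$$\text{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad i = 1, \dots, K$$

Here, z represents the values from the neurons of the output layer (Z21, Z22, and Z23 in our example). The exponential acts as the non-linear part and makes every value positive. Each exponential is then divided by the sum of all the exponentials, which normalizes the values so that they sum to 1 and can be read as probabilities.

Note that when there are only two classes, softmax reduces to the sigmoid activation function: the probability of the first class equals the sigmoid of the difference between the two scores, z1 - z2. In other words, sigmoid can be seen as a special case of the Softmax function.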
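The two-class equivalence follows from dividing the numerator and denominator by e^(z1): e^(z1) / (e^(z1) + e^(z2)) = 1 / (1 + e^(-(z1 - z2))), which is exactly the sigmoid of z1 - z2. The short sketch below checks this numerically for a few arbitrary score pairs; the helper names are our own.

```python
import math

def softmax(z):
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

# For two classes, softmax's first output equals sigmoid of the score difference
for z1, z2 in [(0.0, 0.0), (2.0, -1.0), (-3.5, 0.25)]:
    p_softmax = softmax([z1, z2])[0]
    p_sigmoid = sigmoid(z1 - z2)
    assert math.isclose(p_softmax, p_sigmoid, rel_tol=1e-12)
    print(round(p_softmax, 4), round(p_sigmoid, 4))
```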

- Let’s understand how softmax works with a simple example. We have the following neural network.

- Suppose the values of Z21, Z22, and Z23 come out to be 2.33, -1.46, and 0.56, respectively. The SoftMax activation function is applied to these values together, which gives probabilities of approximately 0.838, 0.019, and 0.143.

- These are the probabilities that the data point belongs to the respective classes. Note that the sum of the probabilities in this case is equal to 1.

- In this case, it is clear that the input belongs to class 1. Also, because all three probabilities share the same denominator, if the value for any of the other classes changes, the probability of the first class changes as well.
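The numbers in this example can be checked with a short computation. The sketch below (pure Python, helper name `softmax` our own) computes the probabilities for Z21 = 2.33, Z22 = -1.46, Z23 = 0.56, then changes only Z23 to a hypothetical 1.5 to show that the probability of class 1 drops even though its own score did not change.

```python
import math

def softmax(z):
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

z = [2.33, -1.46, 0.56]                    # Z21, Z22, Z23 from the example
probs = softmax(z)
print([round(p, 3) for p in probs])        # [0.838, 0.019, 0.143]
print(round(sum(probs), 6))                # 1.0

# Raise only the third score: class 1's probability drops
z_changed = [2.33, -1.46, 1.5]
print(round(softmax(z_changed)[0], 3))     # 0.686
```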

(4) Why SoftMax Activation Function Is Important.

Softmax comes into play in various machine learning tasks, particularly those involving multi-class classification.

It gives the probability distribution of multiple classes, making the decision-making process straightforward and effective.

By converting raw scores to probabilities, it produces values that are easy to interpret: each one can be read as the model’s estimated probability that the input belongs to a given class.

The output of this process is another vector of the same length, in which each element is the probability assigned to the corresponding class.

The values of the output vector are in the range of 0 to 1, and the total sum of the elements will be 1.

(5) When To Use SoftMax Function?

The Softmax Function comes into its own when dealing with multi-class classification tasks in machine learning. In these scenarios, you need your model to predict one out of several possible outcomes.

Softmax is typically applied in the final layer of a neural network, converting the raw output scores (logits) from the previous layers into probabilities that sum to one. It is used both during training, where it is usually paired with a cross-entropy loss, and at prediction time.

(6) Advantages & Disadvantages Of SoftMax Function.

Advantages Of SoftMax Activation Function:

Probabilistic Interpretation

The Softmax function converts a vector of real numbers into a probability distribution, with each output value ranging from 0 to 1.

The resulting probabilities sum up to 1, which allows for a direct interpretation of the values as probabilities of particular outcomes or classes.

Suitability for Multi-Class Classification Problems

In machine learning, the Softmax function is widely used for multi-class classification problems, where an instance belongs to exactly one of many possible classes.

It provides a way to allocate probabilities to each class, thus helping decide the most likely class for an input instance.
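To turn the probabilities into a single decision, one typically picks the class with the highest probability (the argmax). Because the exponential is strictly increasing and every output shares the same denominator, softmax never changes the ordering of the scores, so the argmax of the probabilities equals the argmax of the raw scores. In the sketch below, the helper names and the scores are hypothetical.

```python
import math

def softmax(z):
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])

class_names = ["class 1", "class 2", "class 3"]
scores = [0.4, 1.9, -0.7]                  # hypothetical raw output scores
probs = softmax(scores)

predicted = argmax(probs)
print(class_names[predicted], round(probs[predicted], 3))
assert predicted == argmax(scores)         # softmax preserves the ranking
```

The probabilities add information the argmax alone does not give: how much more likely the chosen class is than the alternatives.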

Works Well with Gradient Descent

The Softmax function is differentiable, which means its gradient with respect to its input values can be computed.

Therefore, it can be used in conjunction with gradient-based optimization methods (such as Gradient Descent), which is essential for training deep learning models.
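The softmax derivatives have a simple closed form: dp_i/dz_j = p_i * (delta_ij - p_j), where delta_ij is 1 when i = j and 0 otherwise. The sketch below computes this Jacobian and compares it against a central finite-difference approximation. The step size `eps` and the test scores are arbitrary illustrative choices, and subtracting the maximum score inside `softmax` only guards against overflow without changing the result.

```python
import math

def softmax(z):
    m = max(z)                                   # shift for numerical safety
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_jacobian(z):
    """J[i][j] = dp_i/dz_j = p_i * (delta_ij - p_j)."""
    p = softmax(z)
    n = len(p)
    return [[p[i] * ((1.0 if i == j else 0.0) - p[j]) for j in range(n)]
            for i in range(n)]

def numerical_jacobian(z, eps=1e-6):
    """Central finite differences, perturbing one input at a time."""
    n = len(z)
    J = [[0.0] * n for _ in range(n)]
    for j in range(n):
        plus = list(z)
        plus[j] += eps
        minus = list(z)
        minus[j] -= eps
        p_plus, p_minus = softmax(plus), softmax(minus)
        for i in range(n):
            J[i][j] = (p_plus[i] - p_minus[i]) / (2 * eps)
    return J

z = [2.33, -1.46, 0.56]
analytic = softmax_jacobian(z)
numeric = numerical_jacobian(z)
max_error = max(abs(a - b) for ra, rn in zip(analytic, numeric)
                for a, b in zip(ra, rn))
print(max_error < 1e-8)                          # True
```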

Stability in Numerical Computation

When implemented with standard techniques, such as subtracting the largest input before exponentiating, computing log-softmax with the log-sum-exp trick, or fusing softmax with the cross-entropy loss, the Softmax function can be computed in a numerically stable way. This is important in deep learning models, where numerical calculations can span several orders of magnitude.
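A common way to achieve this stability is the log-sum-exp trick: log p_i = z_i - m - log(sum_j e^(z_j - m)), where m is the largest score. The sketch below uses it to compute log-softmax and a cross-entropy loss directly from raw scores; the function names and scores are our own illustrative choices. With scores this large, the direct formula would fail, because `math.exp(1000)` raises `OverflowError` in Python.

```python
import math

def log_softmax(z):
    """log p_i = z_i - m - log(sum_j exp(z_j - m)), with m = max(z)."""
    m = max(z)
    log_sum = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - log_sum for v in z]

def cross_entropy_from_logits(z, target_index):
    """Negative log-probability of the correct class, computed without forming p."""
    return -log_softmax(z)[target_index]

z = [1000.0, 998.0, 990.0]          # scores far too large for a naive exp()
print([round(v, 4) for v in log_softmax(z)])
print(round(cross_entropy_from_logits(z, 0), 4))
```

Working in log space also avoids taking the logarithm of a probability that has underflowed to zero.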

Enhances Model Generalization

When trained with a cross-entropy loss, Softmax encourages the model to be confident about the correct class while simultaneously reducing its confidence in incorrect classes, because raising one probability necessarily lowers the others.

This can help the model learn clear decision boundaries, although softmax by itself is not a safeguard against overfitting.

Supports Real-World Decision Making

Because Softmax outputs class probabilities, it conveys how certain the model is about each prediction, which matches how decisions are made in many real-world scenarios (although these probabilities are not always well calibrated).

This is particularly useful in decision-making applications where understanding the certainty of model predictions can be critical.

Applicability Across Various Networks

The Softmax function can work effectively across numerous network structures, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

It’s also a crucial component of architectures like transformers used in natural language processing (NLP).
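In a transformer, softmax turns the similarity scores between a query and a set of keys into attention weights that sum to 1; those weights are then used to average the corresponding value vectors. The sketch below shows this for a single query with scaled dot-product scores (dot products divided by the square root of the vector dimension). All vectors are hypothetical toy values, and real implementations operate on batched matrices.

```python
import math

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Single-query scaled dot-product attention (toy, unbatched)."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)              # one weight per key, summing to 1
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(len(values[0]))]
    return weights, output

query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]        # hypothetical key vectors
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]    # hypothetical value vectors
weights, output = attend(query, keys, values)
print([round(w, 3) for w in weights], [round(o, 3) for o in output])
```

Keys that align with the query receive larger weights, so their value vectors dominate the output.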

Disadvantages Of SoftMax Activation Function:

Sensitivity to Magnitudes: The softmax function is sensitive to the magnitudes of its input values (the logits). Larger logits result in higher probabilities, and scaling all logits up amplifies small differences between them. Computing the exponentials directly can also overflow or underflow for very large or very small inputs, which causes numerical instability unless the implementation guards against it.

Mutual Exclusivity Assumption: The softmax function assumes that the classes are mutually exclusive, that is, each input belongs to exactly one class. Because the probabilities must sum to 1, raising the probability of one class necessarily lowers the others. In tasks with overlapping classes, such as multi-label classification where an input can belong to several classes at once, this assumption does not hold and can limit the model’s performance; independent sigmoid outputs are usually used instead.

Lack of Robustness to Outliers: Softmax can be sensitive to outliers in the input values. Extreme values in the logits can heavily influence the resulting probabilities, potentially skewing the classification decisions. Outliers or extreme values in the input can disrupt the balance of the probability distribution and impact the model’s robustness.

Limited Applicability: The softmax function is primarily used in multi-class classification problems. It may not be suitable or necessary for other types of tasks, such as regression or binary classification, where different activation functions (such as sigmoid or linear) are commonly used.
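The first disadvantage, sensitivity to magnitudes, can be shown numerically. In the minimal sketch below (pure Python, helper names our own, input values hypothetical), multiplying the scores [1, 2] by 10 keeps their ranking but moves the distribution from roughly 0.27/0.73 to about 0.00005/0.99995, and very large scores make a direct `math.exp` overflow. A common remedy for the overflow is to subtract the largest score m before exponentiating. This leaves the result unchanged because the common factor e^(-m) cancels between the numerator and the denominator.

```python
import math

def naive_softmax(z):
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def stable_softmax(z):
    m = max(z)                                # largest score
    exps = [math.exp(v - m) for v in z]       # every exponent is now <= 0
    total = sum(exps)
    return [e / total for e in exps]

# Magnitude sensitivity: same ranking, very different confidence
print([round(p, 3) for p in stable_softmax([1.0, 2.0])])      # [0.269, 0.731]
print(stable_softmax([10.0, 20.0]))

# Overflow: the naive formula fails, the shifted one does not
try:
    naive_softmax([1000.0, 1001.0])
except OverflowError:
    print("naive softmax overflowed")
print([round(p, 3) for p in stable_softmax([1000.0, 1001.0])])  # [0.269, 0.731]
```

The shift fixes the overflow, but not the sharpening: large logits still produce near-certain probabilities.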