What Are Neural Networks?

Table of Contents:

- Neural Networks?

- What Are Neurons?

- What Are Layers?

- Weights & Biases.

- Activation Functions.

- Feedforward and Backpropagation.

- Deep Neural Networks.

(1) Neural Networks?

- Neural networks, also known as artificial neural networks (ANNs), are computational models loosely inspired by the structure and function of the neurons in the human brain.

- They consist of interconnected artificial neurons, also called nodes or units, organized in layers.

(2) What Are Neurons?

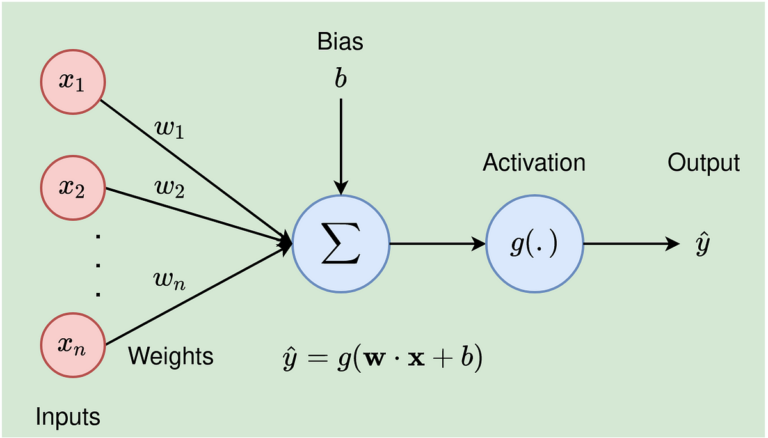

- Neurons are the basic building blocks of neural networks.

- Artificial neurons receive input signals, perform computations, and produce an output signal.

- Each neuron computes a weighted sum of its inputs, adds a bias, applies an activation function to the result, and passes that output on to the neurons in the next layer.
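The computation a single neuron performs can be sketched in a few lines of Python. This is a minimal illustration, assuming a sigmoid activation and plain lists for the inputs and weights; it includes the per-neuron bias described under Weights & Biases. The names `sigmoid` and `neuron_output` and the sample numbers are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid: squashes any real number into the range (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0 here, so exp(z) cannot overflow
    return e / (1.0 + e)

def neuron_output(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: activation(weighted sum of inputs + bias)."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # w1*x1 + w2*x2 + ... + b
    return sigmoid(z)

# z = 0.5*1.0 - 0.25*2.0 + 0.1*3.0 + 0.2 = 0.5, so the output is sigmoid(0.5) ≈ 0.6225
print(neuron_output([1.0, 2.0, 3.0], [0.5, -0.25, 0.1], bias=0.2))
```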

(3) What Are Layers?

- Neural networks are typically organized into layers, each made up of multiple neurons.

- The input layer receives input data, while the output layer produces the final output or prediction.

- In between, there can be one or more hidden layers that perform intermediate computations.
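The layered structure can be sketched with fully connected (dense) layers, where each layer is stored as one row of weights per neuron plus one bias per neuron. This is a minimal sketch assuming dense layers, a tanh activation in the hidden layer, and an identity activation on the output; the helper names `init_layer` and `dense_layer` and the sizes (3 inputs, 4 hidden neurons, 2 outputs) are hypothetical.

```python
import math
import random

def init_layer(n_in: int, n_out: int, rng: random.Random):
    """One dense layer: a row of n_in weights for each of its n_out neurons, plus one bias each."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def dense_layer(inputs, weights, biases, activation):
    """Each neuron in the layer sees every value produced by the previous layer."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

rng = random.Random(0)                       # fixed seed for a reproducible example
hidden_w, hidden_b = init_layer(3, 4, rng)   # hidden layer: 4 neurons, 3 inputs each
out_w, out_b = init_layer(4, 2, rng)         # output layer: 2 neurons, 4 inputs each

x = [0.5, -1.0, 2.0]                                 # input layer: simply holds the data
h = dense_layer(x, hidden_w, hidden_b, math.tanh)    # intermediate (hidden) values
y = dense_layer(h, out_w, out_b, lambda z: z)        # final output, identity activation
print(len(x), len(h), len(y))  # 3 4 2
```

The input layer performs no computation here; it is just the data vector `x`. Each weight matrix has one row per neuron in its layer and one column per neuron in the previous layer, so the chosen layer sizes fix the shape of every weight matrix.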

(4) Weights & Biases.

- Each connection between neurons has an associated weight, which determines the strength or importance of that connection.

- Weights are adjusted during training to minimize the network’s prediction error (the loss).

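- Biases are additional learnable parameters, one per neuron, that are added to the weighted sum before the activation function is applied; they shift the point at which the neuron activates, acting as an adjustable threshold.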
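The bias's role as a threshold is easiest to see with a step activation, which outputs 1 when the weighted sum plus bias is positive and 0 otherwise. In this illustrative sketch (the name `step_neuron` and all numbers are hypothetical), both weights stay at 1.0 and only the bias changes, turning the same neuron from a logical AND into a logical OR.

```python
def step_neuron(inputs, weights, bias):
    """Fire (return 1) if the weighted sum plus bias is positive, otherwise return 0."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0

weights = [1.0, 1.0]
patterns = [(0, 0), (0, 1), (1, 0), (1, 1)]

# bias = -1.5: the weighted sum must exceed 1.5, so both inputs must be on (AND).
print([step_neuron(p, weights, bias=-1.5) for p in patterns])  # [0, 0, 0, 1]
# bias = -0.5: the weighted sum only needs to exceed 0.5, so one input is enough (OR).
print([step_neuron(p, weights, bias=-0.5) for p in patterns])  # [0, 1, 1, 1]
```

Trained networks learn real-valued weights and biases instead of hand-picked ones, and they usually replace the step function with a differentiable activation so that gradients can be computed.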

(5) Activation Functions.

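- Activation functions introduce non-linearity into the neural network, enabling it to learn complex patterns and make non-linear decisions. Without them, any stack of layers would collapse into a single linear transformation, no more expressive than one layer.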

- Common activation functions include sigmoid, tanh, ReLU (Rectified Linear Unit), and softmax.
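Minimal Python versions of the four functions named above, written for a single value (sigmoid, tanh, ReLU) or a list of scores (softmax). This is an illustrative standard-library sketch; the function names are chosen for this example, and deep-learning libraries provide optimized, vectorized equivalents.

```python
import math

def sigmoid(z: float) -> float:
    """Maps any real z into (0, 1); written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z: float) -> float:
    """Maps any real z into (-1, 1) and, unlike sigmoid, is centred on zero."""
    return math.tanh(z)

def relu(z: float) -> float:
    """Rectified Linear Unit: keeps positive values, replaces negatives with 0."""
    return max(0.0, z)

def softmax(scores: list[float]) -> list[float]:
    """Turns a vector of scores into probabilities that sum to 1.

    Subtracting the largest score first leaves the result unchanged
    but keeps math.exp from overflowing on large inputs.
    """
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 3), round(tanh(z), 3), relu(z))
print([round(p, 3) for p in softmax([1.0, 2.0, 3.0])])  # [0.09, 0.245, 0.665]
```

ReLU is a common default for hidden layers because it is cheap to compute, while softmax is usually reserved for the output layer of a multi-class classifier, where its outputs can be read as class probabilities.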

(6) Feedforward and Backpropagation.

- Feedforward (the forward pass) is the process of passing input data through the network, layer by layer, from the input layer to the output layer to produce a prediction.

- Backpropagation is the algorithm used to train neural networks by determining how much each weight and bias contributed to the prediction error.

- It computes the gradient of the error with respect to every parameter by applying the chain rule backwards, from the output layer toward the input layer; an optimization technique such as gradient descent then uses these gradients to update the weights and biases.
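Both passes fit in a short pure-Python sketch for a network with one hidden layer. It assumes sigmoid activations, a squared-error loss, and plain full-batch gradient descent; the helper names (`feedforward`, `backprop`, `train_step`), the XOR toy dataset, and the settings (3 hidden neurons, learning rate 1.0, 5000 steps, seed 42) are illustrative choices, not a reference implementation.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def init_params(n_in: int, n_hidden: int, rng: random.Random) -> dict:
    """Small random weights and zero biases for one hidden layer and one output neuron."""
    return {
        "W1": [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)],
        "b2": 0.0,
    }

def feedforward(p: dict, x: list[float]):
    """Forward pass: input -> hidden layer -> single output neuron."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y_hat

def loss(p: dict, data) -> float:
    """Mean of 0.5 * (prediction - target)^2 over the dataset."""
    return sum(0.5 * (feedforward(p, x)[1] - y) ** 2 for x, y in data) / len(data)

def backprop(p: dict, x: list[float], y: float) -> dict:
    """Gradient of 0.5 * (y_hat - y)^2 for one example, via the chain rule."""
    h, y_hat = feedforward(p, x)
    d_out = (y_hat - y) * y_hat * (1.0 - y_hat)  # dLoss / d(output pre-activation)
    # Send the output error back through each weight and the hidden sigmoid's slope.
    d_hid = [d_out * w * hj * (1.0 - hj) for w, hj in zip(p["W2"], h)]
    return {
        "W1": [[d * xi for xi in x] for d in d_hid],
        "b1": d_hid,
        "W2": [d_out * hj for hj in h],
        "b2": d_out,
    }

def train_step(p: dict, data, lr: float) -> None:
    """One full-batch gradient-descent step: average the gradients, move against them."""
    n = len(data)
    grads = [backprop(p, x, y) for x, y in data]
    for j, row in enumerate(p["W1"]):
        for i in range(len(row)):
            row[i] -= lr * sum(g["W1"][j][i] for g in grads) / n
        p["b1"][j] -= lr * sum(g["b1"][j] for g in grads) / n
        p["W2"][j] -= lr * sum(g["W2"][j] for g in grads) / n
    p["b2"] -= lr * sum(g["b2"] for g in grads) / n

# XOR is not linearly separable: a single neuron cannot fit it, one hidden layer can.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
params = init_params(n_in=2, n_hidden=3, rng=random.Random(42))
before = loss(params, data)
for _ in range(5000):
    train_step(params, data, lr=1.0)
after = loss(params, data)
print(f"loss before training: {before:.4f}, after: {after:.4f}")
```

`backprop` works from the output back toward the input: the output error `d_out` is multiplied by each outgoing weight and by the hidden sigmoid's local slope h(1 - h) to give each hidden neuron's share of the error. Whether this particular run fully solves XOR depends on the random starting weights; in practice, frameworks with automatic differentiation compute these gradients instead of hand-written code.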

(7) Deep Neural Networks.

- Deep neural networks are neural networks with multiple hidden layers.

- Deep architectures can learn increasingly complex and abstract representations of the input data, with each hidden layer building on the features computed by the layer before it.
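A sketch of how depth shows up in code: if a network is described by a list of layer sizes, adding hidden layers only means lengthening that list, and the same forward pass handles any depth. It assumes fully connected layers, ReLU on the hidden layers, no activation on the output, and He-style initialization (standard deviation sqrt(2 / n_in)); the helper names and the [4, 8, 8, 8, 3] shape are hypothetical.

```python
import random

def relu(z: float) -> float:
    return max(0.0, z)

def build_network(layer_sizes: list[int], seed: int = 0):
    """Return one (weights, biases) pair for each pair of adjacent layer sizes.

    Weights use He-style scaling (std = sqrt(2 / n_in)), a common choice for ReLU layers.
    """
    rng = random.Random(seed)
    network = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        std = (2.0 / n_in) ** 0.5
        weights = [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]
        network.append((weights, [0.0] * n_out))
    return network

def forward(network, x: list[float]) -> list[float]:
    """Run x through every layer: ReLU on hidden layers, no activation on the output."""
    last = len(network) - 1
    for k, (weights, biases) in enumerate(network):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        x = z if k == last else [relu(v) for v in z]
    return x

def count_params(network) -> int:
    """Total number of weights and biases across all layers."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in network)

# 4 input features, three hidden layers of 8 neurons, 3 output values.
deep = build_network([4, 8, 8, 8, 3])
print(len(deep) - 1, "hidden layers,", count_params(deep), "parameters")  # 3 hidden layers, 211 parameters
print(forward(deep, [1.0, 0.5, -0.5, 2.0]))
```

Even with only 8 neurons per hidden layer, every added layer brings its own weight matrix and bias vector, so the parameter count grows with depth.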