What Is Self Attention ?

Table Of Contents:

- What Is The Most Important Thing In NLP Applications?

- Problem With Word2Vec Model.

- The Problem Of Average Meaning.

- What Is Self Attention?

(1) What Is The Most Important Thing In NLP Applications?

- Before understanding the self-attention mechanism, we must understand the most important thing in any NLP application.

- The answer is how you convert any words into numbers.

- Our computers don’t understand words they only understand numbers.

- Hence the researchers first worked in this direction to convert any words into vectors.

- We got some basic techniques like,

- One Hot Encoding.

- Bag Of Words’

- N-Grams

- TF/IDF

- Word2Vec

- We have already studied all these topics previously.

- The most advanced technique we use today is ‘Word2Vec’.

- But there are some basic problems involved in the ‘Word2Vec’ model.

(2) Problem With ‘Word2Vec’ Model.

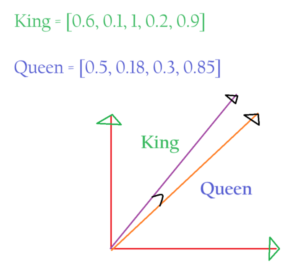

- In word embedding, we have the power to capture the semantic meaning of the word.

- Capturing the semantic meaning means capturing the meaning of the word based on the context it’s being used.

- If two words are similar, then their vector representations will also be similar.

Note:

- The word embedding is done based on other words in that sentence or corpus.

- If the word is being used in a different sentence or corpus its vector form will be different.

- Each number in the vector format represents some characteristics.

(3) The Problem Of Average Meaning.

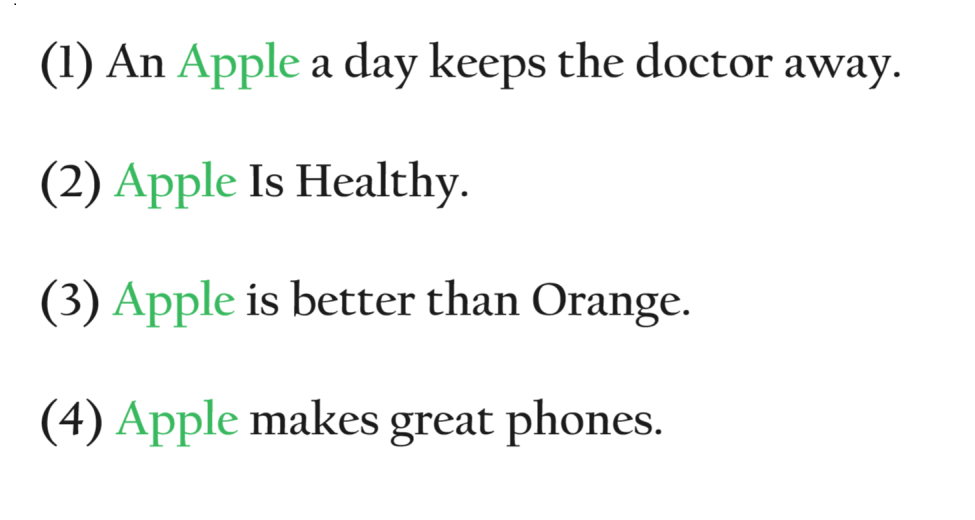

- Suppose we have the above corpus with different sentences.

- Let’s focus on the word ‘Apple’ and its word embedding vector.

- We have decided to keep our word embeddings into a 2-dimensional vector.

- The first dimension represents the test and the second dimension represents the technology part.

- The problem with Word Embedding is that it does not capture the meaning of the word, it captures the Average Meaning of the word.

- On average that particular word in your corpus used in which meaning.

- You can see in the above example ‘Apple’ being used as a fruit most of the time.

- Hence the vector representation of the ‘Apple’ will inclined towards fruit, not as a phone.

- In this 2-dimensional vector [test, technology] the test component will be more compared to the technology component.

- Let us assume in our corpus out of 10000 sentences, 9000 sentences contain an Apple as a fruit, and the rest of 1000 sentences contain an Apple as a phone.

- The overall vectors would look something like this [0.9, 0.3]. Test component = 0.9 and the Technology component is = 0.3.

Note:

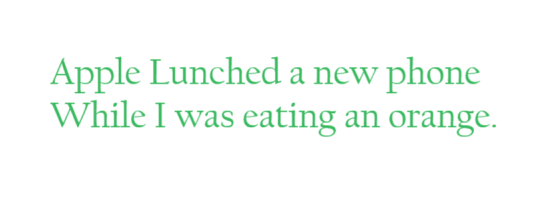

- The problem is that the ‘Word Embedding’ of that word is done once but used many times in different contexts.

- This means Word Embedding is static.

- Apple = [0.9, 0.3] We are going to use it every time, but it will fail when we use Apple as the phone in another sentence.

- Suppose we are making an NLP application which performs translation between English to Hindi.

- The first thing you will do is to convert each words into its respective embedding format.

- Embedding of Apple = [text, technology] = [0.9, 0.3].

- This is the static embedding, and it is considering Apple as fruit wherever it’s been used.

- Hence it’s the demerit of the Word2Vec embedding.

- But in our above example, Apple is used as a Phone.

- Ideally what should happen is that instead of static embedding we should have contextual embedding.

- This means the embedding value should dynamically change based on the context in which the word is being used.

- We need a “Smart Contextual Embedding” which will do embedding based on the context it’s been used.

- Hence the word embedding value will change in different contexts for the same word.

- To solve this problem we have a ‘Self Attention’ mechanism.

(4) What Is Self Attention?

- Self Attention is a mechanism where it takes static embedding as an input and generate good contextual embedding.

- Which are much better to use in any kind of application.